Lecture

In the era of search engines, SEO can be reduced to three main elements, each mirroring a phase of the search engine: crawling a site (discovery), indexing (which also includes filtering), and ranking (algorithms).

Today, in the first of three parts, we will talk about how search engines discover a site.

Reducing SEO to these basic elements gives SEOs a kind of template for working on search engine promotion. With this template, you can work effectively without going into every specific and subtlety of what happens inside each phase.

And sometimes that is good for us, because search engines are top-secret monsters ... monster robots.

This thesis is based on the following assumptions:

Search engines crawl, index and rank web pages. Therefore, SEOs should base all their tactics on these three steps. Here are the simple conclusions that will be useful for SEO, regardless of the competition in the niche:

But of course, Google, like all other search engines, exists for one goal: to grow a business by satisfying user needs. Therefore, we must constantly remember the following:

And of course, since SEO is by nature a competition in which you must beat your competitors to reach the top of the list, we should note the following:

Considering the above, we can derive several SEO tactics for each phase of the search engine.

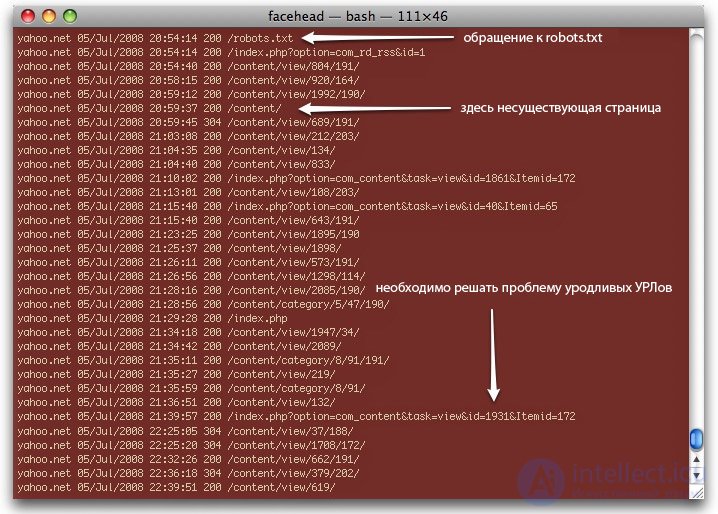

SEO begins with a study of how a search bot discovers pages. When Googlebot, for example, crawls our site, every request it makes is recorded in the server logs. These carry the following information:

Special scripts (such as AudetteMedia's logfilt), which help to quickly parse large volumes of such logs, are used in different situations:

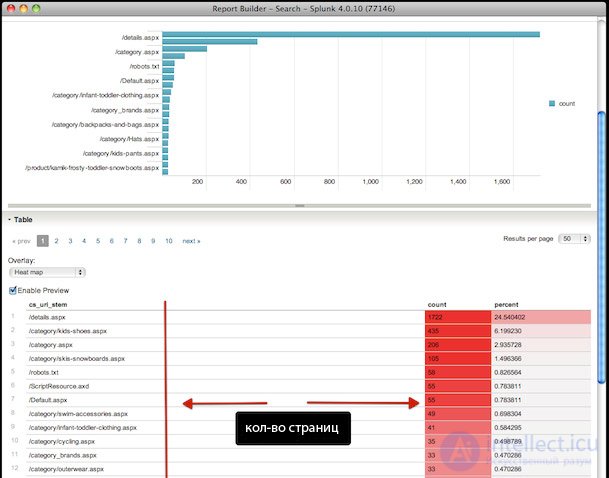

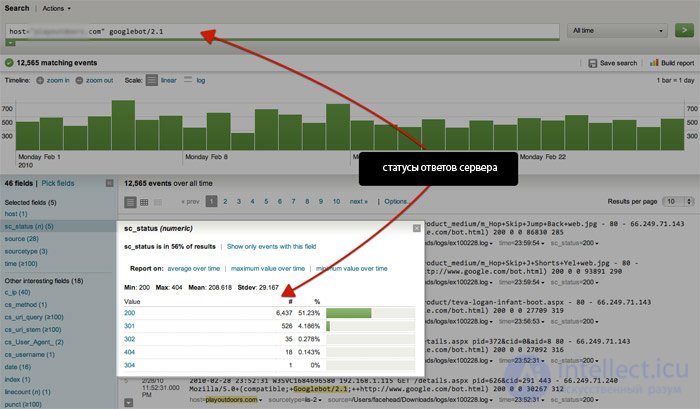

At the corporate level, there are analysis tools such as http://www.splunk.com/, which can break all of this information down by user-agent and sort it by server status code, time and date:

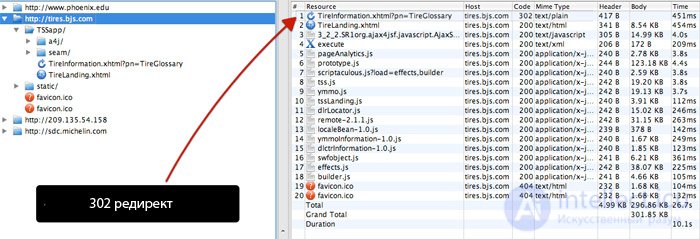
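The kind of breakdown described above (bot requests grouped by status code and by date) can be sketched in a few lines of Python. This is a minimal illustration, not how logfilt or Splunk work internally: it assumes the server writes the common "combined" log format, the sample lines are invented, and the names `bot_hits` and `summarize` are hypothetical. Matching on the user-agent string alone does not prove a request really came from Google, since the header can be spoofed.

```python
import re
from collections import Counter

# Assumption: the server writes the "combined" access log format.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def bot_hits(lines, bot_token="Googlebot"):
    """Yield (date, status, path) for requests whose user-agent contains bot_token."""
    for line in lines:
        match = LOG_LINE.match(line)
        if match and bot_token in match.group("agent"):
            # The timestamp looks like "10/Oct/2023:13:55:36 +0000"; keep the date part.
            date = match.group("time").split(":", 1)[0]
            yield date, int(match.group("status")), match.group("path")


def summarize(lines, bot_token="Googlebot"):
    """Count bot requests per status code and per day."""
    by_status, by_date = Counter(), Counter()
    for date, status, _path in bot_hits(lines, bot_token):
        by_status[status] += 1
        by_date[date] += 1
    return by_status, by_date


# Invented sample lines, for illustration only.
GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" "%s"' % GOOGLEBOT,
    '66.249.66.1 - - [10/Oct/2023:13:55:40 +0000] "GET /old-page HTTP/1.1" 302 0 "-" "%s"' % GOOGLEBOT,
    '203.0.113.7 - - [11/Oct/2023:09:01:02 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 Firefox/118.0"',
    '66.249.66.1 - - [11/Oct/2023:09:05:00 +0000] "GET /missing HTTP/1.1" 404 312 "-" "%s"' % GOOGLEBOT,
]

if __name__ == "__main__":
    statuses, dates = summarize(SAMPLE)
    print(dict(statuses))
    print(dict(dates))
```

In practice you would pass an open log file instead of `SAMPLE`, since `summarize` accepts any iterable of lines.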

Xenu is a full-fledged crawler application that shows in detail what a search bot encounters when it visits a site. The application works with more than 10,000 pages, so it is suitable for use at the corporate level. Here are some tips for working with Xenu or similar tools:

This quick and simple technique helps to find response codes such as 302 and others.

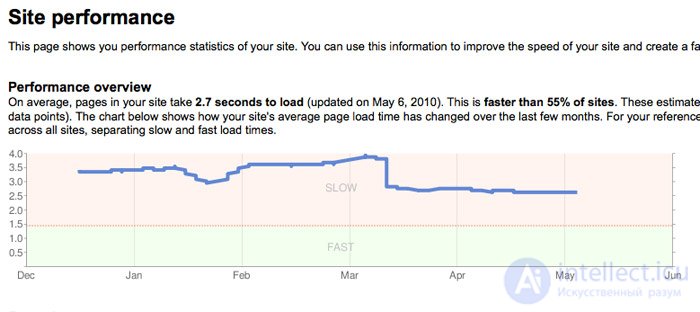
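Once a crawler has produced a list of URLs with their response codes, picking out the 302s is simple filtering. The sketch below assumes the crawl results are already available as `(url, status)` pairs; the function name `flag_responses` and the sample rows are hypothetical and do not reflect Xenu's export format.

```python
# 302 and 307 are the temporary redirect codes in HTTP.
TEMPORARY_REDIRECTS = {302, 307}


def flag_responses(crawl_rows):
    """Split (url, status) pairs into temporary redirects, other redirects, and errors."""
    report = {"temporary_redirect": [], "other_redirect": [], "error": []}
    for url, status in crawl_rows:
        if status in TEMPORARY_REDIRECTS:
            report["temporary_redirect"].append(url)
        elif 300 <= status < 400:
            report["other_redirect"].append(url)
        elif status >= 400:
            report["error"].append(url)
        # 2xx responses need no attention and are skipped.
    return report


# Invented crawl results, for illustration only.
CRAWL_ROWS = [("/", 200), ("/sale", 302), ("/old-shoes", 301), ("/missing", 404)]

if __name__ == "__main__":
    for kind, urls in flag_responses(CRAWL_ROWS).items():
        print(kind, urls)
```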

Google Webmaster Tools also has useful information for SEOs about page discovery, duplicate content, and how often a bot visits the site:

There are also other tools with which you can look at the pages of the site through the eyes of a search bot (for example, Lynx and SEO Browser).

The most important thing in SEO is accurate information, but even more important is what you do with it. What matters is how you interpret the information, not how you obtain it.

By systematizing their work, savvy SEOs build a pattern of actions or methods based on the theory of discovery, indexing and ranking, which subsequently leads their sites straight to the top of search results.

Last time, in the first of a series of three articles, we looked at the first stage of the search engine: site discovery. We also considered possible SEO methods for each of the stages.

Before continuing, I think it is worth recalling what was discussed in the first article:

The search engine discovers, indexes and ranks web pages. SEOs should base their promotion tactics on these three stages of the search engine's work. Therefore, the following conclusions were made:

But of course, Google, like any other search engine, exists for one goal: to build and grow a business by satisfying user needs. Therefore, we must constantly remember the following:

Knowing all of the above, we can develop several promotion methods for each phase of the search engine, which in the end can add up to a single SEO strategy.

Indexing is the next step after a page has been discovered. Identifying duplicate content is the main function of this step. It may be no exaggeration to say that every large site has some non-unique content, albeit internationally.

Online stores can have duplicate content in the form of identical products. We can state this with confidence, having extensive experience working with retailers such as Zappos and Charming Shoppes.

The problems are even greater for the news portals of well-known newspapers and publications. Marshall Simmonds and his team, working on The New York Times and other publications, are confronted daily with duplicate content, which makes up a major part of their SEO work.

A site will never be specifically penalized for having duplicate content on its pages. But there are filters that can detect identical or slightly modified content on multiple pages. This is one of the main problems for SEO.

Duplicates also affect the visibility of the site, so you need to reduce their number to zero. Having different versions of the same content in the search engine index is also not the best optimization result.
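To get a feel for what "the same or slightly modified content" means in practice, here is a minimal sketch of one common textbook technique: comparing pages by overlapping word sequences (shingles) and Jaccard similarity. It is not a description of the filter any search engine actually uses; the threshold, the shingle size, the function names and the sample pages are all assumptions chosen for illustration.

```python
import re
from itertools import combinations


def shingles(text, size=3):
    """Return the set of overlapping word sequences of length `size`."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b):
    """Share of shingles two pages have in common (1.0 means identical sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.5, size=3):
    """Return (url1, url2, score) for every pair of pages at or above the threshold."""
    sets = {url: shingles(text, size) for url, text in pages.items()}
    found = []
    for first, second in combinations(sorted(sets), 2):
        score = jaccard(sets[first], sets[second])
        if score >= threshold:
            found.append((first, second, round(score, 2)))
    return found


# Invented page texts, for illustration only.
PAGES = {
    "/shoes": "Red leather running shoes with free shipping",
    "/shoes?sort=price": "Red leather running shoes with free shipping",
    "/about": "Our company was founded by two brothers",
}

if __name__ == "__main__":
    for pair in near_duplicates(PAGES):
        print(pair)
```

Comparing every pair of pages is fine for a small sample but grows quadratically, so on a large site you would run it on pages that already look suspicious, for example URLs that differ only in query parameters.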

Matt Cutts, in an interview with Eric Enge, confirmed the existence of a "crawl cap" (site visibility cap), which depends on the site's PageRank (not the toolbar PageRank), and described the problems that may arise from duplicate content:

The full interview with Matt Cutts contains the most complete information on duplicate content for any serious SEO. Although most of what you find there will not be news, it does no harm to have confirmed some of the guesses and decisions we face every day.

Links, especially from well-structured sites with relevant, high-quality pages, will improve not only the indexing of the site but also its visibility.

Determining how deeply the search engine "penetrates" the site, the "site visibility cap", and the amount of duplicate content, and then eliminating the duplicates, will improve both the visibility of the site in the eyes of the search engine and its indexation.

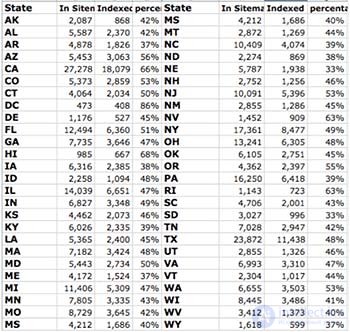

There are several great ways to find out:

The problem of duplicates is very complex for SEO and requires separate consideration. In short, it can be solved using rel="canonical" and a standard "View All" page on the pages that serve as the main version.
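As a rough illustration of the rel="canonical" approach, the sketch below maps several URL variants of a page to one canonical address and builds the corresponding link tag. Which query parameters can safely be ignored depends entirely on the site; the `IGNORED_PARAMS` set, the function names and the example URLs are hypothetical.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that change presentation or tracking, not content.
IGNORED_PARAMS = {"sort", "sessionid", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url):
    """Drop ignored parameters and the fragment, and order the remaining parameters."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", urlencode(kept), "")
    )


def canonical_link_tag(url):
    """Build the tag to place in the <head> of every variant of the page."""
    return '<link rel="canonical" href="%s">' % escape(canonical_url(url), quote=True)


if __name__ == "__main__":
    print(canonical_link_tag("http://Example.com/shoes?sort=price&color=red#reviews"))
```

Every variant of the page then carries the same tag, which tells the search engine which version should be kept in the index.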

Search results pages are another special case. There are many ways to manage them.

While you are diagnosing problems with site indexation, any weaknesses in the site's URL structure will surface. This is especially true of corporate-level sites, where you will encounter all kinds of unexpected results in a search engine index.

These problems arise when the site has many different types of users and administrators. Of course, we ourselves often make mistakes; SEO is not the solution to all problems.

Indexing is the main component of a site's visibility and ranking, and it is usually the main focus of SEOs. Clean up your site's index and enjoy efficient crawling and fast indexing.

Stay with us: the third and final article in this series is still to come.

This article considers ranking, the last of the steps a search engine performs on a web page. Before this, we considered the two previous stages: the discovery and indexing of a web page.

According to inexperienced SEOs, ranking is the simplest of the three parts of the search engine. However, it is not.

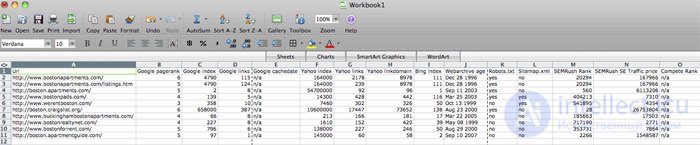

The whole essence of SEO comes down to search results. If your competitors' URLs suddenly rank higher than yours in the search results, you should analyze the situation and put the following into a table:

You need to collect data for each URL and for each domain. The data for both the URL and the domain as a whole are very important, but you should not consider every factor that could move a page up in the results. Consider the most important ones, the ones you have already dealt with.

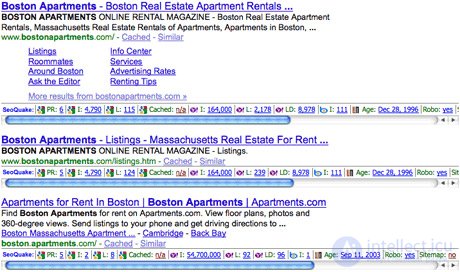

SEO Quake is a great tool for quickly obtaining basic SEO metrics right from search results. Moreover, all data can be easily exported to Excel. All the information you receive can be presented in various ways.

So, what are you left with after this analysis? The information obtained will clearly show you what needs to be done to overtake your competitors in the search results, and where your site stands among them in general.
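A comparison table of this kind is easy to build once the numbers are collected. The sketch below takes a set of metrics for your own site and for each competitor, finds the strongest competitor per metric, and writes the result as CSV so it can be opened in Excel. The metric names, domains and figures are invented for illustration, and the functions `metric_gaps` and `to_csv` are hypothetical helpers, not part of any SEO tool.

```python
import csv
import io

FIELDS = ["metric", "own", "best_competitor", "best_value", "gap"]


def metric_gaps(own, competitors, metrics):
    """For each metric, find the strongest competitor and the distance to it."""
    rows = []
    for metric in metrics:
        best_name, best = max(competitors.items(), key=lambda item: item[1][metric])
        rows.append({
            "metric": metric,
            "own": own[metric],
            "best_competitor": best_name,
            "best_value": best[metric],
            "gap": best[metric] - own[metric],  # positive means the competitor is ahead
        })
    return rows


def to_csv(rows):
    """Render the comparison rows as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Invented figures, for illustration only.
OWN = {"inbound_links": 120, "indexed_pages": 900}
COMPETITORS = {
    "rival-a.example": {"inbound_links": 300, "indexed_pages": 700},
    "rival-b.example": {"inbound_links": 150, "indexed_pages": 1500},
}

if __name__ == "__main__":
    print(to_csv(metric_gaps(OWN, COMPETITORS, ["inbound_links", "indexed_pages"])))
```

The largest positive gaps point to where the competitors are furthest ahead on the factors you chose to track.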

The task here is to "identify and conquer" the sites of competitors.

By systematically analyzing the three stages of a search engine (discovery, indexing, and ranking), competent SEOs compose their own methods of improving (“pumping”) their sites, which will then reward them with excellent rankings in search results.
