Lecture

We continue our series of publications on SEOM.info. This article discusses the factors to consider when developing a website promotion strategy, namely the link factors that search engines take into account.

It is 9:30 am, the working day has just begun, and you are already talking to a new client. New clients are all alike: inquisitive and interested in everything. They want to know how a search engine ranks pages, why the changes you propose will have a positive effect, and where you studied SEO, and of course they ask to see examples of your previous work.

The deeper you go into the details of working on a new project, the closer you get to the question of building link mass. You draw a table of the factors that affect how a search engine ranks a page, with links in first place by importance. The client asks why links are so important, and then asks a question that puzzles you:

How do I assess the impact of a specific link on my site's position in Google's search results?

In this article I will try to help you answer this question. Listed below are most of the key factors that influence how search engines calculate link weight.

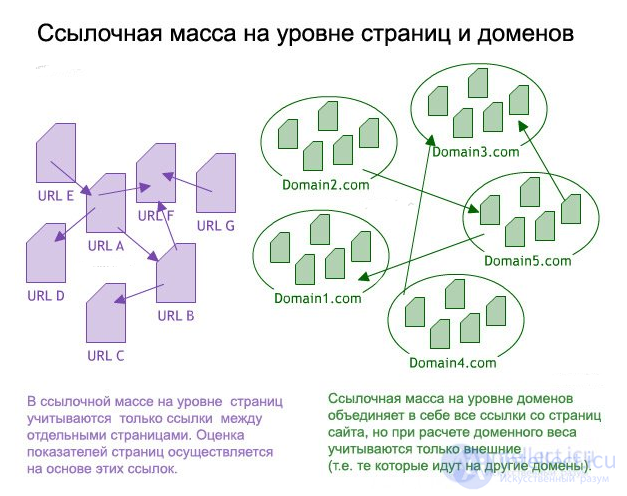

But first, look carefully at the diagram. In simplified form, it depicts a very important mechanism that you need to know in order to understand the material below.

As you have probably noticed, search engines rely more and more on domain-level metrics rather than on the metrics of a single page.

Have you ever wondered why we increasingly see new pages with very few links in the top positions of the search results?

This is because these pages sit on reputable domains with a large number of backlinks and strong internal metrics.

We surveyed SEO practitioners about which ranking factors they consider most important. Most respondents put Domain Rank (as we called this metric) at the top of the list, because they consider it one of the key elements of Google's ranking algorithm.

Domain Rank is most likely calculated from the domain's total link mass, as opposed to the link mass of the site's individual pages (from which PageRank is calculated for each page). Below I will discuss the factors that directly affect one of these metrics, or both at once.

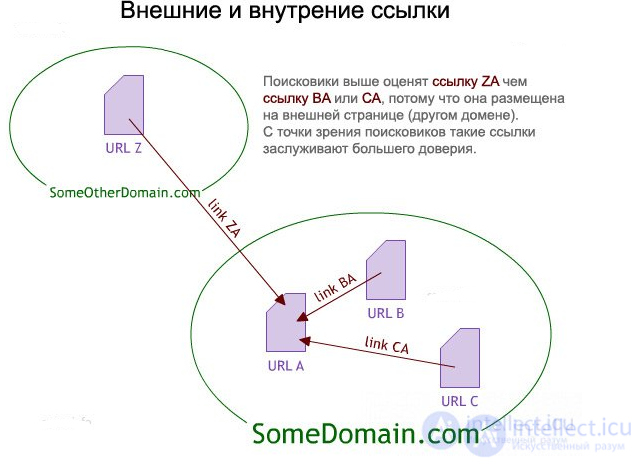

When search engines first started using links to assess the popularity, importance, and relevance of a document to a given search query, a well-known everyday principle was taken as the basis: what others say about you matters much more (and deserves more trust) than what you say about yourself.

Thus, while internal links (links from one page of a site to another page of the same site) do transfer some weight, external links (from other sites) carry significantly more.

This does not mean that you can neglect good internal linking between your pages (you should build a correct URL structure) or the quality of internal links (use good link text, avoid unnecessary links, etc.). I only mean that a page's SEO performance depends heavily on how often it is cited by external resources, i.e. on the quantity and quality of external links.

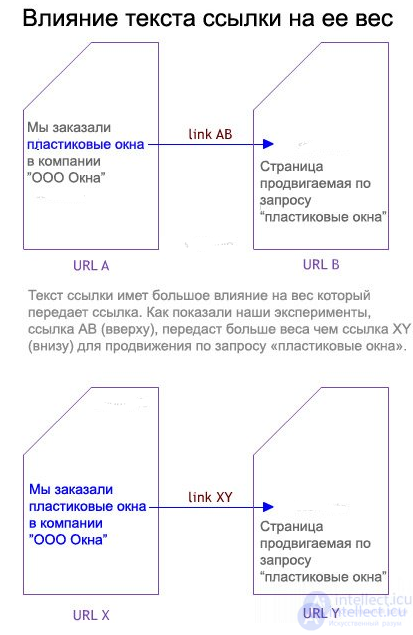

SEO specialists know well that link text is one of the most important parameters a search engine takes into account when ranking, so it is not surprising that link anchors receive special attention.

From our SEO experiments (as well as extensive practical experience), it became apparent that an “exact match” between the link text and the target search query has a significant advantage over an anchor that merely contains a set of keywords.

It should be noted that search engines do not always draw such a sharp distinction between link texts, especially for ordinary (non-branded) key phrases. Some promoters often exploit this to get better positions in the SERP.

Most search engines have their own metrics for measuring page weight. The most common are StaticRank (Microsoft), WebRank (Yahoo!), PageRank (Google), TCI (Yandex), and mozRank (Linkscape). Most of them are based on a Markov chain model. By analogy, links in PageRank are like votes: the page that receives more votes has more influence.

For a very basic understanding of the principles of PageRank, it is enough to know the following:

PageRank can be calculated for the link mass at the page level, assigning the value to a URL, and at the domain level, giving the so-called Domain Rank. The domain-level calculation takes the entire link weight of the site into account, and Domain Rank is computed from it.
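To make the "links as votes" analogy concrete, here is a minimal sketch of the textbook PageRank iteration. It is not the formula any search engine actually runs (those are not public). The damping factor of 0.85, the function name `pagerank`, and the three-page graph are assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank. `links` maps each page to the pages it links to."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start with equal weight everywhere
    for _ in range(iterations):
        # Every page receives a small baseline share regardless of its links.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its weight evenly among its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its weight over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical graph: "a" and "b" both vote for "c", and "c" votes for "a".
graph = {"a": ["c"], "b": ["c"], "c": ["a"]}
ranks = pagerank(graph)
for page, value in sorted(ranks.items(), key=lambda item: -item[1]):
    print(page, round(value, 3))
```

Page "c" collects two votes and ends up with the highest score, "a" benefits from the single vote of a strong page, and "b", which nobody links to, keeps only the baseline share. The same calculation could be run on a graph whose nodes are domains instead of URLs to get a domain-level figure.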

The basic principles of the Trust Rank system are described in our article "About domain metrics. Domain Rank. Domain Trust. Domain Juice.". The main idea of Trust Rank is that “good”, highly trusted pages sit in close link “proximity” to one another, whereas pages containing spam lie at a large link distance from this virtual center of trust.

Thus, PageRank-type metrics only transmit weight, while Trust Rank-type metrics help search engines separate spam from quality resources.

Although search engines do not publish the values of this metric, it is quite obvious that “link distance from the center of trust” is taken into account when ranking pages. There is also a Reverse Trust Rank, which tracks who links to already known spam sites; it is also part of the ranking algorithms.
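The idea of "link distance from the center of trust" can be sketched as a breadth-first walk from a hand-picked set of trusted pages. This is a simplification for illustration only: published TrustRank variants propagate a decaying trust score rather than counting hops, and the host names, the seed set, and the function name `link_distance_from_seeds` are all hypothetical.

```python
from collections import deque


def link_distance_from_seeds(links, trusted_seeds):
    """Return the minimum number of link hops from any trusted seed to each page."""
    distance = {seed: 0 for seed in trusted_seeds}
    queue = deque(trusted_seeds)
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in distance:  # first visit is the shortest path in BFS
                distance[target] = distance[page] + 1
                queue.append(target)
    return distance


# Hypothetical link graph: who links to whom.
graph = {
    "university.example": ["news.example"],
    "news.example": ["blog.example"],
    "spam.example": ["blog.example"],
}
distances = link_distance_from_seeds(graph, ["university.example"])
print(distances)
```

Pages that no trusted page reaches (here `spam.example`) do not appear in the result at all, which is the sketch's way of saying they are infinitely far from the trust center. A Reverse Trust Rank could be approximated the same way by walking the graph backwards from known spam pages.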

As with PageRank, Trust Rank (and Reverse Trust Rank) can be measured at the level of a page's link weight or of a domain's link weight. In Linkscape, we created mozTrust (mT) and Domain mozTrust (DmT), but we believe this is only the beginning and that a lot of work remains to improve the measurement of these important metrics.

The main idea is this: get links from trusted sites, not from potential spam resources.

The definition of Domain Rank (also known as the “level of authority of the domain”) is often debated among SEO experts, and there is still no exact, universal definition of the concept.

Most optimizers use this general term for a set of factors that characterize a domain: reputation, importance, and level of trust. Search engines calculate Domain Rank for each domain individually, primarily from link data (some experts say the age of the site is also taken into account).

Search engines take a site's “authority” into account when calculating link weights, so it should always be considered when planning link promotion. I will go further: domain metrics may matter more than the metrics of the individual page that links to your site.

We analyzed all the link metrics known to us and found that the one most strongly correlated with high positions in search results is the diversity of referring domains.

We suspect this is because this metric is the hardest to manipulate, so search engines use it as a reliable way to combat spam.

As the experience of many SEO experts shows, a variety of link sources has a strong positive effect on how search engines rank a site. By this logic, it is always better to have a given number of links from many different sites than the same number of links from just a few sites: the greater the variety of link sources, the better.

In turn, links from sites that themselves have a large link diversity (that is, many different sites link to them) will transfer more weight than links from sites with low link diversity.
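As a rough illustration of link diversity, the sketch below counts how many distinct hosts a list of backlinks comes from. The URLs, the function name `referring_domains`, and the simple ratio used as a "diversity" figure are assumptions made for the example, not a metric that any search engine is known to use.

```python
from urllib.parse import urlparse


def referring_domains(backlink_urls):
    """Collect the distinct host names that the backlinks come from."""
    hosts = set()
    for url in backlink_urls:
        host = urlparse(url).hostname
        if host:  # skip malformed URLs that have no host part
            hosts.add(host)
    return hosts


# Hypothetical backlink list: four links, two of them from the same blog.
backlinks = [
    "https://blog.example.com/post-1",
    "https://blog.example.com/post-2",
    "https://news.example.org/story",
    "https://forum.example.net/thread/7",
]
domains = referring_domains(backlinks)
diversity = len(domains) / len(backlinks)
print(len(domains), diversity)
```

The sketch compares full host names, so `blog.example.com` and `shop.example.com` would count as two sources. Grouping by registered domain would need a public suffix list, which is left out here.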

Search engines can use different methods to determine whether sites belong to the same person (or company), that is, whether different sites are related. These methods include the following (this is of course not a complete list):

If search engines find such a relationship within a network of sites, they reduce the weight passed by the links between them, or even stop those links from passing any weight at all.

Microsoft was the first search engine to publicly acknowledge that it was going to analyze the block structure of pages (in the Microsoft Research work on VIPS, VIsion-based Page Segmentation).

Since then, SEO experts have begun to notice the same kind of analysis in Google and Yahoo. According to our company's observations, for example, internal links in the page footer do not convey as much weight as links in the header or side panels.

Some experts believe that search engines do this to deal with paid external links, that is, to devalue the places where webmasters usually try to stuff them: side panels and footers.

All SEOs agree on one thing: the most valuable links come from the "content" part of the page. Such links both pass weight and bring traffic.

There are many ways in which search engines can determine the thematic proximity of two separate pages (or sites).

Previously, Google Labs used algorithms for this purpose that automatically classified sites by subject (medicine, real estate, sports, and many others) based on their URLs and the names of the site's categories and subcategories.

It is possible that they still use similar mechanisms to identify “related” sites within different topics. Depending on thematic proximity, links between sites can transmit more or less weight.

Personally, I do not always worry about thematic proximity. If there is an opportunity to get a link from a reputable general-interest resource such as NYTimes.com, why not? And if some highly specialized blog decides to link to you for whatever reason, why turn it down?

On the other hand, search engines use the “proximity” factor to combat spam. When a site with a good reputation suddenly begins to link to thematically distant, suspicious resources (pharma, online casinos, adult), search engines may treat this as spam.

Thematic proximity can tell search engines how the links on a page relate to each other. The content and context of a link play a significant role in how much weight it transfers from one page to another.

In contextual analysis of links, search engines try to determine, as far as a machine can, why the links are on the page.

If these are natural links, several patterns are possible: they may be integrated into the content, point to related pages, and follow standard HTML conventions and normal usage of words, phrasing, and language.

Analyzing all these signals, a crawler can quite accurately distinguish natural links from those present for other reasons: links placed surreptitiously (through hacking), attribution links (they convey some weight, but not much), and of course paid links.

A link's geographic data is tied to the site on which it is placed, but search engines, especially Google, pay more and more attention to whether the linking site geographically matches the site it links to (so-called geographic relevance). To determine a site's geographic location, search engines use various signals, including:

On the one hand, by acquiring links from sites in a particular region, you get the chance to rank well in local search for that region.

On the other hand, if everyone who links to you is located in a single region, it will be hard for you to rank in search for other regions, even if your site has all the necessary geographic signals (hosting IP address, country-code domain name, content language, etc.).

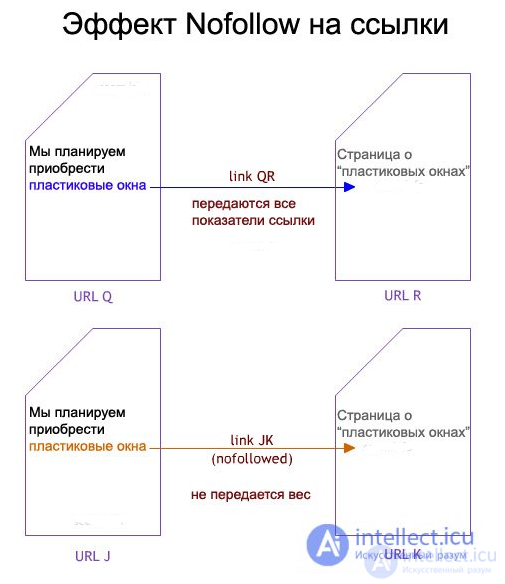

Sometimes it seems that a lot of time has passed since nofollow appeared, but in fact it was only in January 2005 that Google announced support for the new rel="nofollow" link attribute value.

It is very simple: when you mark a link with rel="nofollow", it can no longer transfer any weight to other pages. According to the Linkscape index, approximately 3% of all links on the web are currently marked nofollow, and more than half of them are internal links.

From time to time SEOs raise the same question: do all search engines always obey this rule? It is widely believed that Google can pass some link metrics through Wikipedia's external links, even though they are marked nofollow.
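To check which links on a page carry the nofollow marker, the standard library's `html.parser` is enough. This is a minimal sketch: the class name `LinkCollector` and the sample markup are made up for illustration, and the code only reads the `rel` attribute; it says nothing about how a given search engine treats the link.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Sort the <a href> links of a page into followed and nofollow lists."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel may hold several space-separated values, e.g. "nofollow noopener".
        rel_values = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_values:
            self.nofollow.append(href)
        else:
            self.followed.append(href)


# Hypothetical page fragment with one ordinary link and one nofollow link.
sample_html = (
    '<p><a href="https://example.com/a">A</a> '
    '<a rel="nofollow" href="https://example.com/b">B</a></p>'
)
collector = LinkCollector()
collector.feed(sample_html)
print(collector.followed, collector.nofollow)
```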

Links can be in various formats. The three most common:

Google recently announced that it has not only learned to follow links in JavaScript code but also passes link weight through them (this news upset many webmasters who hid paid or promotional links on their sites in JavaScript).

For many years now, search engines have treated the "alt" attribute of image links much like the anchor text of regular text links.

Be that as it may, not all links carry equal weight for search engines. We ran a series of experiments with the following result: ordinary direct HTML links with standard anchor text are the strongest, followed by image links with descriptive "alt" attributes, and finally JavaScript links (although Google has announced that it handles this type of link, its handling is not yet perfect, at least in our experience).

To everyone planning to build link mass for their site: search engines still do not handle “non-standard” links well, so first of all try to get direct HTML text links with the right anchor text. They will be the most effective.

When a page has external links, the weight passed by each link depends not only on the number of links on the page but also on the number of sites they point to.

As we noted above (point 3), a PageRank-type algorithm, regardless of the search engine, divides the page's entire link weight among its outgoing links.
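The arithmetic behind this division is simple enough to show directly. The sketch assumes an even split and a page weight of 1.0; both are illustrative assumptions, and the function name `weight_per_link` is hypothetical.

```python
def weight_per_link(page_weight, outgoing_links):
    """Split a page's link weight evenly among its outgoing links."""
    if outgoing_links <= 0:
        return 0.0  # a page without outgoing links passes nothing
    return page_weight / outgoing_links


# The same page weight spread over 4 links versus 20 links.
few_links = weight_per_link(1.0, 4)
many_links = weight_per_link(1.0, 20)
print(few_links, many_links)
```

Under this assumption, a link from a page with 4 outgoing links passes five times more weight than a link from an equally strong page with 20.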

In addition, search engines look at how many domains a page's links point to. If, for example, links from the page content go to just a few domains with high-quality, relevant content, they can transfer more weight than the same number of links scattered around the page and pointing to many domains of low-quality, off-topic sites.

Search engines also track who else a page links to besides you. A link from a page that also links to sites with a dubious reputation is always worth much less than a link from a page that links to other high-quality, authoritative resources.

Everyone who does SEO professionally has nightmares about the penalties search engines can impose, which range from losing the ability to pass link weight to a complete ban of the page or site from the search engine.

If a site or page loses the ability to pass link weight, selling links from it becomes pointless.

Keep in mind that search engines can apply many kinds of penalties (inability to rank for certain queries, a lowered PageRank, etc.). These are aimed primarily at those who systematically try to manipulate search results, but the algorithms are still imperfect, and innocent pages can also get caught by the filters.

Embedded content from various hosting services (video, documents, photos, file hosting, etc.) that contains backlinks to its home site is very popular on the web today.

I do not think search engines will stop counting these links entirely, but they will try to limit their weight and significance, even though these sites are not spam resources and are very useful to users.

At our company, we tend to believe that search engines compare a site's content with the links placed on it in order to determine the level of link diversity and the quality of the links.

When search engines see the same content with the same links on thousands of different sites, it is a signal for them to lower the link diversity score. This point is open to debate, but search engines do try to find and filter out links obtained this way in order to prevent further manipulation.

The time and date at which links appear come last on our list (but this in no way diminishes the importance of this factor).

As search engine crawlers travel the web, they collect and compare information about how new and old sites acquire links. Search engines need this information primarily to combat spam. In addition, the rate at which a site gains new links affects its level of authority, so new sites that gain a large number of links very quickly can arouse suspicion.

How search engines use all the factors listed above is a separate topic for discussion and debate, but all of them are definitely taken into account in ranking algorithms and affect positions in the SERP.

And one last piece of advice: avoid buying a large number of links at once, because a search engine may treat this as spam activity, which will significantly affect your site's positions.

Although our list contains many items, you should not treat it as dogma; use what you think is necessary. We will be glad if you add your own advice or share your experience with us.
