Lecture

A neurocomputer is an information-processing device based on the operating principles of natural neural systems. [1] These principles have been formalized, which made it possible to speak of a theory of artificial neural networks. The central problem of neurocomputing is to build real physical devices that not only simulate artificial neural networks on a conventional computer but also change the principles of computer operation, so that the devices can be said to work in accordance with the theory of artificial neural networks.

The terms neurocybernetics, neuroinformatics, and neurocomputer entered scientific use relatively recently, in the mid-1980s. However, electronic and biological brains have been compared throughout the history of computing. N. Wiener's famous book "Cybernetics" (1948) [2] bears the subtitle "Control and Communication in the Animal and the Machine."

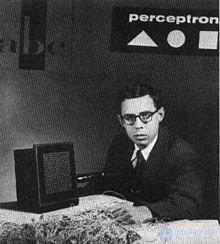

The first neurocomputers were Rosenblatt's perceptrons, Mark-1 (1958) and Tobermory (1961-1967) [3], as well as Adaline, developed by Widrow and Hoff (1960) on the basis of the delta rule (the Widrow formula) [4]. Today Adaline (an adaptive adder trained with the Widrow formula) is a standard component of many signal processing and communication systems. [5] The program "Cora", developed in 1961 under the leadership of M. M. Bongard [6], belongs to the same series of first neurocomputers.
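The delta rule mentioned above can be illustrated with a minimal sketch. It assumes a single linear unit trained on the logical AND function with bipolar (-1/+1) coding; the function names `train_adaline` and `predict`, the learning rate, and the number of epochs are illustrative choices, not taken from the original Adaline hardware.

```python
import random

def train_adaline(samples, targets, lr=0.05, epochs=200, seed=0):
    """Fit one linear unit with the delta (Widrow-Hoff) rule.

    lr, epochs and seed are illustrative assumptions.
    """
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in range(len(samples[0]))]
    b = 0.0
    for _ in range(epochs):
        for x, t in zip(samples, targets):
            # Linear output of the adaptive adder (no threshold during training).
            y = sum(wi * xi for wi, xi in zip(w, x)) + b
            err = t - y
            # Delta rule: move each weight in proportion to error times input.
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b

def predict(w, b, x):
    """Threshold the linear output to obtain a bipolar class label."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Logical AND in bipolar coding (an assumed toy task).
samples = [(-1, -1), (-1, 1), (1, -1), (1, 1)]
targets = [-1, -1, -1, 1]

w, b = train_adaline(samples, targets)
print([predict(w, b, x) for x in samples])
```

The weights are updated from the error of the linear output rather than the thresholded one, which is what distinguishes this rule from the perceptron learning rule.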

The monograph of Rosenblatt (1958) [7] played a major role in the development of neurocomputers.

The idea of neuro-bionics (the creation of technical means based on neural principles) began to be intensively implemented in the early 1980s. The impetus was the following contradiction: the elementary parts of computers had become comparable in size to the elementary “information converters” of the nervous system, and individual electronic elements had become millions of times faster than their biological counterparts, yet the efficiency with which living systems solve problems, especially problems of orientation and decision making in a natural environment, remained unattainably higher.

The theoretical advances of the 1980s in neural network theory (the Hopfield network, the Kohonen network, error backpropagation) gave a further impetus to the development of neurocomputers.

Unlike digital systems, which combine processor and storage units, neuroprocessors hold their memory distributed across the connections between very simple processors, which can often be described as formal neurons or blocks of similar formal neurons. The main burden of implementing specific functions therefore falls on the system architecture, whose details are in turn determined by the interneuron connections. The approach in which both data memory and algorithms are represented by a system of connections (and their weights) is called connectionism.
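As a rough illustration of memory stored in connections rather than in a separate storage unit, the following sketch uses a small Hopfield-style network with Hebbian weights. The helper names `train_hopfield` and `recall`, the 8-element pattern, and the number of update sweeps are assumptions made for this example only.

```python
def train_hopfield(patterns):
    """Build a symmetric weight matrix from bipolar patterns (Hebbian rule).

    The patterns live only in the weights; there is no separate memory.
    """
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights

def recall(weights, state, sweeps=5):
    """Update neurons one at a time until the state settles (fixed sweep count)."""
    s = list(state)
    for _ in range(sweeps):
        for i in range(len(s)):
            field = sum(weights[i][j] * s[j] for j in range(len(s)))
            s[i] = 1 if field >= 0 else -1
    return s

stored = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hopfield([stored])

# Corrupt two elements and let the network restore the stored pattern.
noisy = list(stored)
noisy[0] = -noisy[0]
noisy[3] = -noisy[3]
recovered = recall(weights, noisy)
print(recovered == stored)
```

Each "processor" here only sums its weighted inputs and thresholds the result; everything the network knows about the stored pattern is in the connection weights.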

Three main advantages of neurocomputers:

Developers of neurocomputers seek to combine the stability, speed, and parallelism of analog computers with the versatility of modern computers. [8]

A. Gorban [9] proposed the problem of efficient parallelism as the central problem addressed by all of neuroinformatics and neurocomputing. It has long been known that computer performance grows much more slowly than the number of processors. M. Minsky formulated a hypothesis: the performance of a parallel system grows (approximately) in proportion to the logarithm of the number of processors, which is much slower than linear growth (Minsky’s hypothesis).
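To give a feel for the gap between logarithmic and linear growth, here is a small numerical sketch. The base-2 logarithm and the proportionality constant of 1 are assumptions made for illustration; the hypothesis itself only states approximate proportionality to the logarithm.

```python
import math

def linear_speedup(n_processors: int) -> float:
    """Ideal case: performance grows in proportion to the processor count."""
    return float(n_processors)

def minsky_speedup(n_processors: int) -> float:
    """Minsky's hypothesis, assuming base 2 and a constant factor of 1."""
    return math.log2(n_processors)

for n in (2, 16, 1024):
    ideal = linear_speedup(n)
    hypothesized = minsky_speedup(n)
    # Fraction of the ideal performance that is actually obtained.
    efficiency = hypothesized / ideal
    print(f"{n:5d} processors: ideal {ideal:7.1f}, "
          f"logarithmic {hypothesized:4.1f}, efficiency {efficiency:.3f}")
```

Under these assumptions, a thousandfold increase in hardware yields roughly a tenfold increase in performance, which is why efficient parallelism is treated as a central problem.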

To overcome this limitation, the following approach is used: for various classes of problems, maximally parallel solution algorithms are built on some abstract architecture (paradigm) of fine-grained parallelism, and for concrete parallel computers, tools are created that implement the parallel processes of that abstract architecture. The result is an efficient toolkit for producing parallel programs.

Neuroinformatics supplies universal fine-grained parallel architectures for solving various classes of problems. For specific tasks, an abstract neural network implementation of the solution algorithm is built, which is then implemented on specific parallel computing devices. Thus, neural networks make it possible to effectively use parallelism.

The long-term efforts of many research groups have produced a large number of different “learning rules” and network architectures, their hardware implementations, and techniques for applying neural networks to practical problems.

These intellectual inventions [10] exist as a “zoo” of neural networks. Each network in the zoo has its own architecture and learning rule and solves a specific set of tasks. In the last decade, serious efforts have been made to standardize the structural elements and turn this “zoo” into a “technopark” [11]: each neural network from the zoo is implemented on an ideal universal neurocomputer with a given structure.

The basic rules for the allocation of functional components of an ideal neurocomputer (according to Mirkes):

The neurocomputer market is gradually taking shape. Various highly parallel neuro-accelerators [12] (co-processors) for different tasks are now widely available. There are few models of universal neurocomputers on the market, partly because most of them are built for special applications. Examples of neurocomputers include the Synapse neurocomputer (Siemens, Germany) [13] and the NeuroMatrix processor [14]. A specialized scientific and technical journal, “Neurocomputers: development, application”, is published [15], and annual conferences on neurocomputers are held [16]. From a technical point of view, today's neurocomputers are computing systems with parallel streams of identical commands and multiple data streams (MSIMD architecture). This is one of the main directions in the development of massively parallel computing systems.

An artificial neural network can be transferred from one (neuro)computer to another, just like a computer program. Moreover, specialized high-speed analog devices can be built on its basis. There are several levels of detachment of a neural network from a universal (neuro)computer [17]: from a network that learns on a universal device and draws on rich facilities for manipulating the problem book (the set of training examples), learning algorithms, and architecture modifications, down to complete detachment, where training and modification are no longer possible and only the operation of the trained network remains.

One way to prepare a neural network for transfer is to verbalize it: the trained network is minimized while its useful skills are retained. The description of a minimized network is more compact and often admits a clear interpretation.

A new direction is gradually maturing in neurocomputing, based on connecting biological neurons to electronic elements. By analogy with software ("soft product") and hardware ("hard product"), these developments have been called wetware ("wet product").

A technology already exists for connecting biological neurons to ultraminiature field-effect transistors by means of nanowires. [18] This work relies on modern nanotechnology; in particular, carbon nanotubes are used to create connections between neurons and electronic devices. [19]

Another definition of the term “wetware” is also common: the human component in human-computer systems.
