Lecture

Intelligent multi-agent systems (MAS) are one of the promising new areas of artificial intelligence. The field grew out of research on distributed computing systems, network technologies for problem solving, and parallel computing. Multi-agent technologies rest on the principle of autonomy: the individual parts of a program function jointly in a distributed system in which many interrelated processes run simultaneously. Such program parts are called agents.

Examples of tasks solved with the help of MAS are:

· Management of information flows and networks;

· Air traffic control;

· Search for information on the Internet;

· E-commerce, training;

· Collective multi-criteria management decision making, and others.

An agent is an autonomous artificial entity, usually a computer program, that exhibits active, motivated behavior and can interact with other entities in dynamic virtual environments. Each agent can receive messages, interpret their content and compose new messages, which are either written to a shared database or sent to other agents.

Intelligent agents have the following basic properties:

· Autonomy - the ability to function without intervention from its owner and to control its own actions and internal state;

· Activity - the ability to organize and implement actions;

· Sociability - interaction and communication with other agents;

· Reactivity - an adequate perception of the state of the environment and the reaction to its change;

· Goal-directedness - the presence of its own sources of motivation;

· Basic knowledge - knowledge about itself, other agents and the environment;

· Beliefs - the variable part of basic knowledge, which changes over time;

· Desires - the striving to reach certain states;

· Intentions - actions that are planned by the agent to fulfill its obligations and / or desires;

· Obligations - tasks that one agent performs at the request and / or instructions of other agents.

Sometimes other qualities are added to this list, including:

· Truthfulness - the inability to knowingly replace true information with false information;

· Benevolence - willingness to cooperate with other agents in the process of solving their own tasks, which usually implies the absence of conflicting goals set for agents;

· Altruism - the priority of common goals compared to personal ones;

· Mobility - the ability of the agent to migrate over the network in search of the necessary information.

Agent classification

Two main criteria are used to classify agent programs: 1) how well developed the agent's internal representation of the external world is; 2) the decision-making method.

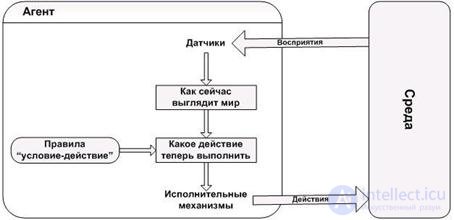

The simplest type of agent is the simple reflex agent. Such agents select actions based only on the current percept of the environment, ignoring the rest of the percept history. Simple reflex agents are extremely simple, but their intelligence is limited.

Figure 11.1 - Structure of a simple reflex agent.
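The idea of a simple reflex agent can be sketched in a few lines of Python. This is only an illustration: the thermostat setting, the percept format (a temperature reading) and the thresholds are assumptions and do not come from the lecture.

```python
# Hypothetical condition-action rules for a thermostat agent.
# The thresholds (18 and 24 degrees) are illustrative assumptions.
def simple_reflex_agent(percept: float) -> str:
    """Choose an action from the current percept only; no history is kept."""
    if percept < 18.0:
        return "heat"
    if percept > 24.0:
        return "cool"
    return "idle"


# The same percept always yields the same action, whatever came before.
actions = [simple_reflex_agent(t) for t in (15.0, 21.0, 27.0, 15.0)]
print(actions)
```

Because the function has no memory, the first and the last percept (both 15.0) necessarily produce the same action.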

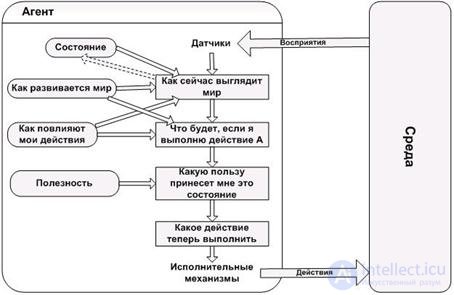

Under partial observability, the agent has to keep track of changes in the environment. This means that it must maintain a set of internal states whose changes depend on the percept history.

Figure 11.2 shows the structure of an agent that takes its internal state into account. The current percept is combined with the previous internal state; as a result, an action is performed and the internal state is updated.

Figure 11.2 - Agent acting with regard to its internal state.
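A minimal sketch of an agent with internal state, assuming a two-cell vacuum world (cells "A" and "B") in which the agent perceives only its own location and whether that cell is dirty. The world, the class name and the action names are illustrative assumptions.

```python
class StatefulVacuumAgent:
    """Agent for a hypothetical two-cell vacuum world (cells "A" and "B")."""

    def __init__(self):
        # Internal state: the cells the agent currently believes are clean.
        self.known_clean = set()

    def act(self, location, dirty):
        # Combine the current percept with the previous internal state.
        if dirty:
            self.known_clean.discard(location)
            return "suck"
        self.known_clean.add(location)
        if self.known_clean == {"A", "B"}:
            return "stop"  # possible only because earlier percepts are remembered
        return "right" if location == "A" else "left"


agent = StatefulVacuumAgent()
percepts = [("A", True), ("A", False), ("B", False)]
trace = [agent.act(location, dirty) for location, dirty in percepts]
print(trace)
```

The last percept, taken on its own, does not say that cell "A" is clean; the decision to stop depends on the state accumulated from earlier percepts.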

Knowledge of the current state of the environment is not always enough to make a decision. In that case the agent needs not only a description of the current state but also goal information, which describes desirable situations. The structure of a goal-based agent is shown in Figure 11.3. It tracks the state of the environment as well as the set of goals it is trying to achieve, and chooses an action aimed at achieving those goals.

Figure 11.3 - The structure of the agent acting on the basis of goals.
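A goal-based agent can be sketched as follows, assuming a hypothetical one-dimensional world in which the state is an integer position and the goal is a target position. The world model `predict` and the three actions are illustrative assumptions.

```python
# Hypothetical one-dimensional world: the state is an integer position.
ACTIONS = {"left": -1, "stay": 0, "right": 1}


def predict(state: int, action: str) -> int:
    """World model: the state that the action is expected to produce."""
    return state + ACTIONS[action]


def goal_based_agent(state: int, goal: int) -> str:
    """Pick the action whose predicted result is closest to the goal."""
    return min(ACTIONS, key=lambda a: abs(goal - predict(state, a)))


state, goal, plan = 0, 3, []
while state != goal:
    action = goal_based_agent(state, goal)
    plan.append(action)
    state = predict(state, action)
print(plan)
```

Changing the goal changes the behavior without rewriting any rules, which is the practical difference from a reflex agent.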

Often goal information alone is not enough to make a decision. First, there may be conflicting goals, only some of which can be achieved (for example, either speed or safety). Second, there may be several goals the agent can pursue, each of which can be achieved only with some probability of success. In such cases a utility function is introduced into the agent program; it maps a state to a real number that expresses the expected utility of that state. The agent chooses the action that leads to the highest expected utility.

Figure 11.4 - Structure of an agent based on model and utility.
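The choice by expected utility can be illustrated with the speed-versus-safety example. In this sketch every action has a list of possible outcomes, each with a probability and a utility; all action names and numbers are assumptions made up for the illustration.

```python
# Each action leads to outcomes given as (probability, utility) pairs.
# All numbers are illustrative assumptions.
OUTCOMES = {
    "drive_fast": [(0.7, 10.0), (0.3, -20.0)],   # quick, but risky
    "drive_safe": [(0.95, 6.0), (0.05, -20.0)],  # slower, rarely fails
}


def expected_utility(action: str) -> float:
    """Probability-weighted sum of the utilities of an action's outcomes."""
    return sum(p * u for p, u in OUTCOMES[action])


def utility_based_agent() -> str:
    """Choose the action with the highest expected utility."""
    return max(OUTCOMES, key=expected_utility)


best = utility_based_agent()
print(best, expected_utility(best))
```

With these numbers the expected utility is about 1.0 for fast driving and about 4.7 for safe driving, so the agent prefers the safe action even though the fast one has the better best case.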

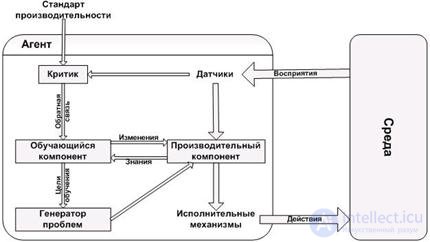

Learning agents form a special class. Learning has an important advantage: it allows the agent to operate in initially unknown environments and to become more competent than its initial knowledge alone would allow.

The structure of a learning agent has four conceptual components, as shown in Figure 11.5. The performance component may be any of the agent program structures considered above. The learning component uses feedback from the critic about how the agent is doing and determines how the performance component should be modified so that it does better in the future.

Figure 11.5 - The structure of the learning agent.

The critic tells the learning component how well the agent is doing with respect to a fixed performance standard, since the percepts themselves give no indication of whether the agent is succeeding. This standard should be regarded as entirely external to the agent, because the agent must not be able to modify it. For example, a chess program may receive a percept indicating that it has checkmated its opponent, but it needs a performance standard to establish that this is a good result, since the percept itself says nothing about that.

The last component of the learning agent is the problem generator. Its task is to suggest actions that should lead to new and informative experience. Left to itself, the performance component keeps performing the actions that are best given what it already knows. But if the agent is willing to experiment a little and, in the short term, perform actions that may not be quite optimal, it can discover better actions for the long term.
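The four components can be sketched as below. This is not a specific learning algorithm from the lecture: the running-average update, the rule "explore on every fifth step", the action names and the scores returned by the critic are all assumptions chosen to keep the example deterministic.

```python
class LearningAgent:
    """Sketch of the four components; names and numbers are assumptions."""

    def __init__(self, actions, explore_every=5):
        self.estimates = {a: 0.0 for a in actions}  # learned action values
        self.counts = {a: 0 for a in actions}       # how often each was tried
        self.explore_every = explore_every
        self.step = 0

    def performance_component(self):
        """Exploit: pick the action that currently looks best."""
        return max(self.estimates, key=self.estimates.get)

    def problem_generator(self):
        """Explore: suggest the least-tried action to gain new experience."""
        return min(self.counts, key=self.counts.get)

    def choose(self):
        self.step += 1
        if self.step % self.explore_every == 0:
            return self.problem_generator()
        return self.performance_component()

    def learning_component(self, action, feedback):
        """Update the running average of the critic's feedback for the action."""
        self.counts[action] += 1
        n = self.counts[action]
        self.estimates[action] += (feedback - self.estimates[action]) / n


def critic(action):
    """Fixed external performance standard; the agent cannot change it."""
    return {"a": 1.0, "b": 5.0, "c": 2.0}[action]


agent = LearningAgent(["a", "b", "c"])
for _ in range(30):
    action = agent.choose()
    agent.learning_component(action, critic(action))
print(agent.performance_component())
```

Without the problem generator the agent would keep choosing "a" forever, because "a" is the first action it tries and its score is positive. The occasional exploratory step is what lets it find that "b" scores higher.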

Interaction between agents is the main feature of MAS that distinguishes them from other intelligent systems. The main characteristics of any interaction are orientation, selectivity, intensity and dynamism. In the context of MAS, these concepts can be interpreted as follows:

• orientation - positive or negative; collaboration or competition; cooperation or confrontation; coordination or subordination, etc.;

• selectivity - interaction occurs between agents that in some way match each other and the task at hand. Agents may be linked in one respect and independent in another;

• intensity - interaction between agents is not simply present or absent but is characterized by a certain strength;

• dynamism - the presence, strength and orientation of interactions may change over time.

The basic types of interaction between agents include:

• cooperation (collaboration);

• competition (confrontation, conflict);

• compromise (taking into account the interests of other agents);

• conformism (rejection of their interests in favor of others);

• avoidance of interaction.

The interaction of agents is due to a number of reasons, the most important of which are the following.

Compatibility of goals (a common goal). This reason usually gives rise to interaction in the form of cooperation or collaboration. In this case it is necessary to check whether the interaction reduces the viability of individual agents. Incompatibility of goals or beliefs usually gives rise to conflicts, whose positive role is to stimulate development. The well-known predator-prey model is an example of interaction that combines both types, cooperation and confrontation, at once.

Shared resources. A resource is any means that agents use to achieve their goals. Limited resources that are used by many agents usually cause conflicts. One of the simplest and most effective ways to resolve such conflicts is the rule of the strong: a strong agent takes resources away from a weak one. Subtler ways of resolving conflicts rely on negotiations aimed at compromises that take the interests of all agents into account. Problems of dividing market shares, costs and profits of joint ventures are examples of interactions that arise from shared resources.

The need to draw on missing expertise. Each agent has only a limited set of the knowledge it needs to achieve its own and common goals, so it has to interact with other agents. Several situations are possible: a) the agent can complete the task on its own; b) the agent can manage without outside help, but cooperation would solve the problem more efficiently; c) the agent cannot solve the problem alone. Depending on the situation, agents choose the type of interaction and may show different degrees of interest in cooperation.

Mutual obligations. Obligations are one of the tools for bringing order to chaotic agent interactions. They make it possible to anticipate the behavior of other agents, predict the future and plan one's own actions. The following groups of obligations can be distinguished: a) an agent's obligations to other agents; b) an agent's obligations to the group; c) the group's obligations to an agent; d) an agent's obligations to itself. A formal representation of goals, obligations, desires and intentions, together with all other characteristics, forms the basis of the mental model of an intelligent agent, which ensures its motivated behavior in autonomous mode.

The listed reasons in various combinations can lead to different forms of interaction between agents, for example:

• simple cooperation, which involves the integration of the experience of individual agents (distribution of tasks, knowledge sharing, etc.) without special measures to coordinate their actions;

• coordinated cooperation, when agents are forced to coordinate their actions (sometimes involving a special agent-coordinator) in order to effectively use resources and their own experience;

• unproductive cooperation, when agents share resources or work on a common problem without exchanging experience and get in each other's way (like the swan, the crayfish and the pike in I. A. Krylov's fable).

In the process of modeling the collective work of agents, many problems arise:

• recognition of the need for cooperation;

• selection of suitable partners;

• the ability to take into account the interests of partners;

• organization of negotiations on joint actions;

• formation of joint action plans;

• synchronization of joint actions;

• decomposition of tasks and division of responsibilities;

• identification of conflicting goals;

• competition for shared resources;

• formation of rules of behavior in a team;

• learning behavior in a team, etc.

The distinctive feature of the collective behavior of agents is that their interaction while solving individual problems (or one common problem) produces a new quality of solution. The models for coordinating agent behavior rely on the following basic ideas:

1. Giving up the search for the best solution in favor of a “good” one, which means moving from strict optimization to the search for an acceptable compromise that implements some coordination principle.

2. The use of self-organization as a sustainable mechanism for the formation of collective behavior.

3. Application of randomization (random-probabilistic way of choosing solutions) in coordination mechanisms for conflict resolution.

4. Reflexive control, the essence of which is to make the subject consciously submit to outside influence, that is, to form in it desires and intentions that coincide with the requirements of the environment.

The best-known models of agent behavior coordination are: game-theoretic models, models of the collective behavior of automata, collective planning models, models based on BDI (Belief-Desire-Intention) architectures, and competition-based models.

Game-theoretic models. Game theory studies decision making under uncertainty and conflict. A conflict implies at least two participants, called players. The decisions that a player can choose among are called strategies. Equilibrium points of the game (optimal solutions) are states in which it is unprofitable for any player to change position. The concept of equilibrium has proved very useful in MAS theory, since the mechanism for finding equilibrium situations can serve as a means of self-organizing the collective behavior of agents. This interpretation leads to an approach in which the required properties of collective agent behavior are ensured by designing the rules of the game. In addition, game theory is being developed within MAS research in an attempt to build efficient, stable, fully distributed negotiation protocols for coordinating the collective behavior of agents.
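The equilibrium condition (no player gains by changing its decision alone) can be checked directly for a small game. The sketch below enumerates the pure-strategy equilibrium points of a two-player game; the payoff table uses the usual prisoner's dilemma values purely as an illustration and is not taken from the lecture.

```python
from itertools import product

# Payoff table of a two-player game: (row strategy, column strategy) ->
# (row player's payoff, column player's payoff). Illustrative values only.
PAYOFFS = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"): (-3, 0),
    ("defect", "cooperate"): (0, -3),
    ("defect", "defect"): (-2, -2),
}
STRATEGIES = ("cooperate", "defect")


def is_equilibrium(row: str, col: str) -> bool:
    """True if neither player gains by changing strategy unilaterally."""
    row_pay, col_pay = PAYOFFS[(row, col)]
    row_ok = all(PAYOFFS[(r, col)][0] <= row_pay for r in STRATEGIES)
    col_ok = all(PAYOFFS[(row, c)][1] <= col_pay for c in STRATEGIES)
    return row_ok and col_ok


equilibria = [
    pair for pair in product(STRATEGIES, repeat=2) if is_equilibrium(*pair)
]
print(equilibria)
```

In this game the only equilibrium point is mutual defection, even though mutual cooperation pays both players more. This is why the design of the rules of the game matters for the collective behavior that results.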

Models of collective behavior of automata. They are based on the ideas of randomization, self-organization and complete distribution. Models of this type are suitable for building negotiation protocols in tasks that are characterized by a large number of very simple interactions with unknown characteristics.

Collective planning models. Planning can be centralized, partially centralized or distributed. In the latter case, the agents themselves decide on the choice of their actions in the process of coordinating private plans, which raises questions about rational decentralization, about the possibility of changing goals in the event of conflicts, as well as problems of computational complexity.

Models based on BDI architectures. Models of this class use axiomatic methods of game theory and the logical paradigm of artificial intelligence. The emphasis is on describing concepts such as beliefs, desires and intentions. Coordination of agent behavior is achieved by reconciling the results of inference in the knowledge bases of individual agents, obtained for the current state of the environment in which the agents operate. Inference is performed while the agents are running, which leads to highly complex models, computational difficulties and problems with the axiomatic description of nontrivial situations, for example, when an agent must choose between solving its own task and fulfilling obligations to partners.

Competition-based models. Models of this class use the concept of an auction as the mechanism for coordinating agent behavior. The auction mechanism assumes that “utility” can be transferred explicitly from one agent to another or to the auctioneer, and this utility usually takes the form of money.

Auctions can be divided into open and closed. In an open auction, the bids are announced to all participants. In a closed auction, only the auctioneer knows the bids. Open auctions differ in how they are conducted. In an English auction, a starting price is usually set, which participants can raise during bidding. The winner is the one who offers the highest price. A Dutch auction starts at a high price, which is gradually lowered. The winner is the first participant to accept the current price. Closed auctions are divided into first-price and second-price auctions. In a first-price auction, the winner is the participant who offered the highest price, which is known only to the auctioneer. In a second-price auction, the winner is determined in the same way but pays the second-highest price rather than its own bid.
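The difference between the two closed auction types is only in the price the winner pays, which a short sketch makes concrete. The function name, agent names and bid values are illustrative assumptions, and the sketch assumes that all bids are distinct.

```python
def sealed_bid_auction(bids: dict, second_price: bool = False):
    """Return (winner, price paid) for a closed auction with distinct bids."""
    ranked = sorted(bids, key=bids.get, reverse=True)
    winner = ranked[0]
    if second_price and len(ranked) > 1:
        price = bids[ranked[1]]  # winner pays the second-highest bid
    else:
        price = bids[winner]     # winner pays its own bid
    return winner, price


bids = {"agent_1": 120, "agent_2": 150, "agent_3": 135}
first = sealed_bid_auction(bids)
second = sealed_bid_auction(bids, second_price=True)
print(first, second)
```

The same agent wins in both cases, but it pays 150 in the first-price auction and 135 in the second-price auction.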

The auction mechanism itself does not affect the decision-making methods of the participants. Decisions can be made on the basis of some reasoning model, which may use the various types of knowledge available to the agents and various ways of processing it.

An auction must always terminate. To guarantee this, the auction strategy must include means of resolving possible conflicts (for example, when there are several winners). One of the simplest ways to resolve conflicts is randomization, in which a random selection mechanism is applied.
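Randomized conflict resolution can be sketched as a random draw among the participants who share the highest bid. The function name and the bids are illustrative assumptions; the generator is seeded only so that the sketch is repeatable.

```python
import random


def pick_winner(bids: dict, rng: random.Random) -> str:
    """Highest bid wins; ties are resolved by a random draw."""
    top = max(bids.values())
    tied = sorted(name for name, bid in bids.items() if bid == top)
    return rng.choice(tied)


# Two agents share the top bid, so the winner is drawn at random between them.
winner = pick_winner({"a1": 100, "a2": 100, "a3": 90}, random.Random(0))
print(winner)
```

Whatever the draw, exactly one winner is returned, so the auction always ends.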

Let us consider practical examples of organizing interaction in multi-agent systems using different mechanisms for coordinating behavior.

Electronic store. Consider a typical e-commerce task that involves seller agents and buyer agents. Trading takes place in an electronic store, which is a program hosted on a server. Its main purpose is to organize the interaction of agents whose interests coincide. The agents act on behalf of their individual users. Seller agents try to sell their goods at the highest possible price, and buyer agents try to buy the goods they need at the lowest price. Both kinds of agents act autonomously and have no cooperative goals. The electronic store registers the appearance and disappearance of agents and arranges contacts between them, making them “visible” to each other.

Figure 11.6 - Diagram of the electronic store.

The behavior of the seller agent is characterized by the following parameters:

• the deadline by which the goods must be sold;

• the desired price at which the user wants to sell the goods;

• the lowest acceptable price, below which the goods are not sold;

• the function by which the price decreases over time (linear, quadratic, etc.);

• description of the goods sold.

The buyer agent has “symmetric” parameters:

• the deadline for purchasing the goods;

• the desired purchase price;

• the highest acceptable price;

• the function by which the price increases over time;

• description of the purchased goods.
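The price parameters of both agent types can be turned into simple price functions of time. The sketch below is one possible reading: the seller's price moves from the desired price down to the lowest acceptable price by the deadline, and the buyer's price moves up symmetrically. The function names, the `power` parameter and the numbers are assumptions.

```python
def seller_price(t, deadline, desired, lowest, power=1):
    """Asking price at time t: falls from `desired` to `lowest` by the deadline.

    power=1 gives a linear decrease, power=2 a quadratic one.
    """
    fraction = min(max(t / deadline, 0.0), 1.0)
    return desired - (desired - lowest) * fraction ** power


def buyer_price(t, deadline, desired, highest, power=1):
    """Offered price at time t: rises from `desired` to `highest` by the deadline."""
    fraction = min(max(t / deadline, 0.0), 1.0)
    return desired + (highest - desired) * fraction ** power


linear_ask = seller_price(5, 10, desired=100.0, lowest=60.0)
quadratic_ask = seller_price(5, 10, desired=100.0, lowest=60.0, power=2)
offer = buyer_price(5, 10, desired=50.0, highest=90.0)
print(linear_ask, quadratic_ask, offer)
```

Halfway to the deadline the linear seller asks 80.0 while the quadratic seller still asks 90.0, so the quadratic function holds the price longer and concedes faster near the deadline.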

Trading follows the scheme of a closed first-price auction. The behavior of the agents is described by a simple model that uses no knowledge or reasoning. Having received from the electronic store information about potential buyers of its goods, the seller agent polls all of them in turn in order to decide whether a deal is possible. The deal is made with the first buyer agent that is willing to pay the asking price. The seller cannot contact any buyer a second time until it has polled all potential buyers. At each contact the seller agent negotiates, either offering the initial price or lowering it. The buyer agent acts in a similar way: it looks for sellers of the goods it needs and offers them its purchase price, which it may raise during the negotiations. Any deal is completed only if the agent's user approves it.

This negotiation scheme is the simplest case of interaction between autonomous agents that act reactively. Nevertheless, the resulting behavior of the system is quite realistic.
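The negotiation scheme can be sketched as a loop over rounds in which the seller polls every buyer once per round. This is a simplified illustration: prices change linearly, one round equals one time step, user approval of the deal is left out, and all names and numbers are assumptions.

```python
def linear(t, deadline, start, limit):
    """Price moving linearly from `start` to `limit` as t goes from 0 to deadline."""
    t = min(t, deadline)
    return start + (limit - start) * t / deadline


def negotiate(seller, buyers, max_rounds):
    """Each round the seller polls every buyer once, in order.

    Returns (buyer name, price, round) for the first buyer whose current
    offer reaches the seller's current asking price, or None if no deal.
    """
    for t in range(max_rounds + 1):
        ask = linear(t, seller["deadline"], seller["desired"], seller["lowest"])
        for name, b in buyers.items():
            offer = linear(t, b["deadline"], b["desired"], b["highest"])
            if offer >= ask:
                return name, ask, t
    return None


seller = {"deadline": 10, "desired": 100.0, "lowest": 60.0}
buyers = {
    "buyer_1": {"deadline": 10, "desired": 40.0, "highest": 70.0},
    "buyer_2": {"deadline": 10, "desired": 50.0, "highest": 90.0},
}
deal = negotiate(seller, buyers, max_rounds=10)
print(deal)
```

With these numbers the asking price falls by 4 per round and buyer_2's offer rises by 4 per round, so the deal is struck with buyer_2 in round 7 at a price of 72.0. Neither agent reasons about the other; the outcome follows from the price functions alone.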

Virtual enterprise. The creation of virtual enterprises is one of the modern lines of business, largely stimulated by the rapid growth of information resources and services offered on the Internet. In addition, the emergence of virtual enterprises is encouraged by the shortening life cycle of manufactured products and their growing complexity, since this creates a need to combine production, technological and intellectual resources quickly. Another important reason is tougher competition in product markets, which pushes enterprises to join forces in order to survive.

A virtual enterprise can be defined as a cooperation of legally independent enterprises, organizations and individuals that produce goods or services within a common business process. To the outside world a virtual enterprise appears as a single organization that uses management and administration methods based on information and telecommunication technologies. The purpose of creating a virtual enterprise is to pool production, technological, intellectual and investment resources in order to bring new goods and services to market.

Since each real enterprise within the virtual one performs only part of the work in the overall process chain, two main tasks are solved when it is created. The first is the decomposition of the overall business process into components (subprocesses). The second is the choice of a rational set of real partner enterprises that will carry out the process. The first task is solved with methods of systems analysis, while multi-agent technologies can be applied to the second.

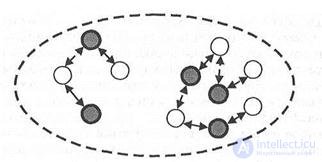

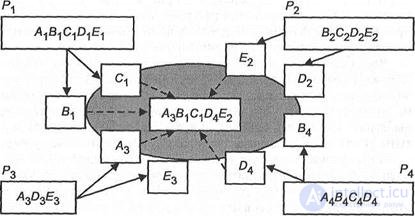

In operations research, the problem of optimally distributing a set of jobs (subprocesses) among a set of workers (real enterprises) is formulated as the assignment problem [5]. Its solution begins with forming the set of subprocesses and the set of potential participating enterprises. Then the possible mappings from the set of participants to the set of subprocesses are constructed, and the most acceptable mapping is selected, which corresponds to specific assignments of enterprises to business processes. The auction mechanism can be used for this. Figure 11.7 shows the scheme of an auction for creating a virtual enterprise, in which business processes A, B, C, D, E are identified and four enterprises, P1, P2, P5, P4, compete to carry them out. Each enterprise is represented by an intelligent agent, and one of them (Px) acts as the initiator (auctioneer).

Figure 11.7 - Scheme for creating a virtual enterprise.
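For a very small instance, the assignment problem can be solved by trying every one-to-one mapping of enterprises to subprocesses. The sketch below does exactly that; it is a brute-force illustration, not the method used in the lecture, and the cost matrix is a made-up assumption.

```python
from itertools import permutations

# Hypothetical cost of enterprise i carrying out subprocess j
# (rows: enterprises, columns: subprocesses).
COST = [
    [9, 2, 7],
    [6, 4, 3],
    [5, 8, 1],
]


def best_assignment(cost):
    """Try every one-to-one mapping and keep the cheapest (brute force)."""
    n = len(cost)
    best_perm, best_total = None, float("inf")
    for perm in permutations(range(n)):
        total = sum(cost[i][perm[i]] for i in range(n))
        if total < best_total:
            best_perm, best_total = perm, total
    return best_perm, best_total


assignment, total = best_assignment(COST)
print(assignment, total)
```

Here `assignment[i]` is the subprocess given to enterprise `i`. The number of mappings grows factorially with the number of enterprises, which is one reason to look at distributed mechanisms such as auctions for larger cases.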

Before the auction begins, the auctioneer (manager) builds a database and a knowledge base about the auction participants. It then puts individual business processes up for sale; each is described by a starting price and by requirements on a given set of indicators. Each bidder submits its proposals for the parameters it can provide, together with its price. Having collected and processed these proposals, the auctioneer uses some reasoning model to rank the potential bidders, taking into account its own information about them. After that it either decides on the assignments, or rejects them and puts forward new proposals.
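One round of such an auction for a single business process might look as follows. The lecture does not specify the reasoning model, so everything here is an assumption: the single "quality" indicator, the reading of a bid price as the cost the bidder would charge (lower is better), the trust scores that stand in for the auctioneer's own information, and the bidder names E1 to E3.

```python
def run_tender(process, bids, trust):
    """Rank the bids for one business process and pick a contractor.

    A bid qualifies if its price does not exceed the starting price and it
    meets the quality requirement. Qualified bidders are ranked by price
    divided by the auctioneer's trust score (lower is better).
    Returns the chosen bidder, or None if every bid is rejected.
    """
    qualified = [
        b for b in bids
        if b["price"] <= process["start_price"]
        and b["quality"] >= process["min_quality"]
    ]
    if not qualified:
        return None  # the auctioneer would now put forward a new proposal
    ranked = sorted(qualified, key=lambda b: b["price"] / trust[b["bidder"]])
    return ranked[0]["bidder"]


process_a = {"name": "A", "start_price": 100, "min_quality": 0.8}
bids = [
    {"bidder": "E1", "price": 90, "quality": 0.85},
    {"bidder": "E2", "price": 80, "quality": 0.70},  # fails the quality requirement
    {"bidder": "E3", "price": 95, "quality": 0.90},
]
trust = {"E1": 0.6, "E2": 0.9, "E3": 0.9}  # auctioneer's own information
chosen = run_tender(process_a, bids, trust)
print(chosen)
```

E2 offers the lowest price but is filtered out by the requirement, and E1 is cheaper than E3 but less trusted, so E3 is chosen. A result of `None` corresponds to the case in which the auctioneer rejects all bids and issues new proposals.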

It should be noted that the task of creating a virtual enterprise can be classed among the problems of structural synthesis of complex systems that satisfy given requirements.
