Lecture

Test-driven development (TDD) is a software development technique based on repeating very short development cycles: first a test is written that covers the desired change, then code is written that makes the test pass, and finally the new code is refactored to meet the relevant standards. Kent Beck, who is considered the inventor of the technique, claimed in 2003 that test-driven development encourages simple design and inspires confidence [1].

In 1999, when it emerged, test-driven development was closely tied to the test-first concept used in extreme programming [2], but it later became an independent methodology [3].

A test is a procedure that either confirms or refutes that the code works. When a programmer checks by hand that the code they have written works, they are performing manual testing.

Test-driven development requires the developer to create automated unit tests that define the requirements for the code just before writing the code itself. A test contains assertions: conditions that either hold or do not. When they hold, the test is said to pass. A passing test confirms the behavior the programmer intended. Developers often use testing frameworks to create test suites and to automate running them. In practice, unit tests cover critical and non-trivial sections of code. This may be code that changes frequently, code that a large amount of other code depends on, or code with many dependencies.

The development environment must respond quickly to small code modifications. The program architecture should be built from highly cohesive, loosely coupled components, which makes the code easier to test.

TDD is not only about validation; it also affects the design of the program. Starting from the tests, developers can quickly picture what functionality the user needs. As a result, the details of the interface appear long before the final implementation of the solution.

Of course, the same coding standards apply to the tests as to the main code.
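As a minimal sketch of what such an automated unit test can look like, the example below uses Python's standard `unittest` framework. The function `is_leap_year` and the test names are hypothetical and chosen only for illustration; in TDD the test class would be written first and the function afterwards.

```python
import unittest


def is_leap_year(year: int) -> bool:
    """Hypothetical code under test: the Gregorian leap-year rule."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


class LeapYearTest(unittest.TestCase):
    """Each method is one test; each assert* call is one checked condition."""

    def test_year_divisible_by_four_is_leap(self):
        self.assertTrue(is_leap_year(2024))

    def test_century_is_leap_only_if_divisible_by_400(self):
        self.assertFalse(is_leap_year(1900))
        self.assertTrue(is_leap_year(2000))
```

Saved in a file, the suite can be run with `python -m unittest`, which reports each test as passed or failed.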

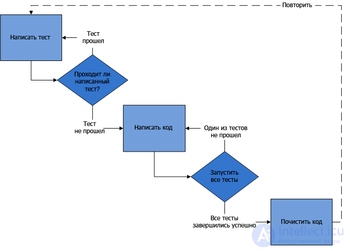

The sequence of actions is based on Kent Beck's book Test Driven Development: By Example [1].

In test-driven development, adding each new feature to the program begins with writing a test. Inevitably, this test will fail, because the corresponding code has not yet been written. (If the new test passes, either the proposed "new" functionality already exists or the test is flawed.) To write a test, the developer must clearly understand the requirements for the new feature. To this end, possible use cases and user stories are considered. New requirements may also entail changes to existing tests. This distinguishes test-driven development from techniques in which tests are written after the code: it forces the developer to focus on the requirements before writing the code, a subtle but important difference.

At this stage, verify that the newly written tests fail. This stage also checks the tests themselves: a test that always passes is useless. New tests should fail for the expected reasons. This increases confidence (although it does not guarantee) that the test actually checks what it was designed to check.

At this stage, new code is written so that the test passes. This code does not have to be perfect. It is acceptable for it to pass the test in some inelegant way, because the subsequent steps will improve and polish it.

It is important to write only the code needed to pass the test. Do not add extra, and therefore untested, functionality.

If all tests pass, the programmer can be sure that the code meets all the tested requirements. After that, you can proceed to the final stage of the cycle.

Once the required functionality has been achieved, the code can be cleaned up at this stage. Refactoring is the process of changing the internal structure of a program without affecting its external behavior, with the goal of making the program easier to understand, eliminating code duplication, and making future changes easier.

The described cycle is repeated, implementing more and more functionality. The steps should be small, from 1 to 10 changes between test runs. If the new code does not satisfy the new tests, or the old tests stop passing, the programmer should return to debugging. When using third-party libraries, the changes should not be so small that they literally test the third-party library itself [3] rather than the code that uses it, unless there is a suspicion that the library contains errors.
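One pass through the cycle might look like the following sketch. All names here (`word_count`, `passes`, and so on) are hypothetical. To keep the example self-contained, the three states of the code are shown as three separate functions, and a small helper runs the same tests against each of them; in a real project there would be one function that changes over time.

```python
import unittest


def make_suite(impl):
    """Build the test suite for a given implementation of word counting."""

    class WordCountTest(unittest.TestCase):
        def test_counts_words_separated_by_spaces(self):
            self.assertEqual(impl("red green refactor"), 3)

        def test_empty_string_has_no_words(self):
            self.assertEqual(impl(""), 0)

    return unittest.defaultTestLoader.loadTestsFromTestCase(WordCountTest)


def passes(impl) -> bool:
    """Run the suite and report whether every test passed."""
    result = unittest.TestResult()
    make_suite(impl).run(result)
    return result.wasSuccessful()


# Step 1 (tests fail): the feature is not implemented yet.
def word_count_stub(text):
    raise NotImplementedError


# Step 2 (tests pass): the first working version, deliberately inelegant.
def word_count_first_try(text):
    count = 0
    in_word = False
    for ch in text:
        if ch != " " and not in_word:
            count += 1
            in_word = True
        elif ch == " ":
            in_word = False
    return count


# Step 3 (refactoring): same behavior for these tests, simpler structure.
def word_count(text):
    return len(text.split())
```

Calling `passes(word_count_stub)` returns `False`, while `passes(word_count_first_try)` and `passes(word_count)` both return `True`, so the refactoring in step 3 is checked by the same tests that drove step 2.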

Test-driven development is closely related to principles such as "keep it simple, stupid" (KISS) and "you aren't gonna need it" (YAGNI). The design can be cleaner and clearer when only the code needed to pass the test is written [1]. Kent Beck also proposes the principle "fake it till you make it". Tests should be written before the functionality they test. This is believed to have two advantages. It helps ensure that the application is testable, since the developer has to think from the very beginning about how the application will be tested. It also helps ensure that the tests cover all the functionality. When the functionality is written before the tests, developers and organizations tend to move on to the next feature without testing the existing one.

Checking that a newly written test fails helps make sure that the test actually checks something. Only after that check should implementation of the new functionality begin. This technique, known as "red/green/refactor", is called the mantra of test-driven development. Red stands for failing tests and green for passing ones.

Established test-driven development practices led to the creation of acceptance test-driven development (ATDD), in which the criteria described by the customer are automated as acceptance tests that are then used in the usual unit test-driven development (UTDD) process [4]. This process ensures that the application meets the stated requirements. With acceptance test-driven development, the team focuses on a clear goal: satisfying the acceptance tests that reflect the relevant user requirements.

Acceptance (functional) tests, also called customer tests, check the application's functionality for compliance with the customer's requirements. Acceptance tests are run on the customer's side. This helps the customer be sure of receiving all the necessary functionality.
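The "fake it till you make it" principle mentioned above can be illustrated with a deliberately tiny sketch. The functions `plus_fake`, `plus`, and the two test helpers are hypothetical names: the first test is satisfied by returning a constant, and a second test with different inputs then forces the real implementation.

```python
def first_test(impl) -> bool:
    """The first test written for the new feature."""
    return impl(2, 3) == 5


def second_test(impl) -> bool:
    """A second test with other inputs; a constant can no longer satisfy both."""
    return impl(10, 4) == 14


def plus_fake(a: int, b: int) -> int:
    # "Fake it": return exactly the value the first test expects.
    return 5


def plus(a: int, b: int) -> int:
    # "Make it": the general implementation that the second test demands.
    return a + b
```

Here `plus_fake` passes `first_test` but fails `second_test`, while `plus` passes both. The fake is only a stepping stone that gets the test suite passing quickly before the general code is written.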

A 2005 study found that using test-driven development implies writing more tests; in turn, programmers who write more tests tend to be more productive. [5] Hypotheses linking the quality of the code with TDD were inconclusive. [6]

Programmers using TDD on new projects note that they rarely feel the need to use a debugger. If some of the tests suddenly stop passing, rolling back to the latest version that passes all the tests may be more productive than debugging [7].

Test-driven development offers more than just validation; it also affects the design of the program. By focusing on the tests first, it is easier to picture what functionality the user needs. Thus, the developer thinks through the details of the interface before implementing it. Tests push the code to be more testable: for example, they encourage abandoning global variables and singletons and making classes less coupled and easier to use. Tightly coupled code, or code that requires complex initialization, is much harder to test. Unit testing encourages clear and small interfaces. Each class plays a specific, usually small, role. As a result, the dependencies between classes decrease and cohesion increases. Design by contract complements testing by stating the necessary requirements as assertions.

Although test-driven development requires writing more code, the total development time is usually shorter. Tests protect against errors, so the time spent on debugging is reduced many times over [8]. A large number of tests helps reduce the number of errors in the code. Eliminating defects at an earlier stage of development prevents chronic and costly errors that would lead to long and tedious debugging later.

Tests make it possible to refactor code without the risk of breaking it. When changes are made to well-tested code, the risk of introducing new errors is much lower. If new functionality causes errors, the tests, provided they exist, will show it immediately. In code without tests, an error may be discovered only much later, when the code is far harder to work with. Well-tested code is easy to refactor. Knowing that changes will not break existing functionality gives developers confidence and makes their work more efficient. If the existing code is well covered by tests, developers feel much freer to introduce architectural decisions intended to improve the design of the code.

Test-driven development promotes more modular, flexible, and extensible code. This is because the methodology requires the developer to think of the program as a set of small modules that are written and tested independently and only then connected together. This leads to smaller, more specialized classes, looser coupling, and cleaner interfaces. Using mock objects also contributes to modular code, since it requires a simple mechanism for switching between mock and regular classes.

Since only the code needed to pass a test is written, the automated tests cover all execution paths. For example, before adding a new conditional statement, the developer must write a test that motivates adding it. As a result, the tests produced by test-driven development are fairly complete: they detect any unintended change in the behavior of the code.

Tests can be used as documentation. Good code explains how it works better than any documentation. Documentation and comments in the code may become outdated, which can confuse developers who are studying the code. And since documentation, unlike tests, cannot report that it is outdated, situations in which the documentation does not match reality are not uncommon.
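The switch between a mock and a regular class is often achieved by passing the collaborator in from outside. The sketch below uses `unittest.mock.Mock` from the Python standard library; `SignupService`, its `register` method, and the `mailer.send` call are hypothetical names invented for this example.

```python
from unittest.mock import Mock


class SignupService:
    """Hypothetical class under test; the mailer is injected, not created here."""

    def __init__(self, mailer):
        self._mailer = mailer

    def register(self, email: str) -> bool:
        if "@" not in email:
            return False
        self._mailer.send(email, "Welcome!")
        return True


def test_register_sends_welcome_mail():
    mailer = Mock()  # stands in for a real mail client
    service = SignupService(mailer)
    assert service.register("ann@example.com") is True
    mailer.send.assert_called_once_with("ann@example.com", "Welcome!")


def test_invalid_address_sends_nothing():
    mailer = Mock()
    assert SignupService(mailer).register("not-an-address") is False
    mailer.send.assert_not_called()
```

Because `SignupService` never constructs its own mailer, production code can pass a real client while the tests pass a mock, with no change to the class itself.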

A test suite must have access to the code under test. On the other hand, the principles of encapsulation and data hiding should not be violated. Therefore, unit tests are usually written in the same module or project as the code under test.

Test code may not have access to private fields and methods, so unit testing may require additional work. In Java, a developer can use reflection to access fields marked as private [10]. Unit tests can also be implemented in inner classes so that they have access to the members of the outer class. In the .NET Framework, partial classes can be used to access private fields and methods from a test.

It is important that code fragments intended solely for testing do not remain in the released code. In C, conditional compilation directives can be used for this. However, this means that the released code does not fully match the tested code. Systematically running integration tests on the release build helps ensure that no code remains in it that subtly depends on aspects of the unit tests.

There is no consensus among programmers who practice test-driven development on how sensible it is to test private and protected methods and data. Some are convinced that it is enough to test any class only through its public interface, since private variables are just an implementation detail that can change, and such changes should not affect the test suite. Others argue that important aspects of functionality can be implemented in private methods, and that testing them only implicitly through the public interface complicates matters: unit testing implies testing the smallest possible units of functionality. [11] [12]

Unit tests test each module separately. It does not matter whether a module has hundreds of tests or only five. Tests used in test-driven development should not cross process boundaries or use network connections. Otherwise, running the tests takes a long time, and developers become less likely to run the entire test suite. Introducing dependencies on external modules or data also turns unit tests into integration tests. Moreover, if one module in the chain behaves incorrectly, it may not be immediately clear which one.

When the code under development uses databases, web services, or other external processes, it makes sense to isolate the part covered by testing. This is done in two steps:

Using fake and mock objects to represent the outside world means that the real database and other external code are not tested by the test-driven development process. To avoid errors, tests of the real implementations of the interfaces described above are needed. These tests can be kept separate from the other unit tests and are really integration tests. Fewer of them are needed than unit tests, and they can be run less often. Nevertheless, they are most often implemented with the same testing frameworks as the unit tests.

Integration tests that modify data in a database must roll the database back to the state it was in before the test ran, even if the test failed. The following techniques are often used for this:
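The separation between an interface, a fake, and a real implementation can be sketched as follows. Everything here is an assumption made for illustration: the `UserStore` interface, the `users` table, and the `greeting` function are hypothetical, and an in-memory SQLite database stands in for the real external database.

```python
import sqlite3
from typing import Optional, Protocol


class UserStore(Protocol):
    """Hypothetical interface through which the code reaches the outside world."""

    def add(self, name: str, email: str) -> None: ...
    def email_of(self, name: str) -> Optional[str]: ...


class InMemoryUserStore:
    """Fake implementation used by fast unit tests."""

    def __init__(self) -> None:
        self._emails = {}

    def add(self, name: str, email: str) -> None:
        self._emails[name] = email

    def email_of(self, name: str) -> Optional[str]:
        return self._emails.get(name)


class SqliteUserStore:
    """Real implementation; it needs its own, separate integration test."""

    def __init__(self, connection: sqlite3.Connection) -> None:
        self._conn = connection
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS users (name TEXT PRIMARY KEY, email TEXT)"
        )

    def add(self, name: str, email: str) -> None:
        self._conn.execute(
            "INSERT OR REPLACE INTO users VALUES (?, ?)", (name, email)
        )

    def email_of(self, name: str) -> Optional[str]:
        row = self._conn.execute(
            "SELECT email FROM users WHERE name = ?", (name,)
        ).fetchone()
        return row[0] if row else None


def greeting(store: UserStore, name: str) -> str:
    """Code under test: depends only on the interface."""
    email = store.email_of(name)
    return f"Hello {name} <{email}>" if email else f"Hello {name}"


def test_greeting_unit():
    # Unit test: no database involved, only the fake.
    store = InMemoryUserStore()
    store.add("ann", "ann@example.com")
    assert greeting(store, "ann") == "Hello ann <ann@example.com>"
    assert greeting(store, "bob") == "Hello bob"


def test_sqlite_store_integration():
    # Integration test: exercises the real implementation of the interface.
    conn = sqlite3.connect(":memory:")
    try:
        store = SqliteUserStore(conn)
        store.add("ann", "ann@example.com")
        assert store.email_of("ann") == "ann@example.com"
        assert store.email_of("bob") is None
    finally:
        conn.close()
```

The unit test never touches SQLite, so it stays fast. The integration test is the only place where the real storage code runs, which is why it can live in a separate suite that is run less often.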

- A TearDown method, which is available in most testing frameworks.
- try...catch...finally exception-handling structures, where available.

Libraries such as Moq, jMock, NMock, EasyMock, Typemock, jMockit, Unitils, Mockito, Mockachino, PowerMock, and Rhino Mocks, as well as sinon for JavaScript, are designed to simplify the creation of mock objects.
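The two rollback techniques mentioned above can be sketched with Python's `unittest` and `sqlite3` modules. The `accounts` table, the shared connection `SHARED`, and the helper names are hypothetical; an in-memory SQLite database stands in for a real one. In Python, `try...finally` plays the role of `try...catch...finally`.

```python
import sqlite3
import unittest


def open_database() -> sqlite3.Connection:
    """Create a small database with committed baseline data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (owner TEXT, balance INTEGER)")
    conn.execute("INSERT INTO accounts VALUES ('ann', 100)")
    conn.commit()
    return conn


def balance_of(conn: sqlite3.Connection, owner: str) -> int:
    row = conn.execute(
        "SELECT balance FROM accounts WHERE owner = ?", (owner,)
    ).fetchone()
    return row[0]


SHARED = open_database()  # shared by all tests, so state must be restored


class WithdrawalTest(unittest.TestCase):
    def tearDown(self):
        # Technique 1: tearDown runs after each test, passed or failed,
        # and discards every uncommitted change the test made.
        SHARED.rollback()

    def test_withdrawal_reduces_balance(self):
        SHARED.execute(
            "UPDATE accounts SET balance = balance - 30 WHERE owner = 'ann'"
        )
        self.assertEqual(balance_of(SHARED, "ann"), 70)


def balance_after_trial_withdrawal(conn: sqlite3.Connection, amount: int) -> int:
    # Technique 2: try...finally guarantees the rollback even on an exception.
    try:
        conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE owner = 'ann'",
            (amount,),
        )
        return balance_of(conn, "ann")
    finally:
        conn.rollback()
```

After the test class has run, `balance_of(SHARED, "ann")` is back at the committed baseline of 100, because the update was never committed and `tearDown` rolled it back.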
