Lecture

There is no more perfect psychophysiological instrument than speech, which people use to exchange thoughts, messages, orders, experiences, etc.

By definition, speech is a historically established form of communication between people through language. For each participant in speech communication, the speech mechanism necessarily includes three main links: speech perception, speech production, and a central link called "internal speech". Thus, speech is a multi-element, multi-level psychophysiological process. It relies on the work of various analyzers (auditory, visual, tactile and motor), through which speech signals are recognized and generated.

A person’s ability to analyze and synthesize speech sounds is closely related to the development of phonemic hearing, that is, the hearing that provides perception and understanding of the phonemes of a given language. In turn, verbal communication relies on the laws of a particular language, which dictate a system of phonetic, lexical, grammatical and stylistic rules. It is important to emphasize that speech activity is not only the perception of speech signals and the pronunciation of words: full-fledged verbal communication also implies understanding speech in order to establish the meaning of a message.

Among cognitive processes, speech occupies a special place: because it is included in various cognitive acts (thinking, perception, sensation), it contributes to the “signification” of the information a person receives, that is, to assigning meaning to it.

However, it is still unclear how one person turns a thought into a stream of sounds while another, perceiving this sound stream, understands the thought addressed to him. Nevertheless, the natural-science approach to the study of speech has its own history, and a prominent place in it belongs to ideas developed within the physiology of higher nervous activity (HNA).

Verbal communication is considered a uniquely human feature.

Animals' ability to imitate speech. Some animals are known to imitate the sounds of human speech with amazing accuracy. However, while imitating speech, animals cannot give words new meanings, so the words they produce cannot be called speech. Moreover, only a few animals have the vocal apparatus needed to imitate the sounds of human speech. Experiments have also shown that most animals lack the invariant perception of phonemes that is characteristic of humans.

Experience of people interacting with animals shows that most mammals can learn to understand the meanings of many words and phrases. But this understanding does not constitute true verbal communication. An animal will never be able to learn the syntactic rules of a language: on hearing a new sentence, it cannot parse it, identify the subject and predicate, or use it in a different context.

This view might seem to contradict experiments on teaching apes the sign language of the deaf. For example, a gorilla named Koko mastered about 370 signs. However, it should be emphasized that the gestures and signs used by great apes serve only a communicative function. Higher conceptual forms of speech are inaccessible to apes. Moreover, these animals cannot compose new sentences from signs or change the order of gestures and signs to express the same thought, or rather, the same need.

It is also known that animals communicate with one another using signs and sounds, each with its own rigidly fixed meaning. In most cases such a signal is an innate reaction to a specific situation, and the other animal's response to it is also genetically determined. Dolphins, for instance, have an unusually rich repertoire of vocalizations, but recent studies of their “speech” using modern analysis methods have not yielded positive results: dolphin vocalization is chaotic and random, and it is impossible to isolate invariants that could be considered messages with a definite meaning.

Non-verbal communication. On the other hand, communication through signs is inherent not only in animals but also in humans: people make intensive use of facial expressions and gestures. According to some data, words convey only 7% of the total information in communication, while 38% is carried by intonation and 55% by non-verbal signals. According to other estimates, the average person speaks for only 10-11 minutes a day, and the average sentence lasts about 2.5 seconds; the verbal component of a conversation is about 35% and the non-verbal component about 65%. It is believed that words mainly transmit information, while gestures convey the speaker's attitude toward that information; sometimes gestures can even replace words.

I.P. Pavlov proposed singling out verbal stimuli as a special system that distinguishes humans from animals.

The second signal system. According to I.P. Pavlov, humans have two systems of signal stimuli. The first signal system comprises the direct effects of the internal and external environment on various receptors (this system also exists in animals). The second signal system consists only of words, and only a small part of these words denote various sensory effects on a person.

Thus, with the concept of the second signal system, I.P. Pavlov outlined the special features of human higher nervous activity that significantly distinguish humans from animals. This concept covers a set of conditioned-reflex processes associated with the word. The word is understood as a “signal of signals” and is as real a conditioned stimulus as any other. The work of the second signal system consists primarily in the analysis and synthesis of generalized speech signals.

These ideas were developed in the works of M.M. Koltsova, T.N. Ushakova, N.I. Chuprikova and others. For example, T.N. Ushakova, relying on these ideas and on modern views of the structure of neural networks (see topic 1), proposed distinguishing three hierarchically organized levels in the structure of internal speech, which are clearly distinguishable in ontogenesis.

Three levels of inner speech. The first level is associated with the mechanisms for using and mastering individual words that denote events and phenomena of the external world. This level implements the so-called nominative function of language and speech and, in ontogenesis, serves as the basis for the further development of the mechanisms of internal speech. M.M. Koltsova's work on the ontogeny of speech showed that traces of verbal signals in the child's cerebral cortex, together with images of perceived objects, form specialized complexes of temporary connections that can be regarded as the basic elements of internal speech.

The second level is related to the formation of multiple links between these basic elements and the vocabulary of the language, forming the so-called "verbal network". Numerous electroencephalographic experiments have shown that the real-world and linguistic relatedness of words corresponds to the relatedness of their traces in the nervous system. These links are the "verbal networks", or "semantic fields". When a node of the verbal network is activated, excitation spreads with decay to neighboring nodes of the network. Such connections are stable and persist throughout life. T.N. Ushakova suggests that the language experience of mankind is embodied in the structure of the verbal network, and that the verbal network itself constitutes the static basis of people's speech communication, allowing them to transmit and perceive speech information.
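The idea of damped excitation spreading from an activated node to its neighbors can be illustrated with a generic spreading-activation toy. This is a minimal sketch and not Ushakova's model. It assumes a hand-made word graph (`VERBAL_NETWORK`, hypothetical), a fixed decay factor per link and a cutoff threshold, none of which come from the lecture.

```python
from collections import deque

# Hypothetical toy "verbal network": each word is linked to related words.
VERBAL_NETWORK = {
    "doctor": ["nurse", "hospital"],
    "nurse": ["doctor", "hospital"],
    "hospital": ["doctor", "nurse", "building"],
    "building": ["hospital", "house"],
    "house": ["building"],
    "violin": [],  # unrelated, unconnected word
}

def spread_activation(network, source, initial=1.0, decay=0.5, threshold=0.05):
    """Spread activation from `source` through the network.

    Every link multiplies activation by `decay` (damped excitation), and
    propagation stops once the passed-on activation drops below `threshold`.
    Each node keeps the strongest activation that reaches it.
    """
    activation = {source: initial}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        passed_on = activation[node] * decay
        if passed_on < threshold:
            continue
        for neighbor in network.get(node, ()):
            if passed_on > activation.get(neighbor, 0.0):
                activation[neighbor] = passed_on
                queue.append(neighbor)
    return activation

act = spread_activation(VERBAL_NETWORK, "doctor")
# doctor 1.0, nurse 0.5, hospital 0.5, building 0.25, house 0.125; violin absent
```

Activation falls off with distance in the graph. This mirrors the qualitative claim that closely related words are excited more strongly than distant ones.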

Third level. Because human speech is always dynamic and individual, the verbal network, being static and standardized, can only be a prerequisite for the speech process. Therefore, according to T.N. Ushakova, the mechanism of internal speech includes a third, dynamic level, whose temporal and semantic characteristics correspond to those of the external speech being produced. This level consists of rapidly alternating activations of individual nodes of the verbal network. Each word a person utters is preceded by activation of the corresponding structure of internal speech, which is then recoded into commands to the articulatory organs.

Verbal networks actually represent the morphofunctional substrate of the second signal system.

Speech sounds are formed as a result of changes in the shape and volume of the so-called extension tube (the vocal tract), which consists of the mouth, nose and pharynx. These changes produce resonance: in the resonator system, which is responsible for the timbre of the voice, formants specific to a given language are formed.

Articulation is the joint work of the speech organs required to pronounce speech sounds. It is regulated by the speech zones of the cortex and by subcortical formations. Proper articulation requires a specific system of speech movements, which is formed under the influence of the auditory and kinesthetic analyzers.

The speech process can be viewed as the result of the work of the peripheral organs. It consists of generating differentiated acoustic sequences (sounds) and is a highly coordinated voluntary motor activity of the phonatory and articulatory apparatus. All speech characteristics, such as rate, loudness and timbre, are finally formed in men after the voice breaks and in women after puberty. From then on they form a stable functional system that remains almost unchanged until extreme old age. That is why we can easily recognize a friend by voice and manner of speaking.

Clinical data from the study of local brain lesions, together with the results of electrical stimulation of brain structures, have made it possible to clearly identify the specialized cortical and subcortical structures responsible for the ability to produce and understand speech. Thus, it has been established that in right-handers, local lesions of the left hemisphere, whatever their nature, usually impair speech function as a whole rather than abolishing any single speech function.

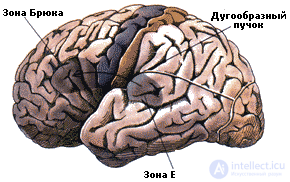

The main role in the proper functioning of phonemic hearing belongs to a central speech organ, the auditory-speech zone of the cerebral cortex: the posterior third of the superior temporal gyrus of the left hemisphere, the so-called Wernicke's area. Another central speech organ is the so-called Broca's area. In individuals with left-hemisphere speech dominance, it lies in the lower part of the third frontal gyrus of the left hemisphere. Broca's area provides the motor organization of speech.

In the 1960s, the studies of W. Penfield became widely known. During open-brain operations, he stimulated the speech zones of the cortex (Broca's and Wernicke's areas) with weak currents and observed changes in the patients' speech activity. (Operations of this kind are sometimes performed under local anesthesia, so verbal contact with the patient can be maintained.) These findings were confirmed in later work. It was found that electrical stimulation can delineate all the cortical zones and areas involved in performing a particular speech task, and that these areas are highly specialized with respect to particular aspects of speech activity.

Syntagmatic and paradigmatic aspects of speech. Studying local brain lesions, neuropsychology has established two categories of speech disorders (aphasias): syntagmatic and paradigmatic. Syntagmatic aphasias involve difficulties in the dynamic organization of utterances and are observed with lesions of the anterior parts of the left hemisphere. Paradigmatic aphasias occur with lesions of the posterior parts of the left hemisphere and involve disruption of speech codes (phonemic, articulatory, semantic, etc.).

Syntagmatic aphasia occurs when the anterior regions of the brain, in particular Broca's area, are disrupted. Damage to Broca's area causes efferent motor aphasia, in which the patient's own speech is impaired while comprehension of other people's speech is almost fully preserved. In efferent motor aphasia, the kinetic melody of words is disrupted because the patient cannot switch smoothly from one element of an utterance to the next. Patients with Broca's aphasia are aware of most of their speech errors, but they can communicate only with great difficulty and for short periods. Damage to another part of the anterior speech zones (the lower parts of the premotor cortex) produces so-called dynamic aphasia. In dynamic aphasia, the patient loses the ability to formulate a statement and translate thoughts into extended speech (a disorder of the programming function of speech).

Damage to Wernicke's area impairs phonemic hearing, causing difficulties in understanding oral speech and in writing to dictation (sensory aphasia). Such a patient's speech is rather fluent but usually meaningless, because the patient does not notice his or her errors. Other aphasias are also associated with damage to the posterior regions of the speech cortex. These include acoustic-mnestic and optic-mnestic aphasias, which are based on memory impairment, and semantic aphasia, an impaired understanding of logical-grammatical structures that express spatial relations between objects.

Mechanisms of speech perception. A fundamental tenet of speech science is that a message can be understood only after the speech signal has been converted into a sequence of discrete elements. It is further assumed that this sequence can be represented by a string of phoneme symbols, and that each language has very few phonemes (for example, there are 39 in Russian). Thus, the speech perception mechanism necessarily includes a phonetic interpretation unit that converts the speech signal into a sequence of elements. The specific psychophysiological mechanisms behind this process are far from clear. Nevertheless, the principle of detector coding appears to apply here as well (see topic 5, paragraph 5.1). A fairly strict tonotopic organization has been found at all levels of the auditory system: neurons sensitive to different sound frequencies are arranged in a definite order in the subcortical auditory centers and the primary auditory cortex. This means that neurons have well-defined frequency selectivity and respond to a particular frequency band that is much narrower than the full auditory range. It is also assumed that the auditory system contains more complex types of detectors, for example, detectors that respond selectively to features of consonants. At the same time, it remains unclear which mechanisms form the phonetic image of a word and identify it.
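Tonotopic, frequency-selective coding can be pictured as a bank of detectors, each tuned to its own narrow band. This is a minimal sketch under stated assumptions. It uses 10 model neurons with characteristic frequencies spaced evenly on a logarithmic scale between 20 Hz and 20 kHz and a fixed band of ±0.5 octave around each one. These numbers are illustrative, not physiological measurements, and real tuning curves are asymmetric and vary in width.

```python
import math

def make_tonotopic_map(n_neurons=10, f_low=20.0, f_high=20000.0):
    """Characteristic frequencies (Hz), ordered low to high and spaced evenly
    on a log scale, mimicking the ordered layout of auditory neurons."""
    step = (math.log10(f_high) - math.log10(f_low)) / (n_neurons - 1)
    return [10 ** (math.log10(f_low) + i * step) for i in range(n_neurons)]

def responding_neurons(tone_hz, cfs, half_width_octaves=0.5):
    """Indices of model neurons whose band (CF +/- half_width_octaves)
    contains the tone; each band covers only a small part of the range."""
    return [i for i, cf in enumerate(cfs)
            if abs(math.log2(tone_hz / cf)) <= half_width_octaves]

cfs = make_tonotopic_map()
print(responding_neurons(2000.0, cfs))  # [6]
print(responding_neurons(1000.0, cfs))  # [5]
```

Each tone excites only the detector whose band contains it. In this coarse toy, some tones fall between bands and excite none.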

In this regard, the model of letter and word identification in reading developed by D. Meyer and R. Schvaneveldt is of particular interest. In their view, identification begins when a series of letters enters the "feature analyzer". The resulting codes, which contain information about the shape of the letters (straight lines, curves, angles), are passed to word detectors. If these detectors find a sufficient number of features, a signal is generated confirming that a word has been detected. Detecting a specific word also activates neighboring words. For example, when the word "computer" is detected, words located close to it in the person's memory network, such as "software", "hard drive", "Internet", etc., are also activated. This excitation of semantically related words facilitates their subsequent detection. The model is supported by the finding that subjects recognize related words faster than unrelated ones. It is also attractive because it opens a path toward understanding the structure of semantic memory.
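The priming effect in this model can be shown with a toy detector layer. This is a minimal sketch, not Meyer and Schvaneveldt's formal model. The class `WordDetectors`, the threshold of 10, the pre-activation boost of 4 and the association list are all hypothetical. Feature evidence arrives in equal increments, and the number of increments needed to reach threshold stands in for recognition time.

```python
class WordDetectors:
    """Toy word-detector layer with associative pre-activation (priming)."""

    def __init__(self, vocabulary, associations, threshold=10.0, priming_boost=4.0):
        self.associations = associations
        self.threshold = threshold
        self.priming_boost = priming_boost
        self.activation = {word: 0.0 for word in vocabulary}

    def recognize(self, word, evidence_per_step=1.0):
        """Accumulate feature evidence until the detector reaches threshold.
        Returns the number of steps needed (a proxy for recognition time)."""
        steps = 0
        while self.activation[word] < self.threshold:
            self.activation[word] += evidence_per_step
            steps += 1
        self.activation[word] = 0.0  # detector resets after firing
        # Detection also pre-activates semantically related words.
        for related in self.associations.get(word, []):
            if related in self.activation:
                self.activation[related] += self.priming_boost
        return steps

ASSOCIATIONS = {"computer": ["software", "hard drive", "internet"]}
detectors = WordDetectors(
    ["computer", "software", "hard drive", "internet", "garden"], ASSOCIATIONS)
print(detectors.recognize("computer"))  # 10
print(detectors.recognize("software"))  # 6  (primed by "computer")
print(detectors.recognize("garden"))    # 10 (unrelated, no priming)
```

The related word reaches threshold in fewer steps than the unrelated one. This is the qualitative pattern the lecture cites as evidence for the model.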

Organization of the speech response. It is assumed that in an adult who has mastered a language, the perception and pronunciation of words are mediated by internal codes that support the phonological, articulatory, visual and semantic analysis of the word. Moreover, each of these codes, and the operations performed on their basis, has its own localization in the brain.

Clinical data allow the following sequence of events to be reconstructed. The acoustic information contained in a word is processed in the "classical" auditory system and in other, "non-auditory" brain structures (subcortical regions). The information reaches the primary auditory cortex and then Wernicke's area, which provides understanding of the word's meaning. There it is transformed to form a program for the speech response. To pronounce the word, its "image", or semantic code, must reach Broca's area. Broca's and Wernicke's areas are connected by an arc-shaped bundle of nerve fibers (the arcuate fasciculus). A detailed articulation program arises in Broca's area and is carried out by activating the face area of the motor cortex, which controls the facial muscles.

If, however, a word arrives through the visual system, the primary visual cortex is engaged first. Information about the word that has been read is then sent to the angular gyrus, which links the visual form of the word with its acoustic signal in Wernicke's area. The further path leading to a speech response is the same as in purely auditory perception.
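The sequence of stations described above corresponds to what is often called the Wernicke-Geschwind model. It can be written as a small directed graph and traced with breadth-first search. This is a schematic sketch only. The station names are simplified labels, the subcortical relays are omitted, and `PATHWAYS` and `route` are hypothetical names.

```python
from collections import deque

# Simplified routes from the text; a schematic, not an anatomical atlas.
PATHWAYS = {
    "auditory input": ["primary auditory cortex"],
    "visual input": ["primary visual cortex"],
    "primary auditory cortex": ["Wernicke's area"],
    "primary visual cortex": ["angular gyrus"],
    "angular gyrus": ["Wernicke's area"],
    "Wernicke's area": ["arcuate fasciculus"],
    "arcuate fasciculus": ["Broca's area"],
    "Broca's area": ["motor cortex (face area)"],
}

def route(start, goal, graph=PATHWAYS):
    """Breadth-first search for the shortest chain of stations from start to goal.
    Returns the list of stations, or None if goal is unreachable."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None

heard = route("auditory input", "motor cortex (face area)")
read = route("visual input", "motor cortex (face area)")
# Reading adds the visual cortex and angular gyrus, then joins the auditory route.
```

From Wernicke's area onward the two routes coincide. This matches the statement that the rest of the path is the same as in purely auditory perception.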

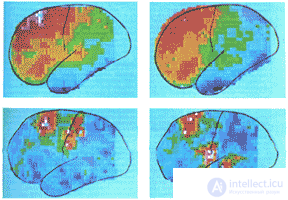

Data obtained with positron emission tomography (PET) show that in right-handed, healthy, literate adults, the individual operations of word perception are indeed supported by different areas, mainly in the left hemisphere. When words are heard, two areas are activated: the primary auditory area and the temporo-parietal area. The left temporo-parietal area appears to be directly involved in phonological coding, that is, reconstructing the sound image of the word. During reading (perception of written signs), this area is usually not activated. However, more complex verbal tasks presented in written form may also call for phonological operations, which are associated with activation of the temporo-parietal area.

The main foci of activation during perception of written words lie in the occipital region, in the primary projection and secondary associative areas, and cover both the left and the right hemisphere. Judging by these data, the visual "image" of a word is formed in the occipital regions. Semantic analysis of a word, and decision making in cases of semantic ambiguity, rely mainly on active involvement of the anterior parts of the left hemisphere, primarily the frontal area. This area is assumed to be connected with the neural networks that support verbal associations, on the basis of which the responding behavior is programmed.

From all of the above, the following conclusion can be drawn: even a relatively simple lexical task involving perception and analysis of words requires the participation of a number of areas in the left hemisphere and, in part, the right hemisphere (see Reader 8.1).

It now seems evident that the two cerebral hemispheres differ clearly in how they support speech activity. Considerable data point to morphological differences between symmetrical cortical areas involved in speech. Thus, the length and orientation of the Sylvian fissure have been found to differ between the right and left hemispheres. In an adult right-handed person, its posterior part, which forms Wernicke's area, is seven times larger in the left hemisphere than in the right.

Speech functions of the left hemisphere. In right-handers, speech functions are localized predominantly in the left hemisphere; only 5% of right-handers have their speech centers in the right hemisphere. Most left-handers, about 70%, also have their speech areas in the left hemisphere. In about 15% of left-handers speech is controlled by the right hemisphere, and in the remaining 15% or so the hemispheres have no clear functional specialization for speech.

It has been established that the left hemisphere is capable of verbal communication and of operating with other formalized symbols (signs). It "understands" speech addressed to it well, both oral and written, and produces grammatically correct responses. It dominates in formal linguistic operations, freely manipulates symbols and grammatical constructions within formal logic and previously learned rules, and performs syntactic analysis and phonetic representation. It can regulate complex motor speech functions and apparently processes input signals sequentially. Unique features of the left hemisphere include control of the fine articulatory apparatus and highly sensitive programs for discriminating temporal sequences of phonetic elements. Genetically programmed morphofunctional complexes are assumed to exist in the left hemisphere that process the rapid sequence of discrete units of information from which speech is built.

However, unlike the right hemisphere, the left hemisphere does not distinguish speech intonation and voice modulation. It is insensitive to music as a source of aesthetic experience, although it can pick out a stable rhythm in sounds. It also copes poorly with recognizing complex images that cannot be decomposed into component elements. Thus, it cannot identify images of ordinary human faces or perceive works of art informally and aesthetically. The right hemisphere handles all of these kinds of activity successfully.

The Wada test. To determine precisely which hemisphere is specialized for speech, a special technique is used: the so-called Wada test, a selective "anesthesia of a hemisphere". A solution of a hypnotic (sodium amytal) is injected into one of the carotid arteries in the neck, either left or right. Each carotid artery supplies blood to only one hemisphere, so the bloodstream carries the drug into the corresponding hemisphere, where it takes effect. During the test the subject lies on his back and counts aloud. If the drug reaches the speech hemisphere, counting stops for a pause that, depending on the dose, may last 3-5 minutes. Otherwise, the speech arrest lasts only a few seconds. Thus, this method makes it possible to temporarily "switch off" either hemisphere and study the isolated work of the other.
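The inference drawn from the test can be written as a simple decision rule on the two counting-arrest durations. This is an illustrative sketch, not a clinical protocol. The 60-second cutoff is an assumption chosen to lie between "a few seconds" and "3-5 minutes". The "bilateral" label is likewise an assumed reading of a long arrest on both sides, in the spirit of the left-handers said to lack clear speech specialization.

```python
def speech_hemisphere(arrest_left_s, arrest_right_s, cutoff_s=60.0):
    """Infer the speech-dominant hemisphere from counting-arrest durations
    (in seconds) after left- and right-sided injection.

    cutoff_s is a hypothetical boundary between a brief interruption and a
    true speech arrest."""
    left_long = arrest_left_s >= cutoff_s
    right_long = arrest_right_s >= cutoff_s
    if left_long and not right_long:
        return "left"
    if right_long and not left_long:
        return "right"
    if left_long and right_long:
        return "bilateral"
    return "inconclusive"

print(speech_hemisphere(240, 5))  # left: long arrest only after left injection
```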

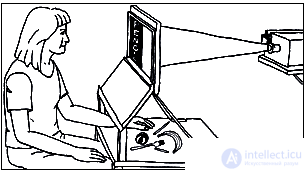

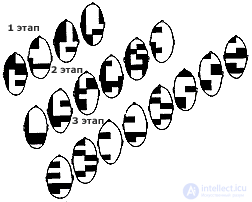

Dichotic listening. When two stimuli differing in content or sound are presented, one through a headphone to the left ear and the other to the right ear, the information arriving at each ear is perceived differently. The method that established that the symmetrical auditory channels are functionally separate and work with different effectiveness is called "dichotic listening".

Model of auditory asymmetry in normal subjects proposed by D. Kimura (cited after S. Springer and G. Deutsch, 1983). A. When a stimulus is presented monaurally to the left ear, information is transmitted to the right hemisphere via the contralateral pathways and to the left hemisphere via the ipsilateral pathways. The subject correctly names the syllable ('ba'). B. When a stimulus is presented monaurally to the right ear, information is sent to the left hemisphere via the contralateral pathways and to the right hemisphere via the ipsilateral pathways. The subject correctly names the syllable ('ha'). C. Under dichotic presentation, transmission along the ipsilateral pathways is suppressed, so 'ha' goes only to the left (speech) hemisphere, while 'ba' reaches the left (speech) hemisphere only through the commissures. As a result, 'ha' is usually identified more accurately than 'ba' (the right-ear advantage).

The essence of this method is the simultaneous presentation of different acoustic signals to the right and left ears and the subsequent comparison of how they are perceived. For example, pairs of digits are presented simultaneously, one digit to each ear, at a rate of two pairs per second. After hearing three pairs, subjects are asked to name the digits. It turned out that subjects prefer to report first the digits presented to one ear and then those presented to the other. When asked to report the digits in the order of presentation, they gave significantly fewer correct answers. On this basis, two assumptions were made: first, that the auditory channels function separately, and second, that the contralateral (opposite-side) auditory pathway is more powerful than the ipsilateral (same-side) pathway.

Numerous experiments have shown that when the right and left auditory channels compete, the advantage goes to the ear contralateral to the hemisphere that dominates in processing the presented signals. Thus, if auditory signals are presented simultaneously to both ears, people whose right hemisphere is dominant for speech perceive the signals in the left ear better. People whose left hemisphere is dominant for speech perceive those in the right ear better. Since the overwhelming majority of people are right-handed and their speech center is usually in the left hemisphere, the right auditory channel tends to dominate. This phenomenon has a special name: the right-ear advantage. Its magnitude varies from person to person. The individual degree of the effect can be assessed with a special coefficient calculated from the difference in accuracy of reproducing signals presented to the left and right ears.
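The lecture does not give the formula for this coefficient. One commonly used form in dichotic-listening research is the laterality index (R − L) / (R + L) × 100, where R and L are the numbers of correct reports from the right and left ears. The sketch below assumes that form; other normalizations exist.

```python
def ear_laterality_coefficient(right_correct, left_correct):
    """Ear asymmetry coefficient in percent, assuming the form (R - L) / (R + L) * 100.

    Positive values indicate a right-ear advantage (consistent with left-hemisphere
    speech dominance), negative values a left-ear advantage, 0 no asymmetry."""
    total = right_correct + left_correct
    if total == 0:
        raise ValueError("no correct responses from either ear")
    return 100.0 * (right_correct - left_correct) / total

print(ear_laterality_coefficient(36, 24))  # 20.0
```

Normalizing by the total number of correct reports lets subjects with different overall accuracy be compared on the same scale.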

Thus, the effect rests on the separate functioning of the auditory channels. It is assumed that during dichotic listening, transmission along the direct (ipsilateral) pathway is inhibited. For right-handers, this means that information from the left ear first travels along the crossed pathway to the right hemisphere and then through special connecting pathways (commissures) to the left hemisphere, and part of it is lost along the way.
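The logic of Kimura's scheme can be expressed as a small routing calculation. This is a minimal sketch with hypothetical parameters: crossed-pathway strength 1.0, uncrossed strength 0.6, and 70% of a signal surviving commissural transfer. Under dichotic presentation the uncrossed pathway is treated as fully suppressed, and each ear's signal reaches the speech hemisphere along its strongest available route.

```python
def strength_at_speech_hemisphere(ear, dichotic, speech_side="left",
                                  contra=1.0, ipsi=0.6, commissure=0.7):
    """Relative strength of one ear's signal on arrival at the speech hemisphere.

    contra / ipsi: strength along crossed and uncrossed pathways (hypothetical).
    commissure: fraction surviving transfer between hemispheres (hypothetical).
    Under dichotic presentation the ipsilateral pathway is suppressed entirely."""
    crossed_target = "right" if ear == "left" else "left"
    uncrossed_target = ear
    ipsi_strength = 0.0 if dichotic else ipsi
    routes = []
    for target, strength in ((crossed_target, contra), (uncrossed_target, ipsi_strength)):
        if target == speech_side:
            routes.append(strength)               # arrives directly
        else:
            routes.append(strength * commissure)  # must cross the commissures
    return max(routes)

right = strength_at_speech_hemisphere("right", dichotic=True)  # 1.0
left = strength_at_speech_hemisphere("left", dichotic=True)    # 0.7, via commissures
```

With a left-hemisphere speech center, the right ear wins. Setting `speech_side="right"` reverses the advantage, as described for people with right-hemisphere speech.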

However, the right-ear advantage is found in only 80% of right-handers, whereas the speech center (according to the Wada test) lies in the left hemisphere in 95% of right-handers. The reason is that in some people the direct (ipsilateral) auditory pathways are morphologically predominant.

Dichotic listening is currently one of the most common methods for studying interhemispheric asymmetry of speech in healthy people of different ages and in people with CNS pathology.

Model of speech signal processing in the human auditory system. A generalized model of how the cerebral hemispheres interact in speech perception was proposed by V.P. Morozov et al. (1988) on the basis of dichotic testing. Each hemisphere is presumed to contain two successive blocks: signal processing and decision making. The left-hemisphere processing block selects the signal segments that correspond to linguistic units (phonemes, syllables). It then determines their characteristics (spectral maxima, noise segments, pauses) and identifies the segments. The right-hemisphere processing block compares the pattern of the presented signal with integral standards stored in memory. To do so, it uses information about the signal envelope, the duration and intensity ratios between segments, the average spectrum and so on. The standards are stored in compressed form in a dictionary that is organized associatively and searched by probabilistic prediction. On the basis of these results, the decision block of each hemisphere forms a linguistic decision.

The key point is that, while speech stimuli are being processed, information can be exchanged 1) between corresponding blocks of the two hemispheres and 2) between the processing and decision blocks within each hemisphere. This kind of interaction provides intermediate assessment and opens up the possibility of correction. In addition, according to this model, each hemisphere can recognize the signal independently, but the right hemisphere is limited by the size of its dictionary of integral standards.

This model of hemispheric interaction in speech perception thus assumes parallel processing of speech information according to different principles. The left hemisphere performs a segment-by-segment analysis of the speech signal. The right hemisphere uses a holistic principle based on comparing the acoustic image of the signal with stored standards.
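The parallel architecture described above can be illustrated with a deliberately simplified toy program. This is not an implementation of Morozov's model. Every concrete choice here is a hypothetical stand-in for illustration:

- one spectral peak per segment stands in for the segmental features;
- relative segment durations serve as the "compressed" integral standard;
- the dictionaries are tiny;
- a 0.1 distance threshold decides whether a standard matches;
- when both pipelines produce a decision, the left one wins.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Segment:
    """One signal segment, described by hypothetical acoustic features."""
    spectral_peak_hz: float  # stands in for the segment's spectral maxima
    duration_ms: float


# Hypothetical phoneme prototypes (dominant spectral peak, Hz) used by the
# left-hemisphere, segment-by-segment processing block.
PHONEME_PROTOTYPES = {"m": 250.0, "a": 800.0, "i": 2300.0, "s": 5000.0}

# Hypothetical lexicon consulted by the left-hemisphere decision block.
LEXICON = {"mama", "masa", "misa"}

# Hypothetical right-hemisphere dictionary of compressed "integral standards"
# (relative segment durations). It is deliberately small, mirroring the
# model's claim that this dictionary is limited in size.
INTEGRAL_STANDARDS = {"mama": (0.2, 0.3, 0.2, 0.3)}


def left_processing(segments: Sequence[Segment]) -> str:
    """Identify each segment as the phoneme with the nearest prototype peak."""
    return "".join(
        min(PHONEME_PROTOTYPES,
            key=lambda p: abs(PHONEME_PROTOTYPES[p] - s.spectral_peak_hz))
        for s in segments
    )


def left_decision(phonemes: str) -> Optional[str]:
    """Accept the phoneme string only if it is a known word."""
    return phonemes if phonemes in LEXICON else None


def right_processing(segments: Sequence[Segment]) -> Tuple[float, ...]:
    """Reduce the whole signal to one holistic pattern: relative durations."""
    total = sum(s.duration_ms for s in segments)
    return tuple(round(s.duration_ms / total, 2) for s in segments)


def right_decision(pattern: Tuple[float, ...],
                   max_distance: float = 0.1) -> Optional[str]:
    """Return the closest stored standard if it is similar enough."""
    best_word, best_dist = None, math.inf
    for word, standard in INTEGRAL_STANDARDS.items():
        if len(standard) != len(pattern):
            continue  # a standard of a different length cannot match
        dist = math.dist(standard, pattern)
        if dist < best_dist:
            best_word, best_dist = word, dist
    return best_word if best_dist <= max_distance else None


def recognize(segments: Sequence[Segment]) -> Optional[str]:
    """Run both hemispheric pipelines and combine their decisions.

    Hypothetical combination rule: prefer the left (segmental) decision and
    fall back on the right (holistic) one when the left pipeline fails.
    """
    left = left_decision(left_processing(segments))
    right = right_decision(right_processing(segments))
    return left if left is not None else right


clean = [Segment(260, 80), Segment(780, 120), Segment(240, 80), Segment(820, 120)]
# Last segment distorted: its spectral peak now lies closest to "i".
distorted = [Segment(260, 80), Segment(780, 120), Segment(240, 80), Segment(2000, 120)]
print(recognize(clean))      # mama (both pipelines agree)
print(recognize(distorted))  # mama (left yields unknown "mami"; right rescues it)
```

The second call mirrors the model's point that each hemisphere can recognize a signal on its own. The holistic route succeeds only for words that are present in its small dictionary.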

There are two known concepts concerning the functional specialization of the hemispheres in ontogenesis: the equipotentiality of the hemispheres and progressive lateralization. The first assumes the initial equality, or equipotentiality, of the hemispheres with respect to all functions, including speech. This concept is supported by numerous data on the high plasticity of the child's brain and the interchangeability of symmetrical parts of the brain in the early stages of development.

Interhemispheric differences. According to the second concept, hemispheric specialization already exists at birth. In right-handed people, it manifests itself as a pre-programmed capacity of the left-hemisphere neural substrate to develop the speech function and to determine the activity of the leading hand. Indeed, it has been established that hemispheric asymmetry in the morphological structure of the future speech zones can be detected already in the fetus, i.e. long before the speech function actually develops.

Newborns show anatomical differences between the left and right hemispheres: the Sylvian fissure on the left is significantly larger than on the right. This suggests that structural hemispheric differences are to some extent congenital. In adults, structural differences are manifested mainly in the larger area of Wernicke's zone in the temporo-parietal region.

Evidence that the structural asymmetry of the brain in newborns reflects functional differences was obtained by studying electroencephalographic responses to human speech sounds. Recordings of the brain's electrical activity in infants listening to human speech showed that in 9 out of 10 children the response amplitude was noticeably larger in the left hemisphere than in the right. With non-verbal sounds (noise or musical chords), the response amplitude was higher in the right hemisphere in all children.

Thus, studies of children in the first year of life revealed signs of functional inequality of the hemispheres in their responses to speech stimuli. They also confirmed the concept of an innate “speech” specialization of the left hemisphere in right-handed people.

Transfer of speech centers. However, clinical practice points to the high plasticity of the cerebral hemispheres at early stages of development. This plasticity manifests itself above all in the ability to restore speech functions after local lesions of the left hemisphere by transferring the speech centers from the left to the right hemisphere. It has been established that if the speech areas of the left hemisphere are damaged early in life, the symmetrical parts of the right hemisphere can take over their functions. If the left hemisphere has to be removed in infancy for medical reasons, speech development does not stop and, moreover, proceeds without visible impairment.

Speech can develop in infants whose left hemisphere has been removed because the speech centers are transferred to the right hemisphere. Later, standard tests of verbal intelligence reveal no significant differences between the verbal abilities of operated children and those of other children. Only highly specialized tests reveal a difference in speech functions between children whose left hemisphere was removed and healthy children: those operated on in infancy have difficulty using complex grammatical structures.

A relatively complete and effective replacement of speech functions is possible only if it begins in the early stages of development, when the nervous system is highly plastic. As the brain matures, plasticity decreases, and eventually a period comes when such substitution becomes impossible.

Despite theoretical disagreements, all researchers agree on one point: in children, especially of preschool age, the right hemisphere plays a much larger role in speech processes than in adults. However, progress in speech development is associated with the active involvement of the left hemisphere. According to some views, language learning acts as a trigger for the normal specialization of the hemispheres. If speech is not mastered at the proper time, cortical areas normally intended for speech and related abilities may undergo functional degeneration. This gave rise to the idea of a sensitive period of speech development. It covers a rather long stretch of ontogenesis (all of preschool childhood), during which the plasticity of the nerve centers gradually decreases until it is lost by the onset of puberty. In addition, by the age of 7-8 years, a right-hemisphere advantage forms in the perception of emotions in singing and speech.

It is generally accepted that the behavioral criterion of language acquisition is the child's ability to regulate speech activity consciously and voluntarily. According to clinical data, the structures of the left hemisphere, which is dominant for speech (in right-handers), provide precisely this conscious, voluntary level of organization of speech activity, not the mere fact of its execution.

A new stage in the study of the psychophysiology of speech processes is associated with the development of electrophysiological methods: primarily the recording of single-neuron activity, evoked potentials and total bioelectric activity.

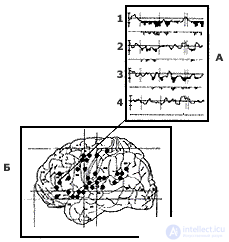

Neural correlates of word perception. N.P. Bekhtereva and her colleagues (1985) conducted unique studies of the impulse activity of human neurons during the perception of various acoustic stimuli, both speech and non-speech. These studies revealed some general principles of the acoustic coding of words in brain structures. They showed that the impulse activity of neural populations, as well as neurodynamic rearrangements in various links of the perception system, is systematically related to the acoustic characteristics of the speech stimulus. Neurophysiological correlates of phonetic coding can be distinguished in the impulse activity of various brain structures. During the perception and reproduction of both vowel and consonant phonemes, the spatiotemporal organization of neural ensembles is specific and stable. This temporal stability is most pronounced in the coding of vowel phonemes, where it spans an interval of approximately 200 ms.

It was also shown that the principle of phoneme coding prevails during learning and verbalization of the response; alongside it, a more compact “block” coding of syllables and words is possible. Being more economical, this form represents another coding level and serves as a kind of bridge in the semantic grouping of words that differ in their acoustic characteristics. Some tasks involved semantic generalization, and some linguistic tests contained both native-language words and words of a foreign language unknown to the subject. Findings from these tasks and tests shed light on the neurophysiological features of semantic coding. Semantic features are reflected in differences in the neurodynamics of impulse flows across brain areas. These differences vary with how well the word is known and with its relation to the general semantic field. It turned out that giving a previously unknown word a meaning changes the neurophysiological indicators, and that common neurophysiological signs can be identified for words belonging to a common semantic field.

The study of the cerebral organization of speech using analysis of neuronal impulse activity (A) and PET (B) (after N.P. Bekhtereva). A. Peristimulus histograms of the impulse activity of neural populations in field 46: 1 - grammatically correct phrase; 2 - grammatically incorrect phrase; 3 - grammatically correct quasi-phrase; 4 - grammatically incorrect word-like letter string. B. Scheme of localization of significant cortical activations when comparing perception of the text with counting a given letter in a grammatically incorrect word-like letter string.

Using various psychophysiological and neurophysiological methods, researchers searched for the “standard” of a word. By this they meant a specific pattern of interaction of impulse activity between different zones of the cerebral cortex that characterizes the perception of that word. Such patterns were found, but they show considerable interindividual variability, possibly due to individual differences in the semantic coding of words. Computer analysis made it possible to reveal expanded and compressed (minimized) forms of these word “standards” in the impulse activity of neural populations. It was shown that in the analysis of the acoustic, semantic and motor characteristics of perceived and reproduced words, different brain zones specialize in different speech operations (Bekhtereva et al., 1985).

Spatial synchronization of biopotentials. The neurophysiological basis of speech functions has also been studied at the level of the brain's macropotentials, in particular using the method of spatial synchronization. Spatial synchronization of individual brain regions is considered the neurophysiological basis of the systemic interactions that support speech activity. This method makes it possible to assess how the involvement of various cortical areas in the speech process changes over time. For example, the earliest stages of word perception and recognition are associated with a shift of activation zones. First, the frontal, central and temporal zones of the left hemisphere are most activated, as well as the posterior and central areas of the right hemisphere. Then the activation focus moves to the occipital regions, while persisting in the right lateral and anterior temporal areas. Processing of the word is mainly associated with activation of the left temporal and, partially, the right temporal zones of the cortex. Preparation for articulation and silent pronunciation of the word are accompanied by increased activation of the frontocentral areas, which appear to be decisive for the articulation process.

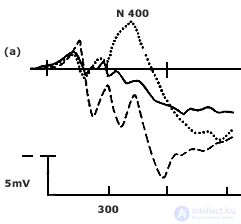

Evoked potentials. The method of recording evoked, or event-related, potentials opens up additional opportunities for studying the brain mechanisms of speech. For example, when emotionally significant and neutral words were used as visual stimuli, some general regularities in the analysis of verbal stimuli were revealed. A comparison of the latency of the late P300 component to verbal stimuli showed that information processing is faster in the right hemisphere than in the left. It is assumed that the right hemisphere first performs a visual-spatial, pre-semantic analysis of verbal stimuli: figuratively speaking, the letters are read without being understood (see section 8.4). The transfer of the results to the left, “speech” hemisphere is the next stage in the perception of verbal stimuli: comprehension of what has been read. Faster processing in the right hemisphere thus ensures sequential, temporally coordinated processing of verbal information. This processing begins in the right hemisphere with an analysis of the physical features of individual letters. It then continues in the left hemisphere, where semantic analysis of the word takes place.

The waveform of the EP changes significantly depending on the semantic meaning of the word. The same word can receive different interpretations depending on context, for example “fire” in “sit by the fire” and in “get ready, fire!”. It has been established that in such cases the EP configuration differs, and these differences are much more pronounced in the left hemisphere.

Among the information-related components, a special place belongs to the negative N400 (N4) component, which begins after about 250 ms and peaks at about 400 ms. Functionally, this component is considered an indicator of lexical decision. In some experiments the stimuli were sentences whose last word created a semantic incongruity or a logical violation. The greater the degree of mismatch, the larger this negative deflection was. Evidently, the N4 wave reflects the interruption of sentence processing caused by an incorrect ending and an attempt to re-evaluate the information.

Event-related potentials to sentence-final words (after M. Kutas & S. Hillyard, 1980). Three types of responses to the sentence-final word are shown: 1) a word congruent with the meaning of the sentence ("he spread the bread with butter") (solid line); 2) a word incongruent with the meaning of the sentence ("he spread the bread with socks") (dotted line); 3) a word with the same meaning as in the first case but a different typeface ("he spread the bread with BUTTER") (dashed line).
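Measuring a component such as N400 typically comes down to locating the most negative point of the averaged waveform within a latency window. A minimal sketch of that step on synthetic data follows. The following are all illustrative assumptions, not values from Kutas and Hillyard or any other study cited here:

- the 250-600 ms search window;
- the Gaussian waveform shapes;
- the two N400 amplitudes, standing in for a congruent and an incongruent sentence ending.

```python
import math
from typing import List, Sequence, Tuple


def synthetic_erp(times_ms: Sequence[int], n400_amplitude_uv: float) -> List[float]:
    """Hypothetical averaged ERP in microvolts: a positive bump near 200 ms
    plus a negative, N400-like deflection peaking at 400 ms."""
    return [
        3.0 * math.exp(-((t - 200) / 40) ** 2)
        - n400_amplitude_uv * math.exp(-((t - 400) / 80) ** 2)
        for t in times_ms
    ]


def negative_peak(times_ms: Sequence[int], erp_uv: Sequence[float],
                  window: Tuple[int, int] = (250, 600)) -> Tuple[int, float]:
    """Return (latency_ms, amplitude_uv) of the most negative point in the window."""
    in_window = [(v, t) for t, v in zip(times_ms, erp_uv)
                 if window[0] <= t <= window[1]]
    amplitude, latency = min(in_window)
    return latency, amplitude


times = list(range(0, 801))  # 0-800 ms, one sample per millisecond
for label, size in (("congruent ending", 1.0), ("incongruent ending", 5.0)):
    latency, amplitude = negative_peak(times, synthetic_erp(times, size))
    print(label, latency, round(amplitude, 2))
# congruent ending 400 -1.0
# incongruent ending 400 -5.0
```

Real recordings would first be averaged over many trials and baseline-corrected. Peak picking is also only one of several possible measures; mean amplitude within the window is a common alternative.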

This, however, is not the only linguistic task in electrophysiological studies in which the negative information-related N4 component has been found. A similar component was recorded in tasks that required distinguishing semantic classes or word sets, or deciding whether a given word belongs to a certain semantic category. Word and picture naming, lexical decision and lexical judgment tasks are all accompanied by a well-defined negative deflection with a latency of approximately 400 ms. There is also evidence that this component is recorded when the degree of match or mismatch between words must be assessed by physical as well as semantic characteristics. Apparently, the N4 components as a group reflect the analysis and evaluation of linguistic stimuli across different experimental tasks.

Thus, electrophysiological methods have established a number of general regularities in the spatiotemporal organization of neuronal ensembles and in the dynamics of bioelectric activity. These regularities accompany the perception, processing and reproduction of speech signals in humans.
