Lecture

For distributed memory systems, MPI — the Message Passing Interface — is widely used.

Other options are also used, such as PVM (Parallel Virtual Machine) or SHMEM.

MPI is based on the message passing mechanism.

MPI is a common environment for creating and running parallel programs.

Message passing

Method of transferring data from the memory of one processor to the memory of another

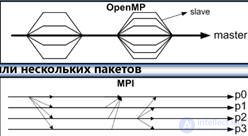

Data is sent as packets.

A message may consist of one or several packets.

Packets usually contain routing and control information.

Process:

A set of executable instructions (a program)

The processor can run one or more processes.

Processes exchange information only via messages.

To improve performance, it is desirable to run each process on a separate processor.

On a multi-core PC, processes can run on separate cores.

Message Passing Library

A collection of functions used by the program

Designed to send, receive, and process messages

Provides the execution environment for the parallel program

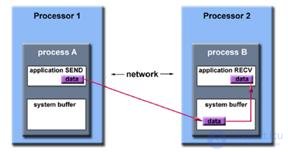

Send / receive

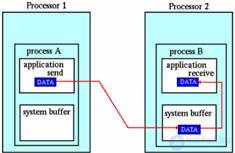

Transferring data requires the cooperation of two processes, the sender and the receiver.

The sender specifies the location, size, and type of the data, and the receiver.

The receiver must post a receive that matches the transmitted data.

Synchronous / Asynchronous

The synchronous transfer is completed only after the receiver confirms receipt of the message.

An asynchronous transfer is performed without acknowledgment (less reliable).

Application buffer

Address space containing the data to be transmitted or received (for example, the memory area that stores some variable)

System buffer

System memory for storing messages

Depending on the type of message, the data can be copied from the application buffer to the system buffer and vice versa.

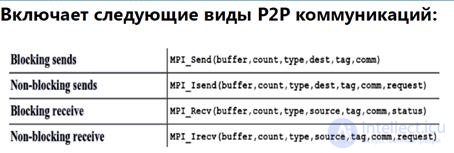

Blocking communication

The function returns only after a certain event has occurred.

Non-blocking communication

The function returns without waiting for any communication event.

Reusing or modifying the application buffer after starting a non-blocking transfer, before the operation is known to have completed, is DANGEROUS!
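The danger described above can be illustrated with a short sketch. It assumes the program is started with at least two processes and uses `MPI_Isend`, `MPI_Irecv`, and `MPI_Wait`, which these notes do not name; the value 42 is arbitrary. The buffer passed to a non-blocking call is left alone until `MPI_Wait` reports that the operation has completed.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char *argv[]) {
    int rank, size, value = 0;
    MPI_Request req;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Comm_size(MPI_COMM_WORLD, &size);

    if (size >= 2 && rank == 0) {
        value = 42;
        /* Returns immediately; the transfer may still be in progress. */
        MPI_Isend(&value, 1, MPI_INT, 1, 0, MPI_COMM_WORLD, &req);
        /* value must not be modified here: MPI may still be reading it. */
        MPI_Wait(&req, MPI_STATUS_IGNORE);
        value = 0; /* safe: the send operation has completed */
    } else if (size >= 2 && rank == 1) {
        MPI_Irecv(&value, 1, MPI_INT, 0, 0, MPI_COMM_WORLD, &req);
        /* value must not be read here: the data may not have arrived yet. */
        MPI_Wait(&req, MPI_STATUS_IGNORE);
        printf("process 1 received %d\n", value);
    }

    MPI_Finalize();
    return 0;
}
```

Useful computation that does not touch `value` can be placed between the non-blocking call and `MPI_Wait`; that overlap is the usual reason for choosing non-blocking communication.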

Communicators and groups

Special objects that determine which processes can exchange data.

A process may be part of a group.

A group communicates using a communicator.

Within a group, each process gets a unique number (its identifier).

A process can belong to several groups (with a different identifier in each!).

Messages sent in different communicators do not interfere with each other

MPI supports two programming styles:

MIMD - Multiple Instructions Multiple Data

• Processes execute different program code

• Very difficult to write and debug

• Difficult to synchronize and manage the interaction of processes

SPMD - Single Program Multiple Data

• The most common option

• All processes run the same program code

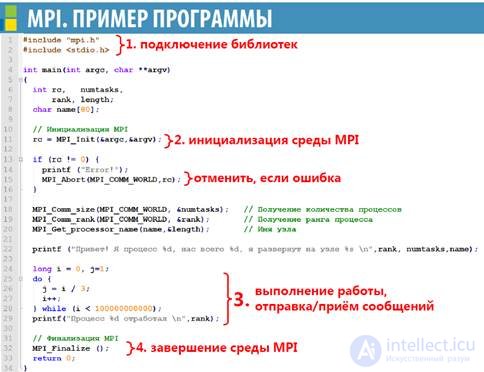

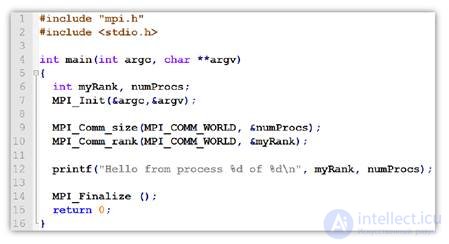

MPI is both a collection of libraries and a runtime environment.

Compiling and linking with the mpicc script:

mpicc MPI_Hello.c -o MPI_Hello

Running the program with 5 processes:

mpiexec -n 5 MPI_Hello

Host addresses are specified with the parameters "-hosts", "-nodes", or "-hostfile" (the available options and their exact syntax depend on the MPI implementation):

mpiexec -hosts 10.2.12.5 10.2.12.6
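The file name `MPI_Hello.c` comes from the compile command above, but its contents are not shown in these notes. The following is only a plausible minimal sketch of such a program in the SPMD style: every process runs the same code and tells itself apart from the others by its number.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char *argv[]) {
    int rank, size;

    MPI_Init(&argc, &argv);               /* start the MPI runtime */
    MPI_Comm_rank(MPI_COMM_WORLD, &rank); /* number of this process in the communicator */
    MPI_Comm_size(MPI_COMM_WORLD, &size); /* total number of processes */

    printf("Hello from process %d of %d\n", rank, size);

    MPI_Finalize();                       /* shut the MPI runtime down */
    return 0;
}
```

When started with 5 processes, each process prints one line; the order of the lines is not fixed, because the processes run independently.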

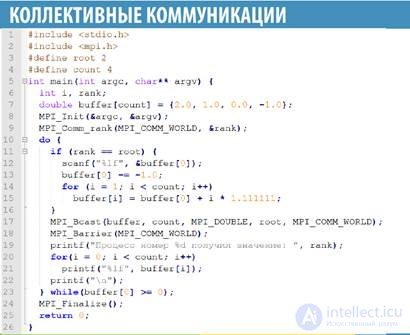

Collective communication: the set of MPI functions for exchanging data within a group of processes

Properties:

All processes of the communicator take part in the operation.

A collective function performs both sending and receiving at the same time.

The values of all parameters (except the buffer address) must match in all processes.

The functions include:

MPI_Bcast() - broadcasts a message to all processes

MPI_Scatter() - distributes data among the processes

MPI_Gather() - collects data from all processes into one process

MPI_Allgather() - collects data from all processes into every process

MPI_Barrier() - synchronization point

MPI_Bcast(*buffer, count, datatype, root, comm)

buffer - data buffer

count - number of elements in the buffer

datatype - data type of the elements

root - number of the process that is the source of the data

comm - communicator

MPI_Barrier(comm)

Suspends the calling process until all processes of the group have reached the barrier (the synchronization point).

The processes wait for each other.
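A minimal sketch of the two calls described above. The array length of 4 and its values are arbitrary choices for illustration. Every process makes the same `MPI_Bcast` call; only the contents of the buffer before the call differ.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char *argv[]) {
    int rank;
    int data[4] = {0, 0, 0, 0};

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);

    if (rank == 0) {
        /* Only the root process holds the real data before the broadcast. */
        for (int i = 0; i < 4; i++) data[i] = (i + 1) * 10;
    }

    /* Same call in every process; root = 0 is the source of the data. */
    MPI_Bcast(data, 4, MPI_INT, 0, MPI_COMM_WORLD);

    printf("process %d has %d %d %d %d\n",
           rank, data[0], data[1], data[2], data[3]);

    /* No process continues past this line until all have reached it. */
    MPI_Barrier(MPI_COMM_WORLD);

    MPI_Finalize();
    return 0;
}
```

The barrier synchronizes the processes themselves; it does not by itself guarantee the order in which their output appears in the terminal.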

MPI_Scatter(*sendbuf, sendcnt, sendtype, *recvbuf, recvcnt, recvtype, root, comm)

Splits the array in the source process into equal parts and sends one part to the receive buffer of each process.

sendbuf - send buffer holding the array to be distributed

sendcnt - number of elements sent to each process

sendtype - type of the data sent

recvbuf - receive buffer

recvcnt - number of elements received by each process

recvtype - type of the data received

root - number of the process that sends the data

comm - communicator

MPI_Gather(*sendbuf, sendcnt, sendtype, *recvbuf, recvcnt, recvtype, root, comm)

Collects data from the send buffers of all processes into the receive buffer of the collecting process.

sendbuf - send buffer holding the data to be collected

sendcnt - number of elements sent by each process

sendtype - type of the data sent

recvbuf - receive buffer in which the data is collected

recvcnt - number of elements received from each process

recvtype - type of the data received

root - number of the collecting process

comm - communicator
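The two functions are often used as a pair. The sketch below assumes a hypothetical chunk size of 3 elements per process (`CHUNK`), and doubling each element stands in for real work. Process 0 distributes an array, every process transforms its own part, and process 0 collects the results.

```c
#include <mpi.h>
#include <stdio.h>
#include <stdlib.h>

#define CHUNK 3 /* elements per process: an assumption for this sketch */

int main(int argc, char *argv[]) {
    int rank, size;
    int *all = NULL; /* used only in process 0 */
    int part[CHUNK];

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Comm_size(MPI_COMM_WORLD, &size);

    if (rank == 0) {
        all = malloc((size_t)size * CHUNK * sizeof *all);
        if (all == NULL) MPI_Abort(MPI_COMM_WORLD, 1);
        for (int i = 0; i < size * CHUNK; i++) all[i] = i;
    }

    /* sendcnt and recvcnt are counts per process, not the total length. */
    MPI_Scatter(all, CHUNK, MPI_INT, part, CHUNK, MPI_INT, 0, MPI_COMM_WORLD);

    for (int i = 0; i < CHUNK; i++) part[i] *= 2; /* local work */

    MPI_Gather(part, CHUNK, MPI_INT, all, CHUNK, MPI_INT, 0, MPI_COMM_WORLD);

    if (rank == 0) {
        for (int i = 0; i < size * CHUNK; i++) printf("%d ", all[i]);
        printf("\n");
        free(all);
    }

    MPI_Finalize();
    return 0;
}
```

The send buffer of `MPI_Scatter` and the receive buffer of `MPI_Gather` are significant only in the root process, which is why the other processes can pass `NULL` for `all`.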

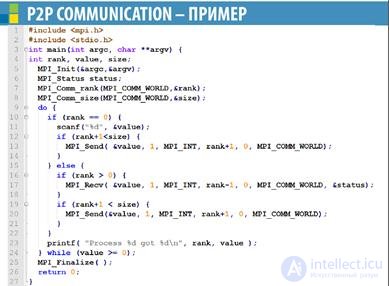

Point-to-point communication: the set of MPI functions for exchanging data between two individual processes

One process calls the send function and the other calls the receive function.

In essence:

Task 1 (process 0) sends:

int buf[10];

MPI_Send(buf, 5, MPI_INT, 1, 0, MPI_COMM_WORLD);

Task 2 (process 1) receives:

int buf[10];

MPI_Status status;

MPI_Recv(buf, 10, MPI_INT, 0, 0, MPI_COMM_WORLD, &status);
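The two fragments above can be combined into one SPMD program in which the process number selects the branch. This is a sketch under the assumption that the program is started with at least two processes. The count of 10 in `MPI_Recv` is the capacity of the receive buffer; `MPI_Get_count`, which the notes do not mention, reports how many elements actually arrived.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char *argv[]) {
    int rank, size;
    int buf[10] = {0};

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Comm_size(MPI_COMM_WORLD, &size);

    if (size < 2) {
        if (rank == 0) fprintf(stderr, "run with at least 2 processes\n");
    } else if (rank == 0) {
        for (int i = 0; i < 5; i++) buf[i] = i + 1;
        /* Send 5 ints to process 1 with tag 0. */
        MPI_Send(buf, 5, MPI_INT, 1, 0, MPI_COMM_WORLD);
    } else if (rank == 1) {
        MPI_Status status;
        int received;
        /* Accept up to 10 ints from process 0 with tag 0. */
        MPI_Recv(buf, 10, MPI_INT, 0, 0, MPI_COMM_WORLD, &status);
        MPI_Get_count(&status, MPI_INT, &received);
        printf("process 1 received %d elements from process %d\n",
               received, status.MPI_SOURCE);
    }

    MPI_Finalize();
    return 0;
}
```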

Create an application of N processes.

In process 0, create a large array and fill it with data.

Send a part of the array to each process.

In each process, sort the local array in ascending order.

In process 0, copy the local array to the output array.

In process 0, receive the local arrays from the other processes one at a time and merge each with the output array.
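The steps above could be sketched as follows. This is one possible arrangement, not the lecture's own solution: `PER_PROC`, `cmp_double`, and `merge_sorted` are hypothetical names, the array is kept small so the output is readable, and `MPI_Scatter` is assumed as the way to hand out the parts.

```c
#include <mpi.h>
#include <stdio.h>
#include <stdlib.h>

#define PER_PROC 4 /* elements per process: an assumption for this sketch */

static int cmp_double(const void *a, const void *b) {
    double x = *(const double *)a, y = *(const double *)b;
    return (x > y) - (x < y);
}

/* Merge sorted a[0..na) and b[0..nb) into a newly allocated sorted array. */
static double *merge_sorted(const double *a, int na, const double *b, int nb) {
    double *out = malloc((size_t)(na + nb) * sizeof *out);
    int i = 0, j = 0, k = 0;
    if (out == NULL) return NULL;
    while (i < na && j < nb) out[k++] = (a[i] <= b[j]) ? a[i++] : b[j++];
    while (i < na) out[k++] = a[i++];
    while (j < nb) out[k++] = b[j++];
    return out;
}

int main(int argc, char *argv[]) {
    int rank, size;
    double *all = NULL, local[PER_PROC];

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Comm_size(MPI_COMM_WORLD, &size);

    if (rank == 0) { /* create and fill the full array in process 0 */
        all = malloc((size_t)size * PER_PROC * sizeof *all);
        if (all == NULL) MPI_Abort(MPI_COMM_WORLD, 1);
        srand(1);
        for (int i = 0; i < size * PER_PROC; i++) all[i] = rand() % 100;
    }

    /* Hand one part to each process, then sort the parts in parallel. */
    MPI_Scatter(all, PER_PROC, MPI_DOUBLE, local, PER_PROC, MPI_DOUBLE,
                0, MPI_COMM_WORLD);
    qsort(local, PER_PROC, sizeof local[0], cmp_double);

    if (rank != 0) {
        MPI_Send(local, PER_PROC, MPI_DOUBLE, 0, 0, MPI_COMM_WORLD);
    } else {
        int n = PER_PROC;
        double part[PER_PROC];
        double *out = malloc(PER_PROC * sizeof *out);
        if (out == NULL) MPI_Abort(MPI_COMM_WORLD, 1);
        for (int i = 0; i < PER_PROC; i++) out[i] = local[i];

        /* Take the sorted parts one at a time and merge them into out. */
        for (int src = 1; src < size; src++) {
            MPI_Recv(part, PER_PROC, MPI_DOUBLE, src, 0, MPI_COMM_WORLD,
                     MPI_STATUS_IGNORE);
            double *merged = merge_sorted(out, n, part, PER_PROC);
            if (merged == NULL) MPI_Abort(MPI_COMM_WORLD, 1);
            free(out);
            out = merged;
            n += PER_PROC;
        }
        for (int i = 0; i < n; i++) printf("%g ", out[i]);
        printf("\n");
        free(out);
        free(all);
    }

    MPI_Finalize();
    return 0;
}
```

Merging in process 0 is sequential, so with many processes it becomes the bottleneck; the sketch keeps it that way because it follows the steps as listed.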

Helper functions:

• Creating a buffer and filling it with numbers

• Sorting a given buffer in ascending order

• Printing the buffer elements in the terminal window

• Merging two sorted arrays into one in ascending order

Function prototypes

double *generate1(int);

double *processIt1(double *, int);

void showIt1(double *, int);

double *merge(double *arr1, double *arr2, int l1, int l2);
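The notes give only the prototypes. The bodies below are a sketch of one way they could be implemented, under these assumptions: `generate1` creates and fills the buffer, `processIt1` sorts it in place and returns the same pointer, `showIt1` prints it, and `merge` returns a newly allocated array that the caller frees. The value range and the helper `cmp_double` are illustrative choices.

```c
#include <stdio.h>
#include <stdlib.h>

/* Allocate n doubles and fill them with pseudo-random values in [0, 100). */
double *generate1(int n) {
    double *buf = malloc((size_t)n * sizeof *buf);
    if (buf == NULL) return NULL;
    for (int i = 0; i < n; i++) buf[i] = rand() % 100;
    return buf;
}

/* Comparison callback for qsort: ascending order of doubles. */
static int cmp_double(const void *a, const void *b) {
    double x = *(const double *)a, y = *(const double *)b;
    return (x > y) - (x < y);
}

/* Sort buf in place in ascending order and return it. */
double *processIt1(double *buf, int n) {
    qsort(buf, (size_t)n, sizeof *buf, cmp_double);
    return buf;
}

/* Print the n elements of buf on one line. */
void showIt1(double *buf, int n) {
    for (int i = 0; i < n; i++) printf("%g ", buf[i]);
    printf("\n");
}

/* Merge two ascending arrays into a new ascending array of l1 + l2 elements. */
double *merge(double *arr1, double *arr2, int l1, int l2) {
    double *out = malloc((size_t)(l1 + l2) * sizeof *out);
    int i = 0, j = 0, k = 0;
    if (out == NULL) return NULL;
    while (i < l1 && j < l2) out[k++] = (arr1[i] <= arr2[j]) ? arr1[i++] : arr2[j++];
    while (i < l1) out[k++] = arr1[i++]; /* remainder of arr1 */
    while (j < l2) out[k++] = arr2[j++]; /* remainder of arr2 */
    return out;
}
```

A typical call sequence would be `double *a = processIt1(generate1(n), n);` followed by `showIt1(a, n);`, with `merge` used in process 0 to combine the sorted parts.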
