Lecture

Background

By the end of the 1960s, integrated circuits were widely used in electronic systems. At first, the processors of large computers were built from several integrated circuits. However, the number of transistors on a single chip (the circuit's degree of integration) grew steadily and reached several thousand. In line with the empirical rule formulated by Gordon Moore in 1965, the density of elements on a chip doubled roughly every year and a half. It became clear that the day was not far off when an entire processor could be placed on a single chip.

The first to succeed was Intel, then a small American company. While working on a calculator project in 1971, Intel engineers managed to build a processor on a single chip. The new 4-bit microprocessor, designated 4004, was far too limited to serve as the processor of a mainframe, but Intel kept developing the idea and a year later released the 8-bit 8008 chip, which had quite respectable characteristics. In 1974 came the famous Intel-8080, which became the basis of many microcomputers, including the Altair-8800, the first commercially successful microcomputer.

Intel's competitors were not idle either: at about the same time Motorola, National Semiconductor and MOS Technology brought their own single-chip microprocessors to market. Thus began the era of microcomputers.

Apple I

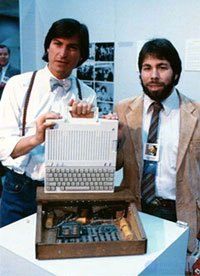

Steve Jobs and Steve Wozniak

The first microcomputers available to hobbyists, such as the Altair-8800, were simply kits of parts that the buyer still had to know how to assemble. As a result, in the mid-1970s many computer clubs sprang up in the USA where enthusiasts could exchange information or get advice on building their own microcomputer. Some engineers wanted not so much to assemble a microcomputer from ready-made parts by following the instructions as to design their own. The industry was in its infancy, there were no standards, and any idea or design had a right to exist.

One such enthusiast was Steve Wozniak, a 26-year-old engineer at Hewlett-Packard in Palo Alto, in California's Silicon Valley. He had drawn up more than one design for his own computer on paper and had even written several FORTRAN compilers and BASIC interpreters. However, a lack of money kept him from building it. The Intel-8080 and Motorola-6800, considered the best microprocessors at the time, were beyond Wozniak's means. Still, he found a suitable processor at an affordable price: the 6502 chip from MOS Technology, whose instruction set resembled that of the Motorola-6800.

One drawback of the first microcomputers was their very primitive user interface, which made it hard for the operator to communicate with the machine. The Altair-8800, for example, had no monitor or keyboard, let alone a mouse. Data had to be entered with switches on the front panel, and results were shown on LED indicators. Later, following the example of large machines, users began to connect a teletype, a telegraph device with a keyboard. Clearly, working with such a computer demanded a lot of training from the user, so it remained the preserve of a few enthusiasts.

Wozniak revolutionized the microcomputer interface by using, for the first time, a typewriter-style keyboard for input and an ordinary television set for output. Text was displayed on the screen in 24 lines of 40 characters each; there was no graphics mode. The computer came with 4 kilobytes of memory, expandable to 8 kilobytes, and Wozniak's BASIC, loaded from cassette, occupied about 4 kilobytes of it. Compared with the Altair-8800, which had only 256 bytes of memory in its basic configuration, this was significant progress. In addition, Wozniak gave his computer an expansion slot (connector) for attaching additional devices.

By early 1976 the computer was finished, and Wozniak brought it to the Homebrew Computer Club to show it to his fellow enthusiasts. Most club members, however, were unimpressed and criticized the machine for using a non-Intel microprocessor. But Wozniak's friend, 21-year-old Steve Jobs, immediately saw that the computer had a great future. Unlike Wozniak, an engineer to the bone, Jobs had a strong entrepreneurial streak. He suggested that they start a company to produce the computer in quantity and sell it by mail order as a kit. Wozniak agreed, and on April 1, 1976 (April Fools' Day), the Apple Computer Company was founded; it was officially incorporated almost a year later, in January 1977. The new computer was named the Apple I.

A buyer turned up at once. Paul Terrell, owner of the computer store The Byte Shop, agreed to buy a batch of 50 computers from the new company at $500 each, on the condition that all of them be fully assembled and ready to use. The young company did not have the money to buy components for the whole batch, but Jobs managed to obtain a one-month loan of $15,000. It was a big risk, but it paid off: Wozniak and Jobs assembled and tested all the computers in the garage of Jobs' parents, and Terrell kept his word and bought the entire batch for his store. In all, about two hundred Apple I computers were sold over some ten months at a price of $666.66. Today every surviving unit is a collector's item worth far more than the $666.66 paid for it in 1976.

Apple II

While the Apple I was slowly selling in Terrell's shop, Wozniak was already working on the next version, the Apple II. In it he tried to fix many of the first model's shortcomings: the computer gained color and a graphics mode, sound, more memory, eight expansion slots instead of one, and a cassette tape recorder for saving programs. Finally, the Apple II was housed in an elegant plastic case.

Mass production of the Apple II required funding, and a considerable amount of it. At first Jobs and Wozniak tried to interest the companies they had previously worked for, Atari and Hewlett-Packard, in producing their computer, but to no avail. At the time, most people saw the personal computer as merely an amusing toy of very doubtful commercial value. However, Jobs managed to win over the entrepreneur Mike Markkula, who invested 90 thousand dollars of his own savings in the young company and also introduced Jobs to more serious investors.

The first Apple II model, like the Apple I, was built around the MOS Technology 6502 microprocessor with a clock frequency of 1 megahertz. Its read-only memory contained a BASIC that worked only with integer data. The 4 KB of RAM could easily be expanded to 48 KB. Output went to a color or black-and-white television using NTSC, the US standard. Text mode showed 24 lines of 40 characters each, and the graphics mode had a resolution of 280 by 192 pixels (six colors).

The Apple II was first shown at the First West Coast Computer Faire in April 1977. By then the famous Apple logo, a bitten rainbow-colored apple, had been designed for Jobs by the young artist Rob Janoff. Apple's booth at the fair drew attention and impressed specialists, first because it was located at the entrance and was visible to everyone who came in, and second thanks to a kaleidoscopic video show that demonstrated the new computer's excellent graphics on a large screen.

Apple's competitors were the new PET computers from Commodore (based on the 6502 processor) and the TRS-80 from Radio Shack (based on the Z-80 processor). Like the Apple II, these models belong to the second generation of microcomputers: they no longer bore the marks of amateur design and came fully assembled and ready to use. The competitors stood out for their low price (about $600) and completeness: a monitor and a cassette drive were included. The Apple II, without a monitor or cassette drive and with the minimum 4 KB of memory, cost twice as much, $1,298. But the Apple II's strength lay in its expandability: users could increase the RAM to 48 KB themselves and use eight slots to connect additional devices. In addition, its color graphics made the Apple II an excellent gaming platform. Indeed, soon after its appearance, writing games for the Apple II became a serious business for individual programmers and small start-ups alike.

The Apple II became the first computer to be popular among people of many professions. Its owners needed no serious knowledge of microelectronics and no skill with a soldering iron. Knowledge of programming languages was not required either: a small number of simple commands were enough to work with the Apple II. As a result, scientists and businessmen, doctors and lawyers, schoolchildren and housewives could use the computer. Later, many so-called 8-bit home computers from various manufacturers essentially repeated the concept Steve Wozniak first introduced in the Apple II: a built-in keyboard, BASIC in ROM, a tape drive, and output to a television.

However, one problem stood in the way of using the Apple II in business: an imperfect way of storing information. Cassettes and household tape recorders were a constant headache for many early microcomputer users. No businessman would put up with frequent failures and repeated, lengthy loading of programs from tape. A more elegant solution was needed, and one existed: floppy disk drives had long been used with mainframes and minicomputers, and it only remained to find a way to use a drive with a microcomputer. In December 1977, when Mike Markkula drew up the list of improvements to be made in the coming year, "floppy disk" was at the top. In July 1978, the Disk II drive with its controller went on sale at a price of $495. Many experts later acknowledged that developing a floppy disk drive for the Apple was strategically no less important than creating the computer itself. The drive expanded the capabilities of the Apple II so much that, in this configuration, the machine can fully be assigned to the third generation of microcomputers.

The built-in ROM BASIC could not manage a complex file system on floppy disks, so the Apple DOS disk operating system was developed for Apple II machines with a disk drive; its first released version was already numbered 3.1. In 1979 an improved model appeared under the name Apple II Plus, and the computer became suitable for business use. Businessmen now had a powerful tool for running their business, storing information and supporting decisions. It was at this time that the software categories so familiar to us today began to take shape: text editors, database management systems, and personal information managers (organizers).

In 1979, MIT graduates Dan Bricklin and Bob Frankston created VisiCalc, the world's first spreadsheet program. It was ideally suited to accounting calculations. Its first version was written for the Apple II and caused explosive growth in sales of these computers: many people bought an Apple solely to run VisiCalc. It was, in effect, the first time that a program sold a computer.

Thus, in just a few years, the microcomputer evolved from a toy for electronics engineers and programmers into a business tool for people of many professions. Computers spread around the world in millions of units and took their place on the desks of engineers, doctors, businessmen, scientists, teachers and schoolchildren. Apple was only one of many pioneers of the microcomputer industry, but thanks to the genius of its founders, Steve Jobs and Steve Wozniak, it managed to rise above the general amateur level of the field, anticipate the directions in which microcomputers would develop, and offer the right solutions at the right time.

IBM PC vs Apple

The explosive growth of the microcomputer industry finally attracted the attention of America's largest computer corporations: IBM, DEC, Hewlett-Packard and others. The giants also decided to claim a share of the new pie. While the first DEC and Hewlett-Packard microcomputers were unlucky and the market rejected them, the IBM PC, which appeared in 1981, enjoyed unprecedented success. The IBM personal computer became the de facto industry standard and within a few years pushed almost all competing models off the market. This was not due to any outstanding capabilities of the IBM PC, which did not stand out among its competitors. Rather, the unquestioned authority of "Big Blue" played the decisive role.

It seemed that Apple's days were numbered, especially since its new model, the Apple III, which might have competed with the IBM PC, had failed because of a number of design flaws. In those years, many reputable companies were forced to abandon their own original computers in favor of producing IBM-compatible models, which were in demand, that is, copies of someone else's computer. But not Apple. To survive, it needed an outstanding, unconventional solution, and it found one: the graphical user interface. In 1984 the Apple Macintosh was born, the first commercially successful computer controlled with a mouse.

In fairness, the graphical interface was invented not by Apple but by engineers at the Xerox research center in Palo Alto (Xerox PARC). Around 1980, both Steve Jobs and Bill Gates visited the center and saw its work. But the visits led to different results: Jobs created the Macintosh, while Gates began developing the graphical operating system Windows, the first version of which appeared in 1985. Only ten years later, with the release of Windows 95, did this operating system match the capabilities that the Macintosh system software had offered since 1984.

Thanks to the advantages of the Macintosh, Apple was able to stay in the personal computer market, although not among the leaders. For some time it supported both of its computer lines: the 8-bit Apple II, based on the MOS Technology 6502 processor and its variants, and the 16-bit (later 32-bit) Apple Macintosh, based on the Motorola 68000 processor and its successors. After the failure of the Apple III, the company turned to improving its predecessor, and during the 1980s the Apple IIe, Apple IIc and Apple IIGS appeared in succession. Demand for the 8-bit models remained high. First, the Apple II was an excellent gaming platform and was bought as a home computer. Second, thanks to a sensible marketing policy, the company won a strong position in education: by the mid-1980s, more than half of American schools were equipped with Apple II computers. Production of the Apple II family ended only in 1993, but many machines are still kept by their owners and some even continue to work. The Apple II marked an entire era in the history of computing and has long since become a kind of "cult computer."

The Macintosh, for its part, won a place in the sun in fields that demand its outstanding graphics capabilities. The vast majority of publishers in the world still use Apple equipment, and the Macintosh is considered unmatched for page layout, image processing and other prepress work. It is no secret that almost all serious graphics and layout programs were originally created for the Macintosh and only later ported to Windows. The Macintosh also remains strong in education.

According to various sources, Apple currently holds 8-10% of the global personal computer market. Most Macintosh computers are owned by users in the USA. In Russia, Apple's share is much lower and is generally estimated at no more than a few percent. Apple has always focused largely on its domestic market and paid much less attention to foreign ones.

Of all the many firms that started the microcomputer revolution a quarter of a century ago, only Apple has survived; the rest either went bankrupt or were absorbed by more successful competitors. And throughout this time Apple has stayed on the crest of technical progress. Will the word "Apple" continue to be synonymous with technical excellence? As they say, time will tell.
