Lecture

Web parsing (or scraping) is the extraction of data from a third-party website by downloading the site's HTML code and analyzing it to obtain the necessary data.

But shouldn't you use an API for this?

Not every website offers an API, and an API does not always expose all the necessary information. So parsing is often the only way to get data from a site.

There are many reasons for parsing.

Many people, including researchers, need to build datasets, and for them parsing is the only available solution.

So what's the problem?

The main problem is that most websites do not want to be parsed. They want to serve content only to real users in a real web browser (except for Google, of course: every site wants Google to crawl it, and Yandex too).

Therefore, when parsing, you should take care not to reveal that you are a bot. There are two main things to remember: use the right tools and imitate human behavior. In this post, we will go over the tools that hide the fact that you are parsing, and the tools sites use to block parsers.

When you launch a browser and go to a web page, it almost always means asking an HTTP server for some data. One of the easiest ways to get content from an HTTP server is a classic command-line tool such as cURL.

But keep in mind that even if you simply run curl www.google.com, Google has many ways to tell that you are a bot, for example just by looking at the HTTP headers. Headers are small pieces of information sent with every HTTP request that reaches a server, and one of them describes the client making the request: the "User-Agent" header. Just by looking at the User-Agent, Google knows that you are using cURL. If you want to learn more about headers, Wikipedia has a good article on them.
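A minimal Python sketch of the same point, using the standard library instead of cURL (no request is sent): `urllib.request` also announces itself through a default User-Agent header.

```python
import urllib.request

# build_opener() returns an OpenerDirector; its `addheaders` list holds the
# headers it adds to every request it sends.
opener = urllib.request.build_opener()
default_headers = dict(opener.addheaders)

# Prints something like "Python-urllib/3.12": the library names itself,
# much as cURL sends "curl/<version>".
print(default_headers["User-agent"])
```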

Headers are easy to change with cURL, and copying the User-Agent of a legitimate browser can keep the site from noticing you. In the real world you need to set more than one header, but in general it is not hard to craft an HTTP request with cURL or any other library that looks like one sent by a browser. Everyone knows this trick, so to check whether a request is "authentic", websites test one thing that cURL and such libraries cannot fake: JavaScript (JS) execution.
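As a sketch of such a request in Python, `urllib.request.Request` accepts a dictionary of headers, much like cURL's `-A` and `-H` options. The header values below are illustrative assumptions copied from a typical desktop Chrome, not a guaranteed-undetectable set, and as noted above this does nothing against JS-based checks.

```python
import urllib.request

# Illustrative header set resembling desktop Chrome; in practice, copy the
# exact values from a real browser you control.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) "
        "Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_browser_like_request(url: str) -> urllib.request.Request:
    """Return a Request carrying browser-like headers (nothing is sent yet)."""
    return urllib.request.Request(url, headers=BROWSER_HEADERS)


req = build_browser_like_request("https://www.example.com/")
# urllib normalizes header names with str.capitalize(), hence "User-agent".
print(req.get_header("User-agent"))
# To actually fetch the page: urllib.request.urlopen(req).read()
```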

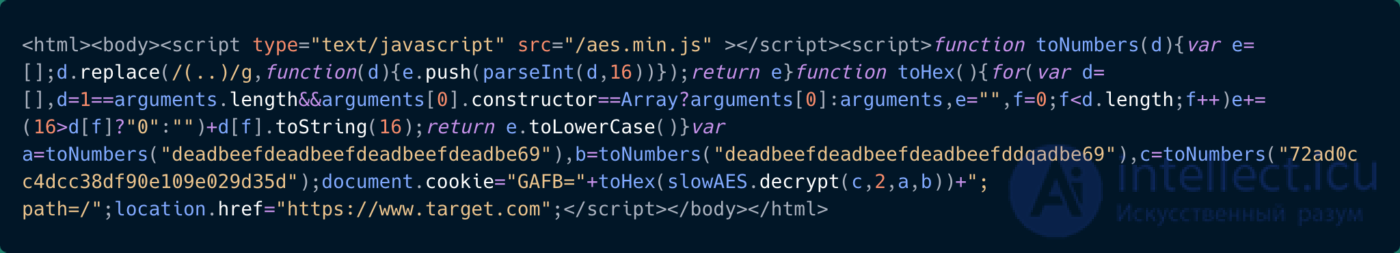

The idea is very simple: the website embeds a small JS snippet in its page which, when executed, "unlocks" the site. In a real browser you will not notice any difference; otherwise, all you get is an HTML page with some incomprehensible JS code.
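When a parser receives such a page, it helps to notice it rather than store garbage. The sketch below is a hypothetical heuristic, not a standard detection method: it flags pages with almost no visible text but some inline script. The 200-character threshold is an arbitrary assumption.

```python
from html.parser import HTMLParser


class _TextAndScriptCounter(HTMLParser):
    """Counts characters of non-script text and of inline <script> code."""

    def __init__(self) -> None:
        super().__init__()
        self.in_script = False
        self.script_chars = 0
        self.text_chars = 0

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.in_script = True

    def handle_endtag(self, tag):
        if tag == "script":
            self.in_script = False

    def handle_data(self, data):
        n = len(data.strip())
        if self.in_script:
            self.script_chars += n
        else:
            self.text_chars += n


def looks_like_js_challenge(html: str, min_text: int = 200) -> bool:
    """Hypothetical heuristic: little visible text, but some inline JS."""
    counter = _TextAndScriptCounter()
    counter.feed(html)
    counter.close()
    return counter.text_chars < min_text and counter.script_chars > 0
```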


Again, this protection against parsing is not completely reliable, mainly because of Node.js, which makes it easy to run JS outside a browser. However, the web has evolved, and there are other tricks for determining whether you are using a real browser or an imitation.

Trying to execute JS snippets on your own side, outside a browser, is genuinely hard and not at all reliable. More importantly, as soon as you hit a site with a more complex verification system, or a large single-page application, combining cURL with JS run through Node.js becomes useless. So the best way to look like a real browser is to use one.

Headless browsers behave like a real browser, except that they are easy to control programmatically. The most widely used is Headless Chrome, a Chrome mode that runs without a user interface.

The easiest way to use Headless Chrome is through a driver that wraps all of its functionality in a simple API; Selenium and Puppeteer are the two best-known solutions.
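Selenium and Puppeteer add a full control API; to keep this sketch dependency-free it calls Chrome's own command-line headless mode instead, via the `--headless` and `--dump-dom` flags, which print the DOM after the page has loaded. The binary name `google-chrome` is an assumption about your system.

```python
import subprocess
from typing import List, Optional

# Assumption: the Chrome binary is on PATH under this name. On your system it
# may be "chromium", "chrome" or a full path instead.
CHROME_BINARY = "google-chrome"


def headless_chrome_command(url: str, user_agent: Optional[str] = None) -> List[str]:
    """Build a command line that loads `url` without a UI and prints its DOM."""
    cmd = [CHROME_BINARY, "--headless", "--disable-gpu", "--dump-dom"]
    if user_agent:
        # Override the default headless User-Agent, which typically
        # contains "HeadlessChrome" and gives the browser away.
        cmd.append(f"--user-agent={user_agent}")
    cmd.append(url)
    return cmd


def fetch_rendered_html(url: str, user_agent: Optional[str] = None,
                        timeout: float = 60.0) -> str:
    """Run Chrome and return the serialized DOM after scripts have run."""
    result = subprocess.run(
        headless_chrome_command(url, user_agent),
        capture_output=True, text=True, timeout=timeout, check=True,
    )
    return result.stdout
```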

However, this is not enough either, because websites now have tools to detect headless browsers. This arms race has been going on for a long time.

Everyone, especially frontend developers, knows that every browser behaves differently: sometimes in how it renders CSS, sometimes in how it runs JS, sometimes in its internal properties. Most of these differences are well known, so a site can readily check whether it is really being visited by the browser it claims. In effect, the website asks itself: "Do all the properties and behavior of this browser match what I know about the User-Agent it sent me?"
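To illustrate the kind of question a site asks, here is a deliberately simplified, hypothetical server-side check. It assumes the site's script has already collected a few browser properties (`navigator.webdriver`, `navigator.platform`, whether `window.chrome` exists) and posted them back as a dictionary; real anti-bot systems check far more signals.

```python
from typing import Any, Dict, List


def fingerprint_inconsistencies(user_agent: str, props: Dict[str, Any]) -> List[str]:
    """Return reasons why the reported properties contradict the User-Agent.

    `props` is a hypothetical dict of values collected in the browser, e.g.
    {"webdriver": False, "platform": "Win32", "has_window_chrome": True}.
    """
    problems = []
    if "HeadlessChrome" in user_agent:
        problems.append("User-Agent advertises headless Chrome")
    if props.get("webdriver") is True:
        problems.append("navigator.webdriver is true (browser is automated)")
    platform = props.get("platform", "")
    if "Windows" in user_agent and not platform.startswith("Win"):
        problems.append(f"UA claims Windows but platform is {platform!r}")
    if "Chrome/" in user_agent and not props.get("has_window_chrome", False):
        problems.append("UA claims Chrome but window.chrome is missing")
    return problems
```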

That is why there is a constant arms race between parsers that want to pass as a real browser and websites that want to tell headless browsers apart from the rest.

However, in this arms race parsers tend to have a big advantage. Here is why.

In most cases, JavaScript code that tries to detect whether it is running in headless mode is malware trying to evade behavioral fingerprinting: it behaves well inside an automated scanning environment and maliciously in real browsers. That is why the team behind Headless Chrome is trying to make it indistinguishable from a real user's web browser, to stop malware from doing this. And parsers can take full advantage of this effort.

One more thing you need to know: running 20 cURL processes in parallel is trivial, and Headless Chrome is relatively easy to use for small projects, but it can be hard to scale. This is mainly because it uses a lot of RAM, so managing more than 20 concurrent sessions is difficult.
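One common way to keep memory under control is to cap the number of sessions running at once. A minimal sketch with a thread pool, where `MAX_SESSIONS = 4` is an arbitrary assumption and `fake_session` is a hypothetical stand-in for real headless-browser work:

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_SESSIONS = 4  # assumption: size this to the RAM you have


def run_bounded(jobs, max_parallel=MAX_SESSIONS):
    """Run zero-argument callables with at most `max_parallel` in flight.

    Results are returned in the same order as `jobs`.
    """
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        return list(pool.map(lambda job: job(), jobs))


# Demo: a stand-in for a browser session that records how many
# sessions are running at the same time.
_lock = threading.Lock()
_active = 0
peak_active = 0


def fake_session(page_id):
    def job():
        global _active, peak_active
        with _lock:
            _active += 1
            peak_active = max(peak_active, _active)
        time.sleep(0.01)  # pretend to load and render a page
        with _lock:
            _active -= 1
        return page_id
    return job


results = run_bounded([fake_session(i) for i in range(10)], max_parallel=3)
```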

(Managing many Headless Chrome instances at once is one of many problems that can be solved with the ScrapingNinja API.)

If you want to learn more about browser fingerprinting, take a look at Antoine Vastel's blog, which is devoted entirely to this topic.

That is all you need to know to make a site believe you are using a real browser. Now let's see how to behave like a real user.

A person using a browser is unlikely to request 20 pages per second from one site. So if you are going to request a large number of pages from one site, you need to make it think the requests come from different users, that is, from different IP addresses. In other words, you need to use proxies.
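A minimal sketch of spreading requests over several IPs with Python's standard library, rotating round-robin through a proxy list. The proxy addresses are placeholders from a documentation IP range; a real list comes from your provider.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints (203.0.113.0/24 is reserved for documentation).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

_proxy_cycle = itertools.cycle(PROXIES)


def next_opener():
    """Return (proxy, opener) where the opener routes HTTP(S) via the next proxy."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)


# Four requests would go out through proxies 1, 2, 3, then 1 again;
# each request itself would be sent with opener.open(url).
used = [next_opener()[0] for _ in range(4)]
```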

Proxies are not very expensive today: about $1 per IP. However, if you need to make more than 10,000 requests per day to the same website, costs can rise sharply, because you will need hundreds of addresses. Keep in mind that proxy IP addresses must be monitored constantly so that dead ones can be dropped and replaced.
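The sizing and the monitoring can both be sketched in a few lines. The per-IP budget is an assumption: with a hypothetical limit of 50 requests per IP per day, 10,000 requests need 200 IPs, or roughly $200 at about $1 per IP. The health check uses `http://example.com/` as an arbitrary test URL.

```python
import http.client
import math
import urllib.request


def proxies_needed(requests_per_day: int, max_requests_per_ip: int) -> int:
    """Number of IPs needed to keep each one under a per-IP daily budget."""
    return math.ceil(requests_per_day / max_requests_per_ip)


def http_check(proxy: str, test_url: str = "http://example.com/",
               timeout: float = 5.0) -> bool:
    """Return True if a test page can be fetched through `proxy`."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(test_url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):
        return False


def prune_dead(proxies, is_alive=http_check):
    """Keep only proxies for which the health check `is_alive(proxy)` passes."""
    return [p for p in proxies if is_alive(p)]
```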

There are several proxy providers on the market; the most widely used include Luminati Network, Blazing SEO and SmartProxy.

Of course, there are many free proxies, but I do not recommend them: they are often slow and unreliable, and the sites that publish these lists are not always transparent about where the proxies are located. Free proxies are usually public, so their IP addresses are automatically blocked by most websites. Proxy quality matters because anti-parsing services maintain internal lists of proxy IP addresses and block all traffic from them. So be careful and choose a proxy service with a good reputation. That is why I recommend using paid proxies or building your own proxy network.

To build such a network, you can use Scrapoxy, an excellent open-source tool that exposes a proxy API on top of various cloud providers. Scrapoxy creates a proxy pool by launching instances on different cloud providers (AWS, OVH, DigitalOcean). You then configure your client to use the Scrapoxy URL as its main proxy, and Scrapoxy automatically assigns a proxy from the pool. Scrapoxy is easy to customize to your needs (rate limits, blacklists, etc.), but setting it up can be a bit tedious.

Do not forget about Tor, the Onion Router. It is a worldwide network that routes traffic through many different servers to hide its origin, which makes network monitoring and traffic analysis much harder. Tor is used for many purposes: privacy, freedom of speech, protecting journalists under authoritarian regimes and, of course, illegal activities. In the context of web scraping, Tor can hide your IP address and change your bot's IP address every 10 minutes. However, the IP addresses of Tor exit nodes are public, and some websites block Tor traffic with a simple rule: if a request comes from one of the public Tor exit nodes, block it. That is why in many cases Tor loses to classic proxies. Note also that traffic through Tor is much slower because of the multi-hop routing.
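On the website side, the rule described above amounts to a set lookup. A sketch, assuming the site has already downloaded the list of exit addresses, which the Tor Project publishes as one IP per line (for example at https://check.torproject.org/torbulkexitlist); the same lookup works for the internal proxy blocklists mentioned earlier. The addresses below are documentation placeholders.

```python
import ipaddress


def load_exit_nodes(text: str) -> set:
    """Parse a list with one IP address per line, skipping blanks and comments."""
    nodes = set()
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            nodes.add(ipaddress.ip_address(line))
    return nodes


def is_tor_exit(client_ip: str, exit_nodes: set) -> bool:
    """True if the request's source address is a known Tor exit node."""
    return ipaddress.ip_address(client_ip) in exit_nodes


exit_nodes = load_exit_nodes("198.51.100.7\n198.51.100.8\n")
print(is_tor_exit("198.51.100.7", exit_nodes))  # the site would block this request
```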

But sometimes a proxy is not enough, and some websites systematically ask you to prove you are human with a so-called CAPTCHA. Most of the time, CAPTCHAs are shown only when the IP address looks suspicious, so switching proxies works in those cases. In other cases, you need a CAPTCHA-solving service (for example, 2Captcha or DeathByCaptcha).
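The strategy "switch proxy first, pay for a solver only if that fails" can be sketched as follows. All three callables are hypothetical placeholders: `fetch` downloads a page through a given proxy, `is_captcha` recognizes a CAPTCHA page, and `solve` wraps whatever solving service you use; no real service API is shown.

```python
from typing import Callable, Iterable, Optional


def fetch_with_captcha_fallback(
    url: str,
    proxies: Iterable[str],
    fetch: Callable[[str, str], str],
    is_captcha: Callable[[str], bool],
    solve: Optional[Callable[[str, str], str]] = None,
) -> str:
    """Try each proxy in turn; call the (paid) solver only as a last resort.

    `fetch(url, proxy)` returns page HTML, `is_captcha(html)` detects a
    CAPTCHA page, and `solve(url, html)` returns the unlocked page.
    """
    last_html = ""
    for proxy in proxies:
        html = fetch(url, proxy)
        if not is_captcha(html):
            return html  # a cleaner IP was enough
        last_html = html
    if solve is None:
        raise RuntimeError(f"CAPTCHA on every proxy for {url}")
    return solve(url, last_html)
```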

Keep in mind that although some CAPTCHAs can be solved automatically with optical character recognition (OCR), the most recent ones have to be solved by hand.

[Figure: an old CAPTCHA that can be solved automatically]

[Figure: Google reCAPTCHA v2]

This means that when you use the services mentioned above, there are hundreds of people on the other end of the API call solving CAPTCHAs for only 20 cents an hour.

But again, even if you solve CAPTCHAs or switch to another proxy, websites can still detect your parser.

The latest and most advanced tool websites use to detect parsers is pattern recognition. So if you plan to scrape every ID from 1 to 10000 under www.example.com/product/, try not to do it sequentially or at a constant request rate. For example, you can keep a set of the integers from 1 to 10000, randomly pick one integer from it, remove it from the set, and then parse that page.
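Drawing a random ID and removing it from the set each time is equivalent to shuffling the full list once and walking through it. A minimal sketch in Python; `scrape` in the comment is a hypothetical function:

```python
import random
from typing import List, Optional


def random_id_order(first: int, last: int, seed: Optional[int] = None) -> List[int]:
    """Return every ID in [first, last] exactly once, in random order."""
    rng = random.Random(seed)
    ids = list(range(first, last + 1))
    rng.shuffle(ids)  # same result as repeatedly drawing without replacement
    return ids


order = random_id_order(1, 10000)
# for product_id in order:
#     scrape(f"https://www.example.com/product/{product_id}")  # hypothetical
```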

And this is one of the simplest examples, because some websites compute statistics on browser fingerprints per endpoint. This means that if you do not vary some parameters of your headless browser and keep hitting the same endpoint, you can still get banned.
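One way to vary fingerprints without creating the inconsistencies described earlier is to pick a complete, internally consistent profile per session and switch profiles only between sessions. A sketch; the two profiles are illustrative assumptions, and Chrome's `--user-agent` and `--window-size` flags are one way to apply them:

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class BrowserProfile:
    user_agent: str
    accept_language: str
    window_size: Tuple[int, int]  # (width, height)


# Illustrative profiles; in practice copy each one from a real browser so
# that its values agree with each other.
PROFILES = [
    BrowserProfile(
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
        "en-US,en;q=0.9", (1920, 1080)),
    BrowserProfile(
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
        "en-GB,en;q=0.8", (1440, 900)),
]


def new_session_profile(rng=random) -> BrowserProfile:
    """Pick one profile per session and keep it for the whole session."""
    return rng.choice(PROFILES)


def chrome_flags(profile: BrowserProfile) -> List[str]:
    """Translate a profile into Chrome command-line flags."""
    width, height = profile.window_size
    return [f"--user-agent={profile.user_agent}",
            f"--window-size={width},{height}"]
```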

Websites usually track where traffic comes from, so, for example, if you want to parse a website in Brazil, try not to do it through a proxy in Vietnam.
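If your proxy provider tells you where each exit IP is located, choosing proxies in the target's country is a simple filter. A sketch with a hypothetical pool format (a URL plus an ISO 3166-1 alpha-2 country code); the addresses are documentation placeholders:

```python
from typing import Dict, List

# Hypothetical pool annotated with each exit IP's country.
POOL = [
    {"url": "http://203.0.113.20:8080", "country": "BR"},
    {"url": "http://203.0.113.21:8080", "country": "VN"},
    {"url": "http://203.0.113.22:8080", "country": "BR"},
]


def proxies_for_country(proxies: List[Dict[str, str]], country: str) -> List[Dict[str, str]]:
    """Keep only proxies whose exit IP is in `country`."""
    wanted = country.upper()
    return [p for p in proxies if p["country"] == wanted]


brazil = proxies_for_country(POOL, "br")  # for scraping a Brazilian site
```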

But from experience I can say that request rate is the most important factor in pattern recognition, so the slower you parse, the lower your chance of being detected.
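A minimal throttle that keeps the request rate low and irregular: each request waits a random interval drawn uniformly from a range. The 2 to 6 second defaults are assumptions to tune per site; the clock, sleep function and random generator are injectable so the logic can be tested without real waiting.

```python
import random
import time


class Throttle:
    """Enforce a randomized minimum gap between consecutive requests."""

    def __init__(self, min_delay=2.0, max_delay=6.0, clock=time.monotonic,
                 sleep=time.sleep, rng=None):
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.clock = clock
        self.sleep = sleep
        self.rng = rng or random.Random()
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until the next request may be sent, then record its time."""
        if self._last is not None:
            gap = self.rng.uniform(self.min_delay, self.max_delay)
            remaining = self._last + gap - self.clock()
            if remaining > 0:
                self.sleep(remaining)
        self._last = self.clock()


# Usage: call throttle.wait() right before every request.
throttle = Throttle()
```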

We hope this overview helps you better understand web parsing and that you learned something new from this post.
