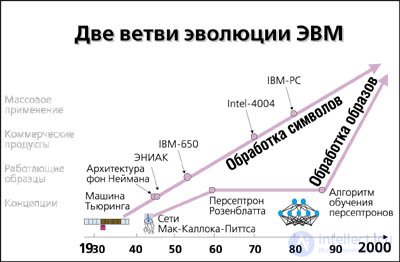

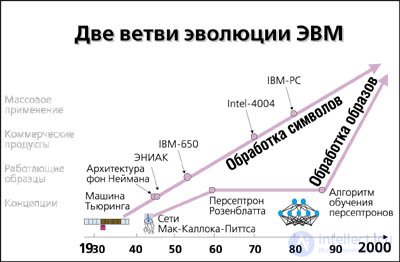

Two branches of computer evolution

Two basic computer architectures, sequential processing of symbols under a given program and parallel recognition of patterns learned from training examples, appeared almost simultaneously.

- Conceptually, both took shape in the 1930s and 1940s. The first originated in Turing's theoretical work of 1936, which proposed a hypothetical machine to formalize the notion of a computable function, and was then given practical form in von Neumann's 1945 report. That report, which broke off unfinished on page 101, summarized the lessons learned from building the first electronic computer, ENIAC, and proposed a methodology for constructing machines with stored programs (ENIAC itself was programmed with plugs). Von Neumann, incidentally, drew on more than Turing's ideas: as the basic elements of his computer he proposed modified formal neurons (!) of McCulloch and Pitts, the founders of the neural network architecture. In an article published in 1943, they had proved that networks of such threshold elements can solve the same class of problems as a Turing machine.

However, the fates of these two computational paradigms resemble the many tales of the prince and the pauper. World War II spurred the effort to build a super-calculator.

- One of the main tasks of the US Army's Ballistic Research Laboratory was calculating ballistic trajectories and preparing correction tables. Each such table contained more than 2 thousand trajectories, and the laboratory could not cope with the volume of computation despite its swollen staff: about a hundred skilled mathematicians, reinforced by several hundred auxiliary human computers who had completed three-month preparatory courses. Under the pressure of these circumstances, in 1943 the army signed a contract worth 400 thousand dollars with the Moore School of Electrical Engineering at the University of Pennsylvania to build the first electronic computer, ENIAC. The project was led by John Mauchly and J. Presper Eckert (who was 24 on the day the contract was signed). ENIAC was completed only after the war. It consumed 130 kW, contained 18 thousand vacuum tubes operating at a clock frequency of 100 kHz, and could perform 300 multiplications per second.

Later, computers came into wide use in business, but still in that same role, as calculators. They evolved rapidly along the path laid out by von Neumann.

- The first mass-produced commercial computer, the IBM 650, appeared in 1954 (about 1.5 thousand units were sold over 15 years). In the same year the silicon transistor was invented. In 1961 its field-effect counterpart, the kind used in microchips, appeared, and in 1962 mass production of microchips began. In 1971 Intel released its first microprocessor, launching the microprocessor revolution of the 1970s. Just four years later the first personal computer, the Altair, appeared at the very affordable price of $397, although only as a kit of parts, much as amateur radio kits were sold at the time. In 1977 the fully featured, user-friendly Apple II appeared. In 1981 the computer giant IBM finally entered the PC market. By the 1990s a typical user had the equivalent of a Cray-1 supercomputer on the desktop.

As for the neural network architecture, despite the many overtures toward neural networks by the classics of cybernetics, its influence on industrial development remained minimal until recently. And yet in the late 1950s and early 1960s great hopes were pinned on this direction, mainly thanks to Frank Rosenblatt, who developed the first neural network device for pattern recognition, the perceptron (from the English word perception).

- The perceptron was first simulated in 1958, and training it took about half an hour of machine time on the IBM 704, one of the most powerful computers of the day. The hardware version, the Mark I Perceptron, was built in 1960 and was designed to recognize visual images. Its receptor field consisted of a 20x20 array of photodetectors, and it coped successfully with a number of problems; for example, it could distinguish the shapes of some letters.

At the same time, the first commercial neurocomputing companies appeared. The enthusiasm of that heroic “Sturm und Drang” period was so great that many, carried away, predicted the appearance of thinking machines in the very near future. The press inflated these prophecies to thoroughly indecent proportions, which naturally put off serious scientists. In 1969 Marvin Minsky, a former schoolmate of Rosenblatt's in the Bronx who had himself once designed neurocomputers, decided to put an end to this by publishing the book “Perceptrons” together with the South African-born mathematician Seymour Papert. This book, fateful for neurocomputing, rigorously proved what then seemed a fundamental limitation of perceptrons and argued that only a very narrow range of tasks was within their reach. In fact, the criticism applied only to perceptrons with a single layer of trainable neurons, but the learning algorithm proposed by Rosenblatt was not suitable for multilayer networks. The critics' cold shower cooled the ardor of the enthusiasts and slowed the development of neurocomputing for many years. Research in this direction remained curtailed until 1983, when it finally received funding from the US Defense Advanced Research Projects Agency (DARPA). This was the signal for the start of a new neural network boom.
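The single-layer limitation mentioned above can be illustrated with a small experiment. The sketch below is only an illustration under stated assumptions: it uses the textbook perceptron update rule with a step threshold (not necessarily Rosenblatt's exact procedure), and the names `train_perceptron`, `AND`, and `XOR` are hypothetical. A single threshold neuron learns the linearly separable AND function but never reaches an error-free pass on XOR.

```python
def step(total):
    """Threshold activation: fire (1) only when the weighted sum is positive."""
    return 1 if total > 0 else 0


def train_perceptron(samples, max_epochs=100):
    """Train one threshold neuron; return (converged, weights, bias)."""
    weights = [0, 0]
    bias = 0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            total = sum(w * x for w, x in zip(weights, inputs)) + bias
            error = target - step(total)
            if error != 0:
                mistakes += 1
                # Textbook perceptron rule: shift weights toward the target.
                weights = [w + error * x for w, x in zip(weights, inputs)]
                bias += error
        if mistakes == 0:
            return True, weights, bias
    return False, weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_converged, _, _ = train_perceptron(AND)
xor_converged, _, _ = train_perceptron(XOR)
print("AND learned:", and_converged)
print("XOR learned:", xor_converged)
```

XOR is not linearly separable, so no choice of two weights and a bias classifies all four cases correctly, however long the training runs.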

Interest in neural networks among the broader scientific community awakened after the theoretical work of the physicist John Hopfield (1982), who proposed a model of associative memory in neural ensembles. Hopfield and his many followers enriched the theory of neural networks with ideas from the arsenal of physics, such as collective interactions of neurons, network energy, and learning temperature. However, the real boom in practical applications began after David Rumelhart and co-authors published, in 1986, a method for training multilayer perceptrons that they called error backpropagation. The perceptron limitations that Minsky and Papert had written about turned out to be surmountable, and the capabilities of computing technology proved sufficient to solve a wide range of applied problems. In the 1990s the performance of serial computers grew so much that it became possible to simulate parallel neural networks with anywhere from several hundred to tens of thousands of neurons. Such neural network emulators can solve many problems of practical interest. In turn, neural network software will become the launch vehicle that carries true parallel neural network hardware into technological orbit.

Two computational paradigms

Even at the dawn of the computer era, before the first computers appeared, two fundamentally different approaches to information processing had been outlined: sequential processing of symbols and parallel recognition of patterns. In the context of this article, both symbols and images are “words” that computers operate on; they differ only in dimension. The information capacity of an image can exceed the number of bits used to describe a symbol by many orders of magnitude. But this purely quantitative difference has far-reaching qualitative consequences, because the complexity of data processing grows exponentially with the bit width of the data.

All possible operations on relatively short symbols can be described rigorously. One can therefore build a device, a processor, that processes in a predictable way any symbol arriving at its input, whether a command or data. If a complex task can then be reduced to the basic operations of such a processor, that is, if an algorithm for solving it can be written, its execution can be entrusted to a computer, sparing us tedious routine work. It turns out that any algorithm, however complex, can be programmed on elementary, simple processors. [2]
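As a rough illustration of this idea, the sketch below implements a toy processor with only four fully specified instructions and then composes them into an addition routine. Everything here is an assumption made for illustration: the instruction names (`inc`, `dec`, `jz`, `jmp`), the function `run`, and the program `ADD` are hypothetical and do not correspond to any real machine.

```python
def run(program, registers):
    """Execute a list of instructions on a dict of integer registers."""
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        op, *args = program[pc]
        if op == "inc":        # add 1 to a register
            registers[args[0]] += 1
            pc += 1
        elif op == "dec":      # subtract 1 from a register
            registers[args[0]] -= 1
            pc += 1
        elif op == "jz":       # jump to target if the register holds zero
            reg, target = args
            pc = target if registers[reg] == 0 else pc + 1
        elif op == "jmp":      # unconditional jump
            pc = args[0]
        else:
            raise ValueError(f"unknown instruction: {op}")
    return registers


# Addition built from the primitives: move b into a, one unit at a time.
ADD = [
    ("jz", "b", 4),   # 0: stop when b is exhausted (4 is past the end)
    ("dec", "b"),     # 1
    ("inc", "a"),     # 2
    ("jmp", 0),       # 3
]

result = run(ADD, {"a": 3, "b": 4})
print(result)
```

Each instruction has a complete, predictable description, and a more complex operation appears only as a program over those instructions.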

Describing all operations on multi-bit images is fundamentally impossible, since the length of the description grows exponentially. Consequently, any “image processor” is always specialized: the specification for its design is a deliberately incomplete table of image transformations, which as a rule covers only a negligible fraction of the possible combinations of inputs and outputs. In all other situations its behavior is unspecified. The processor must “work out” its own behavior, generalizing the available training examples so that, on examples it did not encounter during learning, its behavior is “similar to an acceptable degree”.
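The exponential growth is easy to make concrete. The sketch below counts how many rows a complete lookup table would need for an 8-bit symbol and for a 20x20 binary image (the size of the Mark I Perceptron receptor field mentioned earlier). Treating each photodetector as a single bit is a simplifying assumption, and the function name `lookup_table_rows` is hypothetical.

```python
def lookup_table_rows(n_bits):
    """Number of distinct inputs a complete table for n_bits must cover."""
    return 2 ** n_bits


symbol_rows = lookup_table_rows(8)        # one 8-bit symbol
image_rows = lookup_table_rows(20 * 20)   # one 20x20 black-and-white image

print("rows for an 8-bit symbol:", symbol_rows)
print("decimal digits in the row count for a 20x20 image:", len(str(image_rows)))
```

A table of 256 rows can be written out in full; a table whose row count has more than a hundred decimal digits cannot, so only a tiny sample of it can ever be specified.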

Thus, the difference between sequential and parallel computation runs much deeper than a longer or shorter computation time. Behind it lie entirely different methodologies, different ways of posing and solving information problems. It remains to add that the first path is taken by the familiar von Neumann computers, and the second, following the natural neural ensembles of the brain, is the one chosen by neurocomputing.

To fit neurocomputing into the overall picture of the evolution of computing machines, let us consider these two paradigms in historical context. It has long been observed that biological and computer evolution run in opposite directions. A person first learns to recognize visual images and to move, then to speak and to count, and only last acquires the hardest skill of all, the ability to think logically in abstract categories. Computers, on the contrary, first mastered logic and counting, then learned to play games, and only with great difficulty are approaching the problems of speech recognition, sensory image processing, and orientation in space. What explains this difference?

Sequential symbol processing

The first computers owe their appearance not only to the genius of scientists but, in no small part, to the pressure of circumstances. The Second World War demanded the solution of complex computational problems. Super-calculators were needed, and these became the first computers.

Given the high cost of hardware at the time, the choice of a sequential architecture, whose principles were formulated in von Neumann's famous report of 1945, was perhaps the only one possible. For several decades this choice determined the main occupation of computers: solving formalized problems. The division of labor between computer and person was fixed by the formula: “the person programs the algorithms, the computer executes them”.

Meanwhile, progress in semiconductor integrated technology provided several decades of exponential growth in the performance of von Neumann computers, mainly through higher clock frequencies and, to a lesser extent, through parallelism. While the clock frequency grows in inverse proportion to the size of the gates on the chip, that is, in proportion to the square root of the number of elements, the number of inputs and outputs grows only as its fifth root. This is reflected in the empirical Rent's rule: the number of gates in a processor is proportional to approximately the fifth power of the number of its inputs and outputs (D. Ferry, L. Akers, E. Greeneich, “Electronics of Ultra-Large-Scale Integrated Circuits”, M.: “Mir”, 1991). Therefore, a dramatic increase in processor word width is impossible while the current circuit design principles are retained. The processing of truly complex, image-like information must rest on fundamentally different approaches. That is why we still have no artificial vision systems that are in any way comparable in capability to their natural prototypes.
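The gap between the two growth rates can be checked with a little arithmetic. The sketch below simply takes the exponents stated above (1/2 for clock frequency, 1/5 for inputs and outputs) at face value; the function name `scaling_gains` and the example growth factor are assumptions chosen for illustration.

```python
def scaling_gains(element_growth):
    """Return (clock gain, I/O gain) when the element count grows by a factor."""
    clock_gain = element_growth ** (1 / 2)  # clock ~ square root of element count
    io_gain = element_growth ** (1 / 5)     # I/O count ~ fifth root of element count
    return clock_gain, io_gain


# Example: the number of elements on the chip grows 1024-fold.
clock_gain, io_gain = scaling_gains(1024)
print(f"clock frequency grows about {clock_gain:.1f}x")
print(f"inputs and outputs grow about {io_gain:.1f}x")
```

Under these assumptions a thousandfold increase in elements speeds the clock up by a factor of about thirty, while the number of external connections only quadruples.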

Parallel image processing

Recognizing sensory information and producing an adequate response to external stimuli is the main evolutionary task of biological computers, from the simplest nervous systems of mollusks to the human brain. Their job is not to execute externally supplied algorithms but to develop their own in the course of learning. Learning is a process of self-organization of a distributed computing medium, the neural ensembles. In distributed neural networks, information is processed in parallel, accompanied by continual learning guided by the results of that processing.

Only gradually, in the course of evolution, did biological neural networks learn to carry out sufficiently long logical chains, that is, to emulate logical thinking. Recognizing an image does not require a rationale. As a rule, any man can easily pick out a pretty girl glimpsed in a crowd. But which of them would venture to propose an algorithm for assessing female beauty? Does such an algorithm even exist? Who would formalize this task, and who needs it? Evolution does not solve formalized problems and does not reason; it only discards wrong decisions. Eliminating errors is the basis of all learning, whether in evolution, in the brain, or in neurocomputers.

But let us finally ask: how exactly do neurocomputers work? The structure of the brain is too complicated. Artificial neural networks are simpler: they “parody” the workings of the brain, as befits any scientific model of a complex system. The main thing that unites the brain and neurocomputers is their orientation toward image processing. Let us set the biological prototypes aside and focus on the basic principles of distributed data processing.

Connectionism

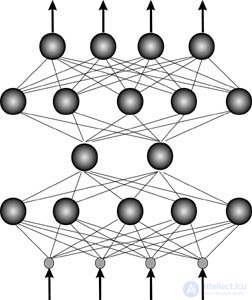

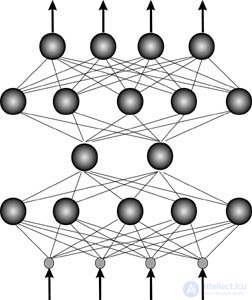

A distinctive feature of neural networks is the global nature of their connections. The basic elements of artificial neural networks, formal neurons, are designed from the outset to work with vector information. Each neuron in a network is usually connected to all neurons of the previous data processing layer (see fig.).

Specialization of the connections arises only at the stage of their tuning, that is, training on specific data. The architecture of the processor or, what amounts to the same thing, the algorithm for solving a specific problem emerges like a developing photograph as training proceeds.

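Each formal neuron performs a very simple operation: it weights its input values with its own locally stored synaptic weights and applies a nonlinear transformation to their sum:

$$y = f\left(\sum_i w_i x_i\right)$$

where $x_i$ are the inputs of the neuron, $w_i$ its synaptic weights, and $f$ the nonlinear activation function.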
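The operation of a formal neuron fits in a few lines of code. The following is a minimal sketch, not a reference implementation: the function name `formal_neuron` is hypothetical, and the hyperbolic tangent is only one common choice of nonlinearity, assumed here for illustration.

```python
import math


def formal_neuron(inputs, weights, activation=math.tanh):
    """Weight the inputs, sum them, and apply a nonlinear activation."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return activation(total)


# Two inputs whose weighted contributions cancel: 0.5*1.0 + (-0.25)*2.0 = 0.
output = formal_neuron([1.0, 2.0], [0.5, -0.25])
print(output)
```

The weights belong to the neuron itself, so a layer of such neurons can process the same input vector in parallel, each with its own weight vector.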

The nonlinearity of the output activation function is essential. If neurons were linear elements, any sequence of neurons would also perform a linear transformation, and the entire network would be equivalent to a single layer of neurons. Nonlinearity destroys linear superposition, so that the capabilities of a neural network are significantly greater than those of its individual neurons.
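This collapse of linear layers can be verified directly. In the sketch below, the weight matrices `W1` and `W2`, the input `x`, and the use of a rectifier (`relu`) as the nonlinearity are all arbitrary assumptions chosen for illustration. Two linear layers give the same result as one layer with the product matrix, while inserting a nonlinearity between them breaks the equivalence.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns] for row in a]


def relu(vector):
    """Example nonlinearity: replace negative components with zero."""
    return [max(0, v) for v in vector]


W1 = [[1, 2], [0, 1], [3, -1]]   # first layer: 2 inputs -> 3 neurons
W2 = [[1, 0, 2], [-1, 1, 0]]     # second layer: 3 inputs -> 2 neurons
x = [2, -1]

two_linear_layers = matvec(W2, matvec(W1, x))
one_merged_layer = matvec(matmul(W2, W1), x)
with_nonlinearity = matvec(W2, relu(matvec(W1, x)))

print(two_linear_layers, one_merged_layer, with_nonlinearity)
```

The first two results coincide for every input, because composing linear maps yields a linear map; the third generally differs, and no single weight matrix reproduces it for all inputs.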
