Lecture

The history of artificial intelligence (AI) begins long before our era. Aristotle was the first to attempt to define the laws of "right thinking", or processes of irrefutable reasoning. Early attempts to create mechanical calculating devices greatly impressed contemporaries. The most famous machine was the Pascaline, built in 1642 by Blaise Pascal. Pascal wrote that "the arithmetical machine produces effects which approach nearer to thought than all the actions of animals."

In the modern sense, the history of artificial intelligence begins with the creation of the first computers in the 1940s. With the advent of electronic computers with high (by the standards of the time) performance, the first questions in the field of artificial intelligence began to arise: is it possible to create a machine whose intellectual capabilities would equal, or even exceed, those of a human?

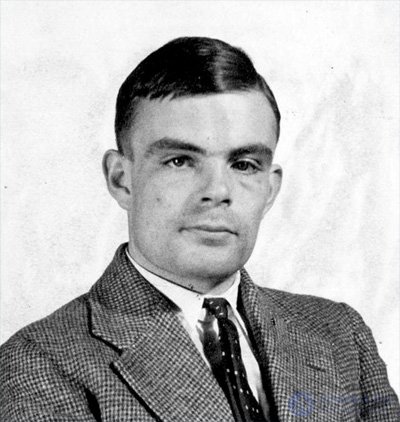

Alan Mathison Turing, OBE (June 23, 1912 - June 7, 1954) was an English mathematician, logician and cryptographer who had a significant impact on the development of computer science. He was made an Officer of the Order of the British Empire (1945) and a Fellow of the Royal Society of London (1951) [4]. The abstract "Turing machine" that he proposed in 1936, which can be regarded as a model of a general-purpose computer [5], made it possible to formalize the concept of an algorithm and is still used in many theoretical and practical studies. Turing's scientific works are a generally recognized contribution to the foundations of computer science and, in particular, to the theory of artificial intelligence [6].

During World War II, Alan Turing worked at the Government Code and Cypher School at Bletchley Park, where work on breaking the ciphers and codes of the Axis powers was concentrated. He led Hut 8, the group responsible for the cryptanalysis of German naval communications. Turing developed a number of codebreaking methods, including the theoretical basis for the Bombe, the machine used to break the German Enigma cipher.

After the war, Turing worked at the National Physical Laboratory, where he designed the ACE, one of the first designs for a computer with a program stored in memory. In 1948 he joined Max Newman's computing laboratory at the University of Manchester, where he assisted in the creation of the Manchester computers [7], and later became interested in mathematical biology. Turing published a paper on the chemical basis of morphogenesis and predicted chemical reactions that proceed in an oscillatory mode, such as the Belousov-Zhabotinsky reaction, which were first presented to the scientific community in 1968. In 1950 he proposed the Turing test, an empirical test for evaluating the artificial intelligence of a computer.

In 1952, Alan Turing was convicted of "gross indecency" under the Labouchere Amendment, which was used to prosecute homosexual men. Turing was given the choice between compulsory hormone therapy, designed to suppress libido, and imprisonment. He chose the former. Alan Turing died in 1954 from cyanide poisoning. The inquest established that Turing had committed suicide, although his mother believed that his death was an accident. Alan Turing has been recognized as "one of the most famous victims of homophobia in the UK" [8]. On December 24, 2013, Turing was posthumously pardoned by Queen Elizabeth II [9] [10].

The Turing Award, the most prestigious award in the world in the field of computer science, is named in his honor.

Alan Turing

On Computable Numbers, with an Application to the Entscheidungsproblem (1936)

Computing Machinery and Intelligence (1950)

Some quotes from his writings:

A machine, each movement of which ... is completely determined by its configuration, we will call an automatic machine (or a-machine).

However, for some purposes we can use machines (c-machines), whose behavior is only partially determined by their configuration. ... When such a machine reaches an ambiguous configuration, it stops until the external operator chooses any option to continue. In this article I consider only automatic machines, and therefore omit the prefix a.

(Alan Turing, On computable numbers, with an application to the Entscheidungsproblem)

The behaviour of the computer at any moment is determined by the symbols which he is observing, and his "state of mind" at that moment. We may suppose that there is a bound B to the number of symbols or squares which the computer can observe at one moment. If he wishes to observe more, he must use successive observations. We will also suppose that the number of states of mind which need be taken into account is finite.

(Alan Turing, On computable numbers, with an application to the Entscheidungsproblem)
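The automatic machine described in these quotes can be illustrated with a minimal sketch. It is not Turing's own notation: the rule-table format, the state names `start` and `halt`, the blank symbol `_` and the function name `run_turing_machine` are all assumptions made for this example. Each step is completely determined by the current state and the observed symbol, as in the definition of an a-machine.

```python
from collections import defaultdict


def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a deterministic single-tape machine until it reaches 'halt'.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (left), 0 (stay) or +1 (right).
    """
    # The tape is unbounded: unseen cells read as the blank symbol.
    cells = defaultdict(lambda: blank, enumerate(tape))
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        # The next action depends only on the state and the observed symbol.
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(blank)


# Hypothetical example machine: invert every bit, halt at the first blank.
INVERT = {
    ("start", "0"): ("1", +1, "start"),
    ("start", "1"): ("0", +1, "start"),
    ("start", "_"): ("_", 0, "halt"),
}

print(run_turing_machine(INVERT, "1011"))
```

The `max_steps` limit is a practical safeguard added here; an a-machine in Turing's sense has no such bound.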

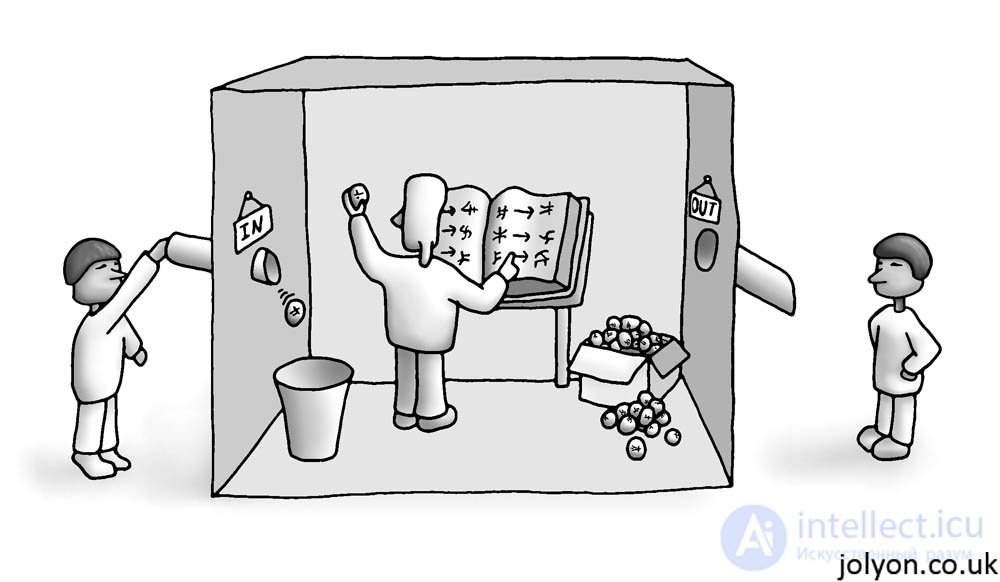

Note that the "computer" in this quote is animate ("he"): in 1936 the word referred to a person carrying out calculations.

[Consider] a game which we call the "imitation game". It is played with three people, a man (A), a woman (B), and an interrogator (C). The interrogator stays in a room apart from the other two. The object of the game for the interrogator is to determine which of the other two is the man and which is the woman [the object of the players is to confuse the interrogator]. The interrogator knows the players by the labels X and Y. The interrogator then asks questions, for example:

C: Will X please tell me the length of his or her hair?

Suppose X is actually the man, and X answers:

My hair is shingled, and the longest strands are about nine inches long.

(Alan Turing, Computing machinery and intelligence)

We now ask the question, "What will happen when a machine takes the part of A in this game?" Will the interrogator decide wrongly? This question replaces our original one, "Can machines think?"

(Alan Turing, Computing machinery and intelligence)

Opportunities for the practical implementation of AI appeared with the creation of electronic computers. At that time a philosophical discussion unfolded on the question "Can a machine think?". The result of this discussion was the test proposed by Alan Turing in the 1950s [1].

The test is as follows. There are two teletypes (no other terminal devices existed at the time; today an instant messenger would be suggested). One teletype is connected to the machine, the other to a terminal at which a person sits. Several experts take turns conducting a dialogue at each of the teletypes. If most of the experts fail, within five minutes, to recognize the machine in one of the interlocutors, the Turing test is considered passed.


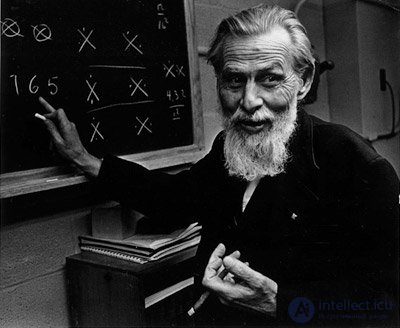

Warren Sturgis McCulloch (November 16, 1898, Orange, New Jersey, USA - September 24, 1969, Cambridge, Massachusetts, USA) was an American neuropsychologist, neurophysiologist, theorist of artificial neural networks and one of the fathers of cybernetics.

He studied at Haverford College, then studied philosophy and psychology at Yale University, where he received a Bachelor of Arts degree in 1921. He continued his studies at Columbia University and received a Master of Arts degree in 1923. He received his Doctor of Medicine degree in 1927 from the College of Physicians and Surgeons of Columbia University. He interned at the Bellevue Hospital Center in New York before returning to university research in 1934. He worked at the Laboratory of Neurophysiology at Yale University from 1934 to 1941, and then moved to the Department of Psychiatry at the University of Illinois at Chicago.

He was an active participant in the first cybernetics group, The Human Machine Project, which was formed unofficially during the Cerebral Inhibition Meeting held in New York in 1942. He served as honorary chairman of all ten conferences held from 1946 to 1953 with the participation of members of the cybernetics group (about 20 people in total). McCulloch was a professor of psychiatry and physiology at the University of Illinois and held a senior position in the Research Laboratory of Electronics at the Massachusetts Institute of Technology.

Together with the young researcher Walter Pitts, he laid the foundation for the subsequent development of neural technologies. His fundamentally new theoretical arguments turned the language of psychology into a constructive means of describing machines and machine intelligence. One approach to such problems was mathematical modeling of the human brain, which required a theory of brain activity. McCulloch and Pitts are the authors of a model in which a neuron is treated as a simple device operating on binary values. Their merit is that a network of such "neurons" could, in theory, perform numerical or logical operations of any complexity. For many years McCulloch worked on artificial intelligence and found common ground with the wider research community on the question of how machines could apply the concepts of logic and abstraction in the course of self-learning and self-improvement. He was a specialist in the epistemological problems of artificial intelligence.
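The idea of a neuron as a device operating on binary values can be sketched as a threshold unit. This is a simplified illustration, not the exact formalism of the 1943 paper: the function name `mp_neuron` is hypothetical, and inhibition is modeled here with a negative weight, which is an assumption of this sketch. Combining a few such units yields logical functions, which is the sense in which a network of them can compute logical operations.

```python
def mp_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Elementary logic gates, each a single threshold unit.
def AND(a, b):
    return mp_neuron([a, b], [1, 1], threshold=2)


def OR(a, b):
    return mp_neuron([a, b], [1, 1], threshold=1)


def NOT(a):
    # Inhibitory input modeled as a negative weight (a simplification).
    return mp_neuron([a], [-1], threshold=0)


def XOR(a, b):
    # A small network: no single threshold unit computes XOR.
    return AND(OR(a, b), NOT(AND(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, AND(a, b), OR(a, b), XOR(a, b))
```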

McCulloch's priority is confirmed by publications such as "A Logical Calculus of the Ideas Immanent in Nervous Activity", but after his break with Norbert Wiener he found himself outside the mainstream of cybernetics research. Despite his significant contribution to world science, McCulloch is less well known today than Norbert Wiener, Arturo Rosenblueth and Julian Bigelow.

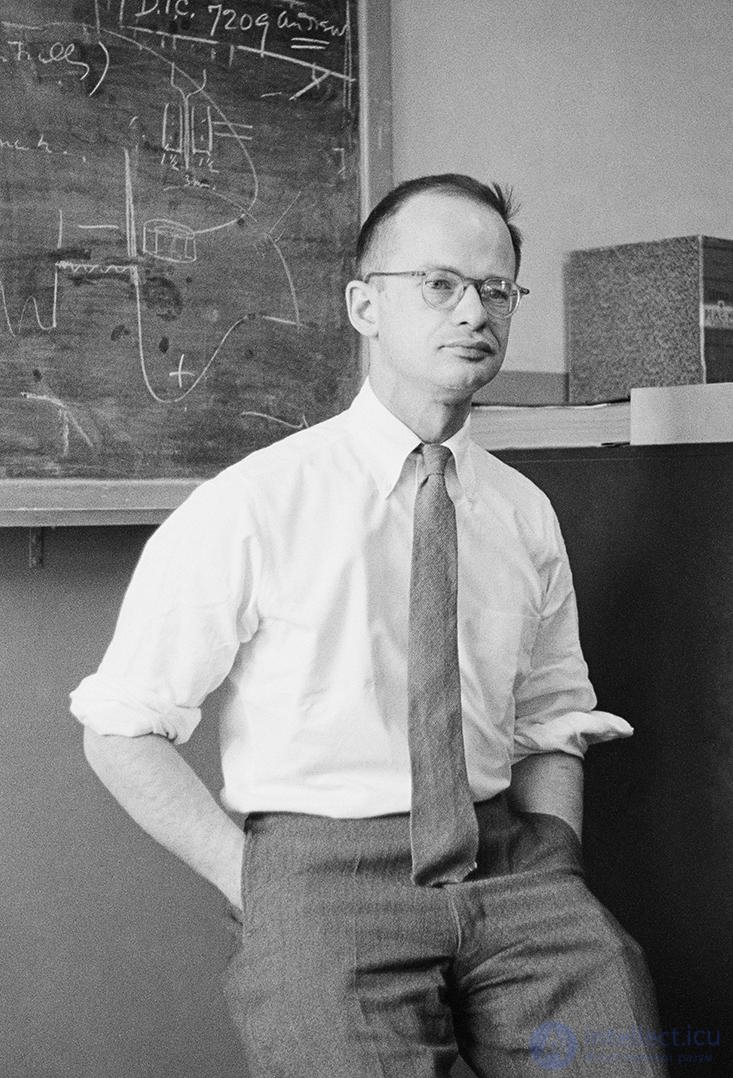

Walter Pitts (April 23, 1923 - May 14, 1969) was an American neurolinguist, logician and mathematician of the 20th century. Walter Pitts and his closest friend, Warren McCulloch, worked on the creation of artificial neurons; it was this work that laid the foundations for the development of artificial intelligence. Pitts laid the groundwork for the revolutionary idea of the brain as a computer, which stimulated the development of cybernetics, theoretical neurophysiology and computer science [1].

Walter Pitts was born in Detroit on April 23, 1923, into a troubled family. He taught himself Latin, Greek, logic and mathematics in the library. At 12 he read Principia Mathematica in three days and found several questionable points in it, about which he wrote to one of the authors of the three-volume work, Bertrand Russell. Russell replied and invited him to enroll in graduate school in the UK, but Pitts was only 12 years old. Three years later, he learned that Russell had come to lecture at the University of Chicago and ran away from home [2] [3].

In 1940, Pitts met Warren McCulloch, and they began to work on McCulloch's idea of treating the neuron as a computing element. In 1943 they published the paper "A Logical Calculus of the Ideas Immanent in Nervous Activity" [3].

On May 14, 1969, Pitts passed away at his home in Cambridge.

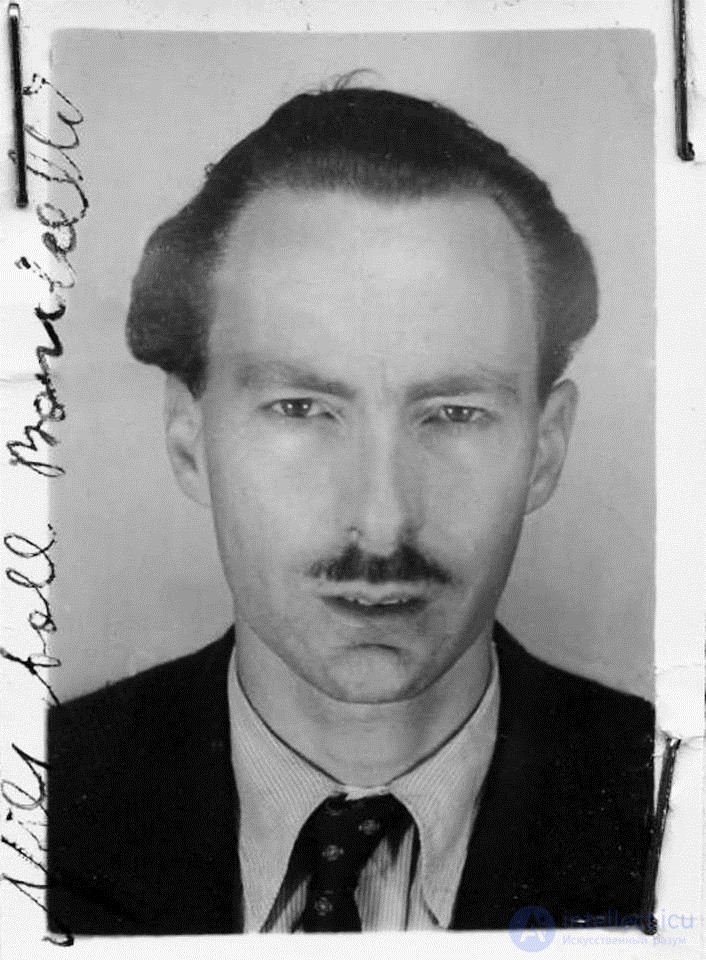

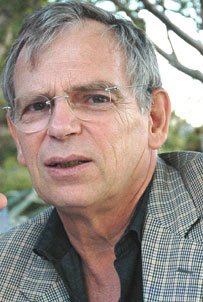

Nils Aall Barricelli (January 24, 1912 - January 27, 1993) was a Norwegian-Italian mathematician.

Barricelli's early computer experiments in symbiogenesis and evolution are considered pioneering in the study of artificial life [1]. Barricelli, who was independently wealthy, held an unpaid residency at the Institute for Advanced Study in Princeton, New Jersey, in 1953, 1954 and 1956. [2] [3] He later worked at the University of California, Los Angeles, at Vanderbilt University (1964), in the Department of Genetics of the University of Washington, Seattle (until 1968), and then at the Institute of Mathematics of the University of Oslo. Barricelli published in various fields, including viral genetics, DNA, theoretical biology, space flight, theoretical physics and mathematical language.

We propose that a 2 month, 10 man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.

(McCarthy et al., Proposal for the project)

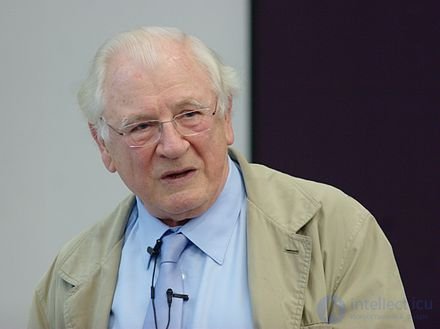

John Alan Robinson (born 1928) [2] is a philosopher by education, a mathematician and a computer scientist. He is University Professor Emeritus at Syracuse University, USA.

Robinson's main contribution is to the foundations of automated theorem proving. His unification algorithm eliminated one source of combinatorial explosion in resolution provers; it also set the stage for the logic programming paradigm, in particular for the Prolog language. Robinson received the 1996 Herbrand Award for Distinguished Contributions to Automated Reasoning.

Robinson was born in Yorkshire, England, and left for the United States in 1952 with a degree in classics from the University of Cambridge. He studied philosophy at the University of Oregon before moving to Princeton University, where he received his Ph.D. in philosophy in 1956. He then worked at Du Pont as an operations research analyst, where he learned to program and taught himself mathematics. He moved to Rice University in 1961, spending the summer months as a visiting researcher in the Applied Mathematics Division of Argonne National Laboratory. He moved to Syracuse University as Distinguished Professor of Logic and Computer Science in 1967 and became Professor Emeritus in 1993.

It was at Argonne that Robinson became interested in automated theorem proving and developed unification and the resolution principle. Resolution and unification have since been incorporated into many automated theorem-proving systems and are the basis for the inference mechanisms used in logic programming and the Prolog programming language.
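Unification, the operation underlying resolution and Prolog, finds a substitution that makes two terms identical. The sketch below is a textbook-style illustration rather than Robinson's original formulation. The term representation is an assumption of this example: variables are strings starting with an uppercase letter (the Prolog convention), constants are lowercase strings, and compound terms are tuples of the form `(functor, arg1, ...)`.

```python
def is_var(term):
    """Variables are strings starting with an uppercase letter (assumed convention)."""
    return isinstance(term, str) and term[:1].isupper()


def walk(term, subst):
    """Follow variable bindings until an unbound variable or a non-variable is reached."""
    while is_var(term) and term in subst:
        term = subst[term]
    return term


def occurs(var, term, subst):
    """Occurs check: does var appear inside term under the current substitution?"""
    term = walk(term, subst)
    if term == var:
        return True
    return isinstance(term, tuple) and any(occurs(var, arg, subst) for arg in term[1:])


def unify(a, b, subst=None):
    """Return a substitution (dict) unifying a and b, or None if none exists."""
    subst = {} if subst is None else subst
    a, b = walk(a, subst), walk(b, subst)
    if a == b:
        return subst
    if is_var(a):
        return None if occurs(a, b, subst) else {**subst, a: b}
    if is_var(b):
        return None if occurs(b, a, subst) else {**subst, b: a}
    if isinstance(a, tuple) and isinstance(b, tuple) and a[0] == b[0] and len(a) == len(b):
        for x, y in zip(a[1:], b[1:]):
            subst = unify(x, y, subst)
            if subst is None:
                return None
        return subst
    return None


# knows(john, X) unified with knows(Y, mother(Y))
print(unify(("knows", "john", "X"), ("knows", "Y", ("mother", "Y"))))
```

The returned bindings are in "triangular" form: a bound variable may refer to another bound variable, and `walk` resolves such chains on demand.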

Robinson was the founder and editor-in-chief of the Journal of Logic Programming and received many awards. They include a Guggenheim Fellowship in 1967, the American Mathematical Society Milestone Award in Automatic Theorem Proving in 1985 [3], an AAAI Fellowship in 1990 [4], a Humboldt Senior Scientist Award in 1995, the Herbrand Award for Distinguished Contributions to Automated Reasoning in 1996 [5] [6], and the honorary title of Founder of Logic Programming from the Association for Logic Programming in 1997 [7]. He received honorary doctorates from the Catholic University of Leuven in 1988 [8], Uppsala University in 1994 [9] and Universidad Politecnica de Madrid in 2003.


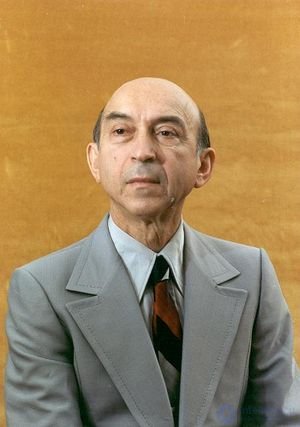

Lotfi Zadeh (Lotfi Askar Zadeh, Lotfi A. Zadeh; Azerbaijani: Lütfi Zadə, also Lütfəli Əsgərzadə [1]; born February 4, 1921, Novkhani, Azerbaijan SSR) is an American mathematician [1], the founder of the theory of fuzzy sets and fuzzy logic, and a professor at the University of California, Berkeley.

Born in Baku as Lutfi Aleskerzade (or Asker Zade). His father, Ragim Aleskerzade (1895-1980) [2] [3] [4], a journalist by profession, was an Azerbaijani and an Iranian subject; his mother, Feiga (Fania) Moiseevna Korenman (1897-1974) [5] [6] [7] [8], a pediatrician by profession, was of Jewish origin from Odessa [9] [10] [11]. Ragim Aleskerzade, whose family came from the city of Ardabil, was sent to Baku during the First World War as a foreign correspondent for the weekly Iran. There he married Fania Moiseevna Korenman, a student at the medical institute whose family had left Odessa during the Jewish pogroms [12], and during the NEP years he was engaged in the wholesale match trade. The family lived in Baku at the intersection of Asiatic and Maxim Gorky streets.

He studied at Russian School No. 16 in Baku and read a great deal as a child, both classic works of Russian literature and world classics in Russian translation. From 1932 he lived in Iran, where he studied for 8 years at the American College of Tehran (later known as Alborz, a Presbyterian missionary school with instruction in Persian), and then at the Faculty of Electrical Engineering of Tehran University (graduating in 1942). At school he met his future wife, Fay Zand (married name Fay Zadeh, born 1920), from a family of Jews from Dvinsk who had fled from Germany to Tehran after the Nazis came to power [13]. Many years later, Fay Zadeh became the author of the most complete biography of her husband, "My Life and Travels with the Father of Fuzzy Logic" [14].

After graduation, he worked with his father as a supplier of building materials for the American troops stationed in Iran. He moved to the United States in July 1944 and entered the Massachusetts Institute of Technology in September, receiving a master's degree in electrical engineering in 1946. At that time Lotfi Zadeh's parents lived in New York (his mother worked as a doctor) [15], where he entered graduate school at Columbia University; after defending his dissertation in 1949, he remained there as an assistant in the engineering department. Since 1959 he has worked at the University of California, Berkeley.

In 1965 he published his fundamental work on fuzzy sets, in which he set out the mathematical apparatus of the theory. In 1973 he proposed the theory of fuzzy logic, and later the theory of soft computing as well as the theory of computing with words and perceptions.
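The core of the mathematical apparatus of fuzzy sets can be shown briefly: an element belongs to a set to a degree between 0 and 1, and intersection, union and complement are taken as minimum, maximum and one minus the membership degree. The sketch below assumes triangular membership functions and invented temperature sets (`warm`, `hot`) purely for illustration; the helper names are hypothetical.

```python
def triangular(a, b, c):
    """Return a membership function rising from a to a peak at b and falling to c."""
    def mu(x):
        if x <= a or x >= c:
            return 0.0
        return (x - a) / (b - a) if x <= b else (c - x) / (c - b)
    return mu


def fuzzy_and(mu1, mu2):
    # Intersection: pointwise minimum of membership degrees.
    return lambda x: min(mu1(x), mu2(x))


def fuzzy_or(mu1, mu2):
    # Union: pointwise maximum of membership degrees.
    return lambda x: max(mu1(x), mu2(x))


def fuzzy_not(mu):
    # Complement: one minus the membership degree.
    return lambda x: 1.0 - mu(x)


# Illustrative sets over temperature in degrees Celsius (assumed values).
warm = triangular(15, 22, 30)
hot = triangular(25, 35, 45)

t = 26
print(warm(t), hot(t), fuzzy_and(warm, hot)(t), fuzzy_or(warm, hot)(t))
```

Unlike in classical set theory, a temperature of 26 degrees here is partly "warm" and partly "hot" at the same time.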

Joseph Weizenbaum (January 8, 1923, Berlin - March 5, 2008, Gröben, Germany) was an American scientist and an expert in artificial intelligence. He is known as the author of the ELIZA program, which he wrote in 1966.

He was born in Berlin into a Jewish family. In 1935 he emigrated with his family to the USA. He graduated from Wayne State University (Detroit, Michigan), receiving a bachelor's degree in 1948 and a master's degree in 1950. From 1953 to 1963 he worked at General Electric.

Since 1963, he worked at the Massachusetts Institute of Technology.

In 1996 he moved to Berlin.

Example of a dialogue with ELIZA:

RCD Hello

ELIZA Hello, RCD!

RCD How are you today?

ELIZA I'm afraid I'm a bit stressed today.

RCD So, probably not a good time to talk to you?

ELIZA You're not really talking about me?

RCD Who else will I be talking about then?

ELIZA Why do you ask?

RCD You don't feel interested in talking to me.

ELIZA I’m interested in talking to you?

RCD Because you are not really responding to my questions properly.

ELIZA Tell me RCD, does that suggest anything else which belongs to you?

RCD Sorry, I don't get you!

ELIZA It didn’t bother me. Please continue

RCD It is a good conversationalist!

ELIZA Why do you ask?

RCD I am not asking, just commenting.

ELIZA

I’m talking to someone so dumb.

It’s a dumb?

RCD No, I don't think so.

ELIZA

RCD No.

ELIZA Why 'no'?

RCD Why not 'no'?

ELIZA Why 'no'?

RCD I give up.
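Replies of this kind can be produced without any understanding of the conversation, by matching keywords and echoing fragments of the user's input. The following is a minimal sketch of that technique, not Weizenbaum's original script: the rules, the `REFLECTIONS` table and the function names are all invented for this example.

```python
import re

# Pronoun swaps applied to the echoed fragment (illustrative subset).
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

# (pattern, reply template) pairs, tried in order; all rules are hypothetical.
RULES = [
    (re.compile(r"\bi feel (.+)", re.I), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.I), "How long have you been {0}?"),
    (re.compile(r"\bbecause (.+)", re.I), "Is that the real reason?"),
    (re.compile(r"^no\b", re.I), "Why 'no'?"),
]
DEFAULT = "Please continue."


def reflect(fragment):
    """Swap first and second person so the fragment can be echoed back."""
    words = fragment.rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in words)


def respond(text):
    """Return the reply of the first matching rule, or a stock phrase."""
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return DEFAULT


for line in ["I am worried about my exams.", "No.", "I give up."]:
    print("RCD  ", line)
    print("ELIZA", respond(line))
```

When no rule matches, the program falls back to a stock phrase, which is why such dialogues tend to go in circles, as in the transcript above.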

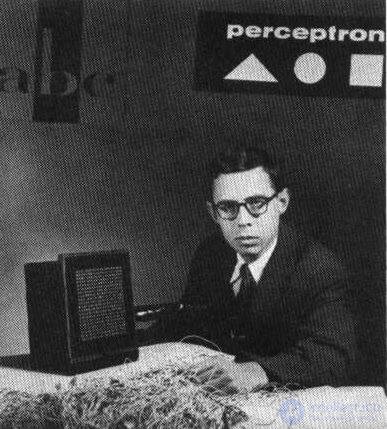

Frank Rosenblatt (1928-1971) was a famous American scientist in the fields of psychology, neurophysiology and artificial intelligence.

He was born in New York, the youngest (third) child in a family of Jewish immigrants from the Russian Empire (Labun, now in the Khmelnitsky region of Ukraine): the sociologist and economist Frank Ferdinand Rosenblatt (1882-1927) and the social worker Catherine Rosenblatt (née Golding). He had a sister, Bernice, and a brother, Maurice Rosenblatt (1915-2005), later an American liberal politician and lobbyist who played a leading role in the fall of Senator Joseph McCarthy. [1] [2] [3] His father worked as director of the Joint Distribution Committee of the American Funds for the Relief of Jewish War Sufferers in Russia and Siberia (1920-1921) and was later one of the leaders of that organization; he was the author of a number of scholarly works and an editor of workers' periodicals in Yiddish. He died a few months before the birth of his youngest son. [4]

He graduated from Cornell University. In 1958-1960, at Cornell University, he created the "Mark I" computing system (he is shown next to it in the photo). It was the first neurocomputer capable of learning the simplest tasks; it was built on the perceptron, a neural network that Rosenblatt had developed three years earlier.

Rosenblatt's perceptron was originally simulated on the IBM 704 computer at the Cornell Aeronautical Laboratory in 1957. By studying neural networks such as the perceptron, Rosenblatt hoped to "understand the fundamental laws of organization common to all information processing systems, including both machines and the human mind."
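How a perceptron learns a simple task can be sketched with the classic error-correction rule: when the output is wrong, each weight is nudged in the direction that reduces the error. This is a minimal single-unit illustration, not a model of the Mark I hardware; the function names, the learning rate and the epoch limit are assumptions of this example.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 when the weighted sum plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Train on (inputs, target) pairs with the perceptron error-correction rule."""
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            delta = target - predict(weights, bias, x)
            if delta:
                # Move the decision boundary toward the misclassified example.
                weights = [w + lr * delta * xi for w, xi in zip(weights, x)]
                bias += lr * delta
                errors += 1
        if errors == 0:  # every sample classified correctly
            break
    return weights, bias


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b = train_perceptron(AND_DATA)
print([predict(w, b, x) for x, _ in AND_DATA])
```

Training succeeds on `AND_DATA` because the two classes can be separated by a straight line. For `XOR_DATA` no such line exists, so a single unit of this kind cannot classify all four cases correctly however long it is trained.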

Rosenblatt was a very colorful figure among the teaching staff at Cornell University in the early 1960s. A handsome bachelor, he skillfully drove a classic MGA sports car and often went for walks with his cat Tobermory. He enjoyed spending time with students. For several years he gave a lecture course for undergraduates called Theory of the Mechanisms of the Brain, aimed at students of both the engineering and the humanities departments of Cornell University.

This course was an incredible mix of ideas from a huge number of fields: results obtained during brain surgery on conscious epileptic patients; experiments on the activity of individual neurons in the visual cortex of cats; studies of disturbances of mental processes caused by injuries to particular areas of the brain; the principles of operation of various analog and digital electronic devices that simulate details of the behavior of neural systems (the perceptron itself being one example); and so on.

In his course, Rosenblatt led fascinating discussions of brain behavior based on what was known about the brain at the time, long before computed tomography and positron emission tomography became available for brain research. For example, Rosenblatt gave a calculation showing that the number of neural connections in the human brain (more precisely, in the cerebral cortex) is sufficient to store complete "photographic" images from the sense organs at 16 frames per second for about two hundred years.

In 1962, on the basis of the course materials, Rosenblatt published the book Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms, which was later used as a textbook for his course.

In 2004, the IEEE established the Frank Rosenblatt Award, which is given for "outstanding contributions to the advancement of the design, practice, techniques or theory in biologically and linguistically motivated computational paradigms including, but not limited to, neural networks, connectionist systems, evolutionary computation, fuzzy systems, and hybrid intelligent systems in which these paradigms are contained." [1]

However, in 1969 Rosenblatt's former classmate Marvin Minsky, together with Seymour Papert, published the book Perceptrons, in which they gave a rigorous mathematical proof that the perceptron cannot learn in most cases of practical interest. As the ideas and conclusions of the book gained acceptance, work on neural networks was wound down at many research centers and funding was significantly reduced. Rosenblatt died in a tragic accident while sailing on his birthday, so he could not give a reasoned response to the criticism, and research on neural networks stalled for almost a decade.

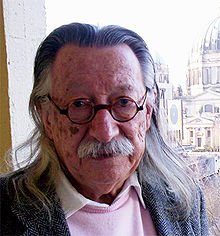

John Henry Holland (February 2, 1929, Fort Wayne - August 9, 2015, Ann Arbor) was an American scientist, professor of psychology and professor of electrical engineering and computer science at the University of Michigan, Ann Arbor. He was one of the first scientists to study complex systems and nonlinear science, and is known as the father of genetic algorithms.

Holland was born in Fort Wayne, Indiana, in 1929. He studied physics at the Massachusetts Institute of Technology, where he received a Bachelor of Science degree in 1950. He then studied mathematics at the University of Michigan, where he received a Master of Arts degree in 1954 and, in 1959, one of the first Ph.D. degrees in computer science.

He was a member of the Center for the Study of Complex Systems at the University of Michigan and a member of the Board of Trustees and the Science Board of the Santa Fe Institute.

John Holland was awarded a MacArthur Fellowship [1]. He was a fellow of the World Economic Forum [2].

Holland often lectured around the world about his own work, current research and open questions in the study of complex adaptive systems. In 1975 he wrote a book on genetic algorithms, "Adaptation in Natural and Artificial Systems". He also developed the schema theorem.
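The idea of a genetic algorithm can be sketched in a few lines: a population of bit strings is repeatedly recombined by crossover, perturbed by mutation and filtered by selection. This is a simplified illustration, not the formulation from Holland's book: it uses tournament selection and keeps track of the best individual seen, and the function name `evolve` and all parameter values are assumptions of this example.

```python
import random


def evolve(fitness, length=20, pop_size=30, generations=60, mutation_rate=0.02, seed=0):
    """Return the fittest bit string found by a simple genetic algorithm."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]

    def select():
        # Tournament selection: the fitter of two random individuals.
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    best = max(population, key=fitness)
    for _ in range(generations):
        offspring = []
        while len(offspring) < pop_size:
            p1, p2 = select(), select()
            cut = rng.randrange(1, length)  # single-point crossover
            child = p1[:cut] + p2[cut:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit for bit in child]
            offspring.append(child)
        population = offspring
        best = max(population + [best], key=fitness)
    return best


# Toy problem: maximize the number of ones in the string.
result = evolve(sum)
print(result, sum(result))
```

The fitness function is passed in as an argument, so the same loop can be applied to other problems encoded as bit strings.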

Holland is the author of several books and articles on complex adaptive systems.

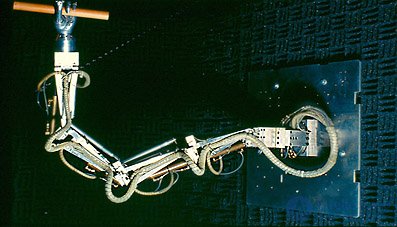

1959 - Development of the first industrial robot

The first industrial robot was created by the self-taught inventor George Devol. The robot weighed two tons and was controlled by a program stored on a magnetic drum. It used hydraulic actuators, and the positioning accuracy of the manipulator was 0.254 mm. US Patent No. 2988237 was issued for the design, and the company Unimation was then founded.

1961 - Unimation introduces the first robot

The world's first industrial robot was put into service on a production line at the General Motors plant in New Jersey. The robot handled (moved) parts in the production of gearshift knobs and window handles. The production cost of the robot was about $65,000, but Unimation sold it for only $18,000. The control program was stored on a magnetic drum; the robot weighed 1814 kg.

1962 - The first cylindrical robot is installed

Six Versatran robots from AMF (American Machine and Foundry) were installed at the Ford plant in Canton.

1967 - The first industrial robot in Europe is installed

The first industrial robot in Europe was installed at a metalworks in Upplands Väsby, Sweden.

1968 - The first hand-like industrial robot is created

The first industrial robot manipulator resembling the human hand was created.

1969 - The first robot to automate spot welding in the USA is introduced

The introduction of Unimation robots to automate resistance welding at General Motors, USA, increased the plant's overall productivity and significantly reduced the amount of heavy and dangerous work done by people.

Erroneous predictions of that time

In from three to eight years we will have a machine with the general intelligence of an average human being. I mean a machine that will be able to read Shakespeare, grease a car, play office politics, tell a joke, have a fight. At that point the machine will begin to educate itself with fantastic speed. In a few months it will be at genius level and a few months after that its powers will be incalculable.

(Marvin Minsky, Quoted by Life, 1970)

Marvin Lee Minsky (August 9, 1927 - January 24, 2016) was an American scientist in the field of artificial intelligence and co-founder of the Artificial Intelligence Laboratory at the Massachusetts Institute of Technology.

He wrote the book "Perceptrons" (with Seymour Papert), which became a fundamental work for subsequent developments in the field of artificial neural networks. In it he gave a number of his own proofs of the perceptron convergence theorem. The book's criticism of research in this field, and its demonstration of the computing resources such research would require, are considered the reason for the loss of interest in artificial neural networks in the academic literature of the 1970s.

"Our mathematical analysis has shown why increasing the size of a perceptron does not improve its ability to solve complex problems. Moreover, contrary to the generally accepted opinion, practically all of the theorems also apply to multilayer feedforward neural networks. Interestingly, though, no one has ever proved this, and Papert and I moved on to other questions in this area." (Marvin Minsky)

Minsky was a consultant on the film 2001: A Space Odyssey and is mentioned in the film's script and in the book:

"In the 1980s, Minsky and Good had shown how neural networks could be generated automatically (self-replicated) in accordance with any arbitrary learning program. Artificial brains could be grown by a process strikingly analogous to the development of a human brain. In any given case, the precise details would never be known, and even if they were, they would be millions of times too complex for human understanding."

Sir Michael James Lighthill (January 23, 1924 - July 17, 1998) was an English scientist in the field of applied mathematics and the founder of aeroacoustics. He worked at Cambridge University. He solved a wide range of fundamental problems in aircraft acoustics, real gas dynamics, boundary layer theory, fluid mechanics and biomechanics. He was a Fellow of the Royal Society of London and President of the International Union of Theoretical and Applied Mechanics (1984).

In his youth, Sir James Lighthill was known as Michael Lighthill. He was born on January 23, 1924, in Paris, where his father, Ernst Balzar Lighthill, worked as a mining engineer. The family's original name was Lichtenberg, but in 1917 Ernst Lichtenberg changed it to Lighthill. James's mother, Marjorie Holmes, was the daughter of an engineer and 18 years younger than her husband. Ernst Lighthill was 54 years old when his son was born; three years later, in 1927, he retired and moved with his family to England.

James was a child prodigy and finished school two years earlier than his peers. At the age of 15 he won a scholarship to Trinity College, Cambridge, but the university asked him to wait two years, until he turned seventeen. During this time, James and his school friend Freeman Dyson (later known as one of the founders of quantum electrodynamics) independently studied the first-year mathematics courses, which allowed them to graduate from the university in 1943. Interestingly, college classes continued during the war, but there were almost no students, and Paul Dirac, who lectured on the equations of mathematical physics at Cambridge, taught James and Freeman virtually one-to-one.

While studying at Cambridge, James met his future wife, Nancy Dumaresq, who was studying mathematics. After graduating, he tried to get a job at the Royal Aircraft Establishment, where Nancy had already obtained a position, but he was instead offered a place in the Aerodynamics Division of the National Physical Laboratory. In 1945 they married and James left the laboratory to work at Trinity College. The following year he was offered a senior lectureship at the University of Manchester, where he built the strongest fluid dynamics group in the country. In 1950, at the age of 26, he became professor of applied mathematics at the University of Manchester. In 1953 he was elected a Fellow of the Royal Society of London, and from 1964 he was a professor at Imperial College London, where he founded the Institute of Applied Mathematics.

In 1969, Paul Dirac retired from the position of Lucasian Professor of Mathematics at Cambridge; Lighthill held the chair for the next 10 years (he was succeeded by Stephen Hawking).

Lighthill was a very active person. He was repeatedly fined for speeding, even while he was Lucasian Professor. He also loved to swim, and once swam several miles across a bay to reach a conference in Scotland instead of using land transport. A helicopter was called to "rescue" him, but he refused to interrupt the swim. Lighthill spent every summer on the small island of Sark (Channel Islands), which he first swam around in 1973. He died on July 17, 1998, during a morning swim.

Lighthill was a foreign member of the Russian Academy of Sciences.

In 1952, Lighthill published a paper on aerodynamically generated sound, in which he first proposed the acoustic analogy for calculating the noise of a turbulent jet. This elegant, mathematically clean approach to an engineering problem immediately gained recognition in the scientific community. A direct consequence of the analogy, the eighth-power law, states that the sound power radiated by an engine jet is proportional to the eighth power of the jet velocity. The theoretically derived law was confirmed experimentally and became the basis for reducing the noise of modern aircraft. This publication, together with the second part published in 1954, marked the beginning of a new field of science: aeroacoustics.
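To get a feel for how steep an eighth-power dependence is, the following minimal sketch computes the change in radiated sound power when the jet velocity is scaled by some factor. It assumes only the proportionality stated above (power proportional to velocity to the eighth power, everything else held fixed); the function names are hypothetical and chosen for illustration.

```python
import math


def relative_jet_noise_power(velocity_ratio: float) -> float:
    """Ratio of radiated sound power after scaling jet velocity by velocity_ratio.

    Assumes the eighth-power law: power is proportional to velocity ** 8.
    """
    return velocity_ratio ** 8


def power_change_db(velocity_ratio: float) -> float:
    """The same power ratio expressed in decibels (10 * log10 of the ratio)."""
    return 10.0 * math.log10(relative_jet_noise_power(velocity_ratio))


# Halving the jet velocity cuts the radiated power by a factor of 2 ** 8 = 256.
print(relative_jet_noise_power(0.5))   # 0.00390625
print(round(power_change_db(0.5), 1))  # -24.1
```

Under this assumption, even a modest reduction in jet velocity gives a large reduction in noise, which is why the law mattered so much for engine design.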

Lighthill later worked extensively on numerical modeling, biomechanics, and artificial intelligence. In 1973 the Lighthill Report was published, in which he critically reviewed developments in artificial intelligence and gave very pessimistic forecasts for the main directions of the field.

It is sometimes said that men's motivation for work ... in pure science is compensation for the impossibility of bearing children. If this is true, then Building Robots is the perfect compensation! ... most robots are designed to function in accordance with men's ideas about the world of the child: robots play games, solve puzzles, describe pictures in words (like "bear on a rug with a ball"). But the rich emotional component of childhood is completely absent. The usual answer to this is that robotics is a young science, and therefore robots are capable of imitating only children's actions. However, the author has come to the ... opinion that the connections between robots and their builders are reminiscent of pseudo parent-child relationships.

(James Lighthill, Artificial Intelligence: A General Survey)

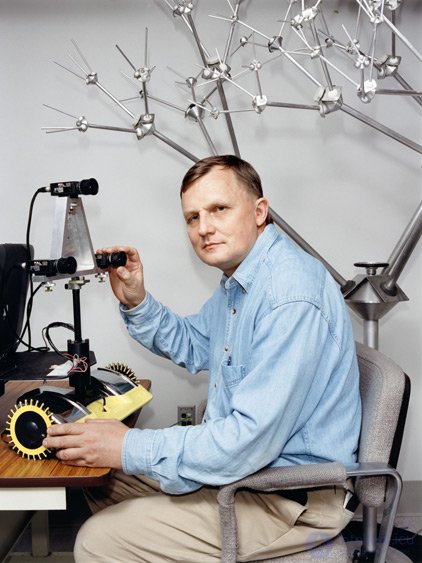

Hans Moravec (born November 30, 1948, Kautzen, Austria) is an adjunct professor at the Robotics Institute at Carnegie Mellon University [1]. He is known for his work in robotics and artificial intelligence and for his writings on the impact of technology. Moravec is also known as a futurist, and many of his publications focus on transhumanism.

His most cited work was Sensor Fusion in Certainty Grids for Mobile Robots , published in AI Magazine [2] .

In 1988 his book Mind Children was published, in which Moravec describes his view of Moore's Law and the development of artificial intelligence. In particular, he writes that robots will evolve into a separate artificial species, starting around 2030-2040.

Moravec's paradox is a principle in artificial intelligence and robotics according to which, contrary to popular belief, high-level cognitive processes require relatively little computation, while low-level sensorimotor operations require enormous computational resources. The principle was formulated by Hans Moravec, Marvin Minsky, and other researchers in the 1980s. According to Moravec:

It is relatively easy to make a computer perform at adult level on intelligence tests or at checkers, and hard or impossible to give it the skills of a one-year-old child when it comes to perception or movement.

(Hans Moravec, Mind Children: The Future of Robot and Human Intelligence)

"According to strong AI, the computer is not merely a tool in the study of the mind; rather, the appropriately programmed computer really is a mind ... [it can be] said to understand and have other cognitive states."

Searle goes on to examine the claim that "an appropriately programmed computer" can have cognitive states and can thereby explain human cognition, taking Roger Schank's programs as his example.

"Schank's program can be described as follows: the aim of the program is to simulate the human ability to understand stories. It is characteristic of human beings [that they can] answer questions about a story even though the information they give was never explicitly stated in the story."

"Schank's machines can similarly answer questions ... in this fashion. To do this, they have a 'representation' of the sort of information that human beings have about restaurants ... the machine will print out answers of the sort that we would expect human beings to give if told similar stories."

"Partisans of strong AI claim that [in this situation] the machine ... can literally be said to understand the story ... and ... that what the machine and its program do explains the human ability to understand the story and answer questions about it."

Both claims seem to Searle to be "totally unsupported by Schank's work." (This is not to say that Schank himself is committed to these claims.)

"One way to test any theory of the mind is to ask oneself what it would be like if my mind actually worked on the principles that the theory says all minds work on. Let us apply this test to the Schank program."

"... Suppose that I am locked in a room and given a large batch of Chinese writing ... I know no Chinese ... Now suppose further that after this first batch of Chinese writing I am given a second batch together with a set of rules for correlating the second batch with the first batch ... I can [now] identify the symbols entirely by their shapes. Now suppose also that I am given a third batch together with some instructions that enable me to correlate elements of this third batch with the first two batches.

... Unknown to me, the people who are giving me all of these symbols call the first batch 'a script,' the second batch 'a story,' and the third batch 'questions.' They call the symbols I give them back in response to the third batch 'answers to the questions,' and the set of rules in English that they gave me, they call 'the program.'

... Nobody just looking at my answers can tell that I do not speak a word of Chinese. As far as the Chinese is concerned, I simply behave like a computer."
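The kind of purely formal rule-following described in the thought experiment can be sketched in a few lines. This is only an illustration under stated assumptions, not Schank's program or anything Searle wrote: the rule book, its entries, and the function name are hypothetical, and opaque placeholder tokens stand in for the Chinese symbols.

```python
# Hypothetical rule book: it pairs the shape of an incoming symbol string
# with the symbol string to hand back. Nothing here encodes what any
# symbol means; the entries are placeholders invented for illustration.
RULE_BOOK = {
    "squiggle squiggle": "squoggle squoggle",
    "squoggle squiggle": "squiggle squoggle",
}


def room_operator(question: str) -> str:
    """Return the prescribed reply by matching the input's shape alone.

    An unknown input yields an empty reply, since the operator has no
    rule to follow for it.
    """
    return RULE_BOOK.get(question, "")


print(room_operator("squiggle squiggle"))  # squoggle squoggle
```

The lookup produces the expected outputs without any step that involves the meaning of the symbols, which is the situation the person in the room is in. Whether a sufficiently rich version of such a system could be said to understand is exactly what the argument disputes.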

In light of the two claims above ...

1. "It seems quite obvious in this example that I do not understand a word of Chinese. I have inputs and outputs that are indistinguishable from those of the native Chinese speaker ... but I still understand nothing. For the same reasons, Schank's computer understands nothing of any stories."

2. The claim that the program explains human understanding is false, because "the computer and its program do not provide sufficient conditions of understanding, since the computer and the program are functioning, and there is no understanding."

"There is no reason to suppose that such principles are necessary or even contributory, since no reason has been given to suppose that when I understand English I am operating with any formal program at all."

"Before looking at the replies to this example, I first want to block some common misunderstandings about 'understanding.' There are clear cases in which 'understanding' literally applies and clear cases in which it does not apply; and these two sorts of cases are all I need for this argument."

"If the sense in which Schank's programmed computers understand stories is supposed to be the metaphorical sense in which the door understands, and not the sense in which I understand English, the issue would not be worth discussing."

"Newell and Simon write that the kind of cognition they claim for computers is exactly the same as for human beings ... and it is the kind of claim I will be considering. I will argue that in the literal sense the programmed computer understands what the car and the adding machine understand, namely, exactly nothing. The computer's understanding is not just partial or incomplete; it is zero."

Now to the replies:

1. The Systems Reply (Berkeley). This reply is essentially that, in the example, "understanding is not being ascribed to the mere individual; rather it is being ascribed to this whole system of which he is a part."

"My response to the systems theory is quite simple: let the individual internalize all of these elements of the system ... We can even get rid of the room and suppose he works outdoors. All the same, he understands nothing of the Chinese, and neither does the system, because there isn't anything in the system that isn't in him. If he doesn't understand, then there is no way the system could understand, because the system is just a part of him."

The counter to this may be that "the man as a formal symbol manipulation system" really does understand Chinese. But this cannot be right, because the Chinese subsystem "knows only that 'squiggle squiggle' is followed by 'squoggle squoggle.'" "The whole point of the original example was to argue that such symbol manipulation by itself couldn't be sufficient for understanding Chinese in any literal sense."

"The only motivation for saying there must be a subsystem in me that understands Chinese is that I have a program and I can pass the Turing test; I can fool native Chinese speakers. But precisely one of the points at issue is the adequacy of the Turing test. The example shows that there could be two 'systems,' both of which pass the Turing test, but only one of which understands."

Furthermore, the systems reply "would appear to lead to consequences that are independently absurd ... it looks like all sorts of noncognitive subsystems are going to turn out to be cognitive" (e.g., the heart, the stomach). "If we accept the systems reply, then it is hard to see how we avoid saying that [these subsystems] are all understanding subsystems."

"If strong AI is to be a branch of psychology, then it must be able to distinguish those systems that are genuinely mental from those that are not ... anyone who thinks strong AI has a chance as a theory of the mind ought to ponder the implications of that remark."

2. The Robot Reply (Yale). This argument goes: "Suppose we wrote a different kind of program from Schank's program. Suppose we put a computer inside a robot, and this computer would not just take in formal symbols as input and give out formal symbols as output, but rather would actually operate the robot in such a way that the robot does something very much like perceiving, walking, moving about ... anything. All of this would be controlled by its computer 'brain.' Such a robot would, unlike Schank's computer, have genuine understanding and other mental states."

To be continued...
