Lecture

An example of solving the simplest abstract problem:

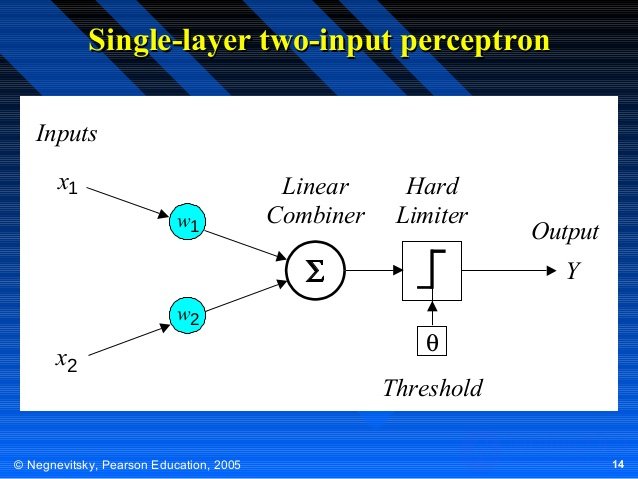

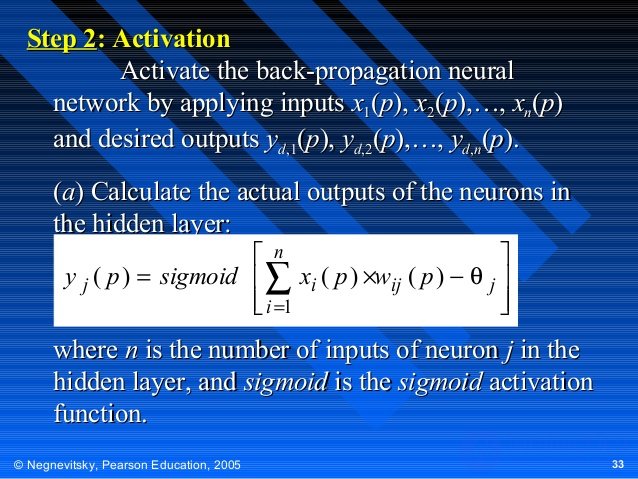

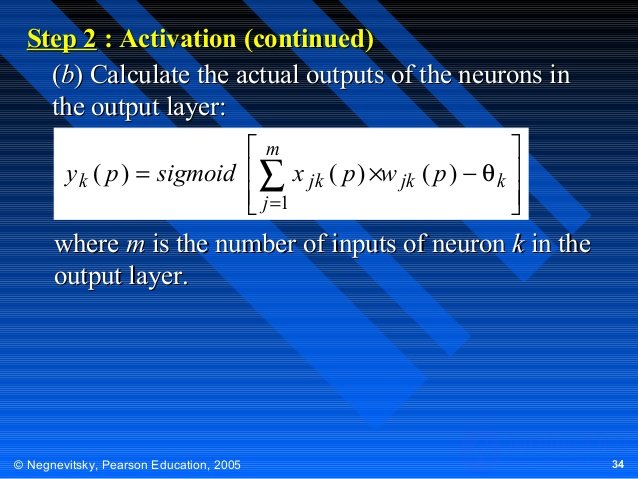

The input signals (variables) $X_i$ are weighted (multiplied by the coefficients $W_i$, called synaptic weights) and then summed. The resulting weighted sum

$$S = W_1X_1 + W_2X_2 + \dots + W_NX_N$$

is transformed by the function $f(S)$, called the activation function.
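The neuron model just described, a weighted sum followed by an activation function, can be sketched as two small functions. This is a minimal sketch, not the lecture's own code; the names `weighted_sum` and `neuron_output` and the example values are hypothetical.

```python
from typing import Callable, Sequence


def weighted_sum(x: Sequence[float], w: Sequence[float]) -> float:
    """S = W1*X1 + W2*X2 + ... + WN*XN."""
    if len(x) != len(w):
        raise ValueError("each input X_i needs exactly one weight W_i")
    return sum(wi * xi for wi, xi in zip(w, x))


def neuron_output(x: Sequence[float], w: Sequence[float],
                  f: Callable[[float], float]) -> float:
    """Pass the weighted sum S through the activation function f."""
    return f(weighted_sum(x, w))


# Hypothetical inputs and weights, with a linear (identity) activation.
print(neuron_output([1.0, 2.0, 3.0], [0.5, -1.0, 0.25], lambda s: s))  # -0.75
```

Passing the activation function as a parameter keeps the weighted sum separate from the choice of f, so the same neuron can be tried with different activations.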

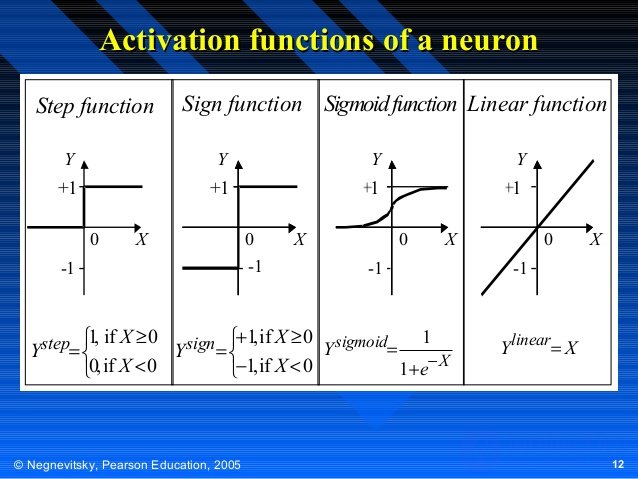

The output signal $Y$ can also be weighted (scaled). The most commonly used activation function is the sigmoid function

$$Y = \frac{1}{1 + e^{-\lambda S}},$$

but the hyperbolic tangent, logarithmic, linear, and other functions are also used. The main requirement for such functions is monotonicity.
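A few of the activation functions named above, together with a rough numerical monotonicity check, might look like this. It is a sketch under stated assumptions: the function names, the sampling interval [-10, 10] and the grid size are hypothetical choices, and the grid check only samples the function, so it is evidence of monotonicity rather than a proof. The logarithmic function is left out because its exact form is not given here.

```python
import math


def sigmoid(s: float, lam: float = 1.0) -> float:
    """Y = 1 / (1 + exp(-lam * S)), written to avoid overflow for large |S|."""
    z = lam * s
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # for z < 0 this stays in (0, 1)
    return e / (1.0 + e)


def tanh_act(s: float) -> float:
    """Hyperbolic tangent, with outputs in (-1, 1)."""
    return math.tanh(s)


def linear(s: float, k: float = 1.0) -> float:
    """Linear activation Y = k * S."""
    return k * s


def is_non_decreasing(f, lo: float = -10.0, hi: float = 10.0, n: int = 1000) -> bool:
    """Sample f on an evenly spaced grid over [lo, hi] and check it never decreases."""
    xs = [lo + (hi - lo) * i / n for i in range(n + 1)]
    ys = [f(x) for x in xs]
    return all(a <= b for a, b in zip(ys, ys[1:]))


print(is_non_decreasing(sigmoid), is_non_decreasing(tanh_act), is_non_decreasing(linear))
```

The parameter `lam` plays the role of λ: larger values make the sigmoid steeper around $S = 0$ without changing its monotonicity.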

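For example, with $\lambda = 0.5$:

$$S = W_1X_1 + W_2X_2 = 0.7 \cdot 1.5 + 2.5 \cdot (-1) = -1.45$$

$$Y = \frac{1}{1 + e^{-\lambda S}} = \frac{1}{1 + e^{-0.5 \cdot (-1.45)}} = \frac{1}{1 + e^{0.725}} \approx 0.326$$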
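The arithmetic of this example can be reproduced in a few lines. The pairing W1 = 0.7, X1 = 1.5, W2 = 2.5, X2 = -1 is an assumption read from the order of the factors (the products, and therefore S, are the same either way), and λ = 0.5 is taken from the exponent. Because 0.7 · 1.5 + 2.5 · (-1) = 1.05 - 2.5 is negative, the sigmoid output lands below 0.5.

```python
import math

# Assumed assignment of the example's numbers to weights and inputs.
w = [0.7, 2.5]
x = [1.5, -1.0]
lam = 0.5  # slope parameter lambda, read from the exponent -0.5 * S

s = sum(wi * xi for wi, xi in zip(w, x))  # 1.05 - 2.5 = -1.45
y = 1.0 / (1.0 + math.exp(-lam * s))       # 1 / (1 + e^0.725)

print(f"S = {s:.2f}, Y = {y:.3f}")  # S = -1.45, Y = 0.326
```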

Activation functions for neural networks

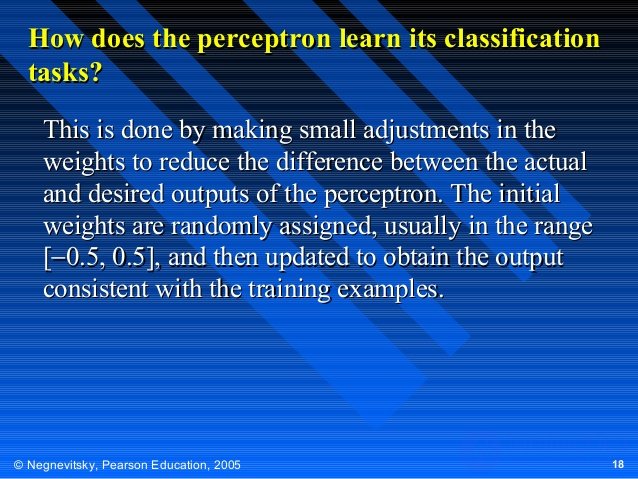

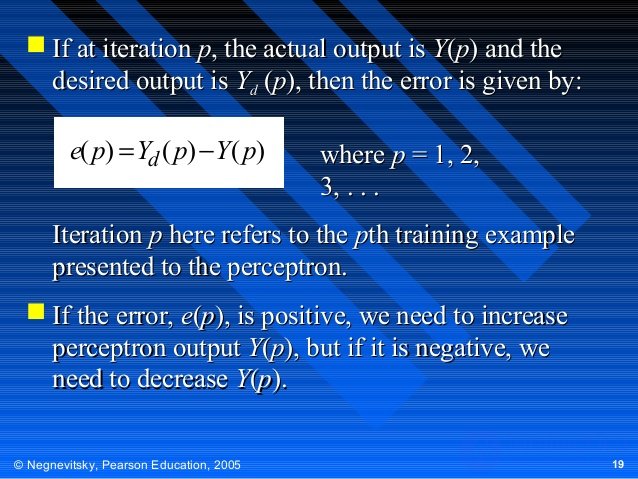

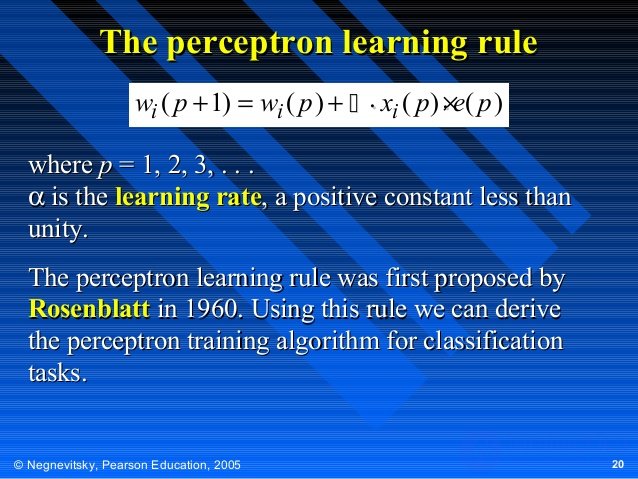

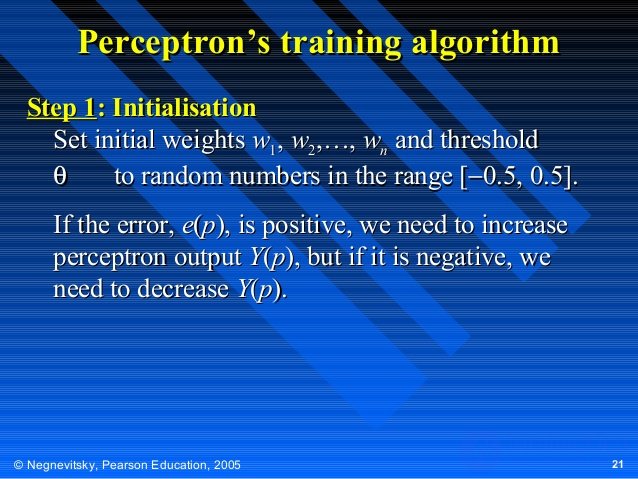

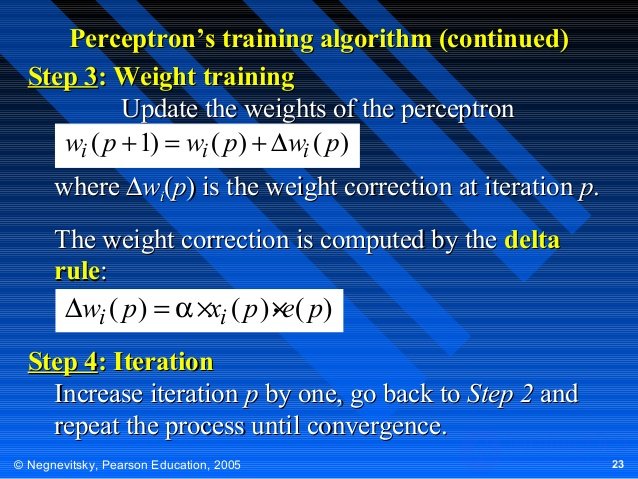

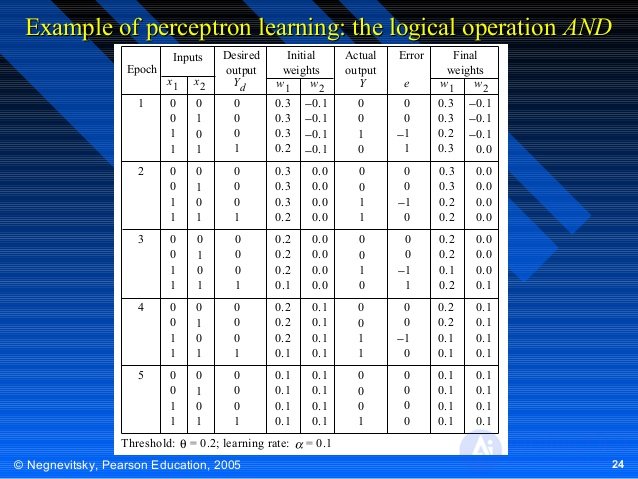

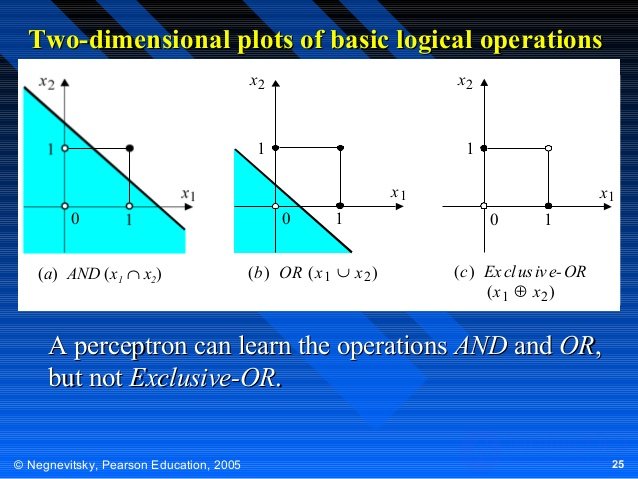

Perceptron training

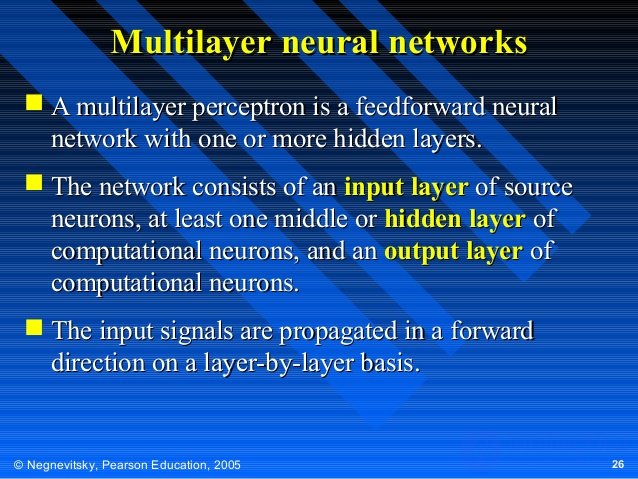
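One classic way to train a single perceptron is Rosenblatt's learning rule: compute the error e = Yd - Y between the desired and actual output, then adjust each weight by α · x_i · e. The sketch below assumes a hard-limiting step activation, a threshold θ learned as a weight on a constant input of -1, a learning rate α = 0.1, and zero initial weights; the function names and the AND training set are hypothetical illustrations, not taken from the figure.

```python
from typing import List, Sequence, Tuple


def step(s: float) -> int:
    """Hard-limiting activation: 1 if S >= 0, else 0."""
    return 1 if s >= 0 else 0


def predict(w: Sequence[float], theta: float, x: Sequence[float]) -> int:
    return step(sum(wi * xi for wi, xi in zip(w, x)) - theta)


def train_perceptron(samples: Sequence[Tuple[Sequence[float], int]],
                     alpha: float = 0.1, epochs: int = 100) -> Tuple[List[float], float]:
    """Perceptron learning rule: w_i += alpha * x_i * e, where e = Yd - Y."""
    w = [0.0] * len(samples[0][0])
    theta = 0.0
    for _ in range(epochs):
        errors = 0
        for x, yd in samples:
            e = yd - predict(w, theta, x)
            if e != 0:
                errors += 1
                w = [wi + alpha * xi * e for wi, xi in zip(w, x)]
                theta -= alpha * e  # weight on the constant input -1
        if errors == 0:  # a full pass with every pattern classified correctly
            break
    return w, theta


# Hypothetical training set: the logical AND function (linearly separable).
AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, theta = train_perceptron(AND)
print([predict(w, theta, x) for x, _ in AND])  # [0, 0, 0, 1]
```

For linearly separable data such as AND, this rule reaches a set of weights that classifies every pattern correctly after a finite number of passes; for non-separable data (for example XOR) the `epochs` limit stops the loop.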

Multilayer neural networks

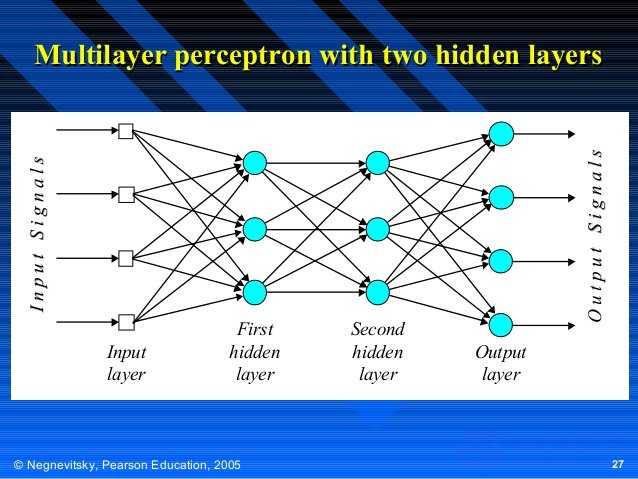

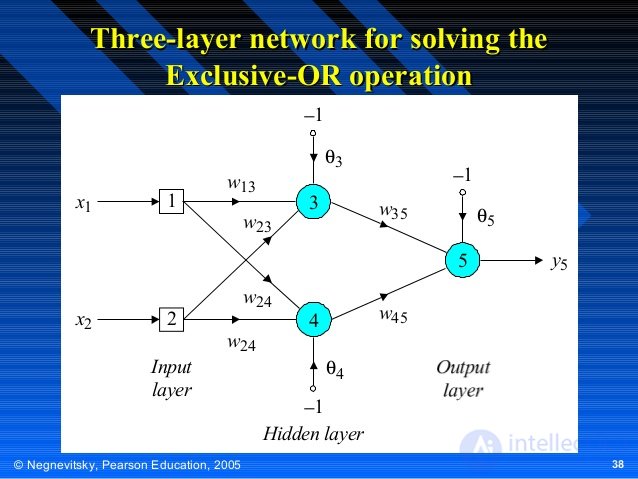

A multilayer perceptron is a feedforward neural network with one or more hidden layers. The input signals are propagated in a forward direction on a layer-by-layer basis.
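Layer-by-layer forward propagation can be sketched as a loop in which each layer's outputs become the next layer's inputs. This sketch assumes sigmoid activations in every layer and a bias term per neuron (neither is fixed by the text above); the weight layout `weights[j][i]` (from input i to neuron j) and the example network are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]  # weights[j][i]: connection from input i to neuron j


def sigmoid(s: float) -> float:
    # Equal to 1 / (1 + e^-s), but written with tanh so it cannot overflow.
    return 0.5 * (1.0 + math.tanh(0.5 * s))


def layer_forward(x: Sequence[float], weights: Matrix, biases: Sequence[float]) -> List[float]:
    """Each neuron j outputs f(sum_i weights[j][i] * x[i] + biases[j])."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def forward(x: Sequence[float], layers: Sequence[Tuple[Matrix, Sequence[float]]]) -> List[float]:
    """Propagate the input signals through the layers in order."""
    signal = list(x)
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal


# Hypothetical 2-3-1 network: 2 inputs, one hidden layer of 3 neurons, 1 output neuron.
layers = [
    ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2]),                                  # output layer
]
print(forward([1.0, 0.5], layers))
```

Adding hidden layers only means appending more `(weights, biases)` pairs; the loop itself does not change.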

What does a hidden layer hide?

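A hidden layer “hides” its desired output. Its neurons cannot be observed through the input/output behavior of the network, so there is no obvious way to know what the desired output of the hidden layer should be.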

Commercial ANNs incorporate three and sometimes four layers, including one or two hidden layers. Each layer can contain from 10 to 1000 neurons. Experimental neural networks may have five or even six layers and millions of neurons.

Back-propagation neural network

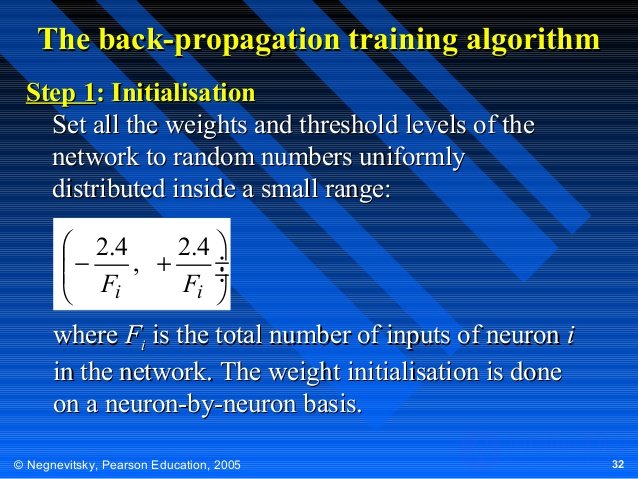

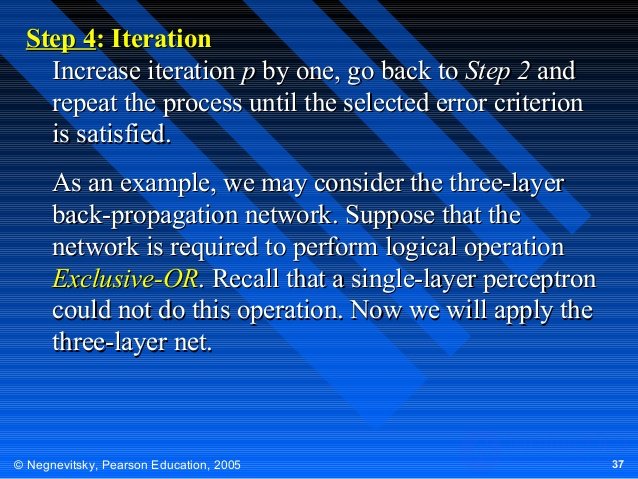

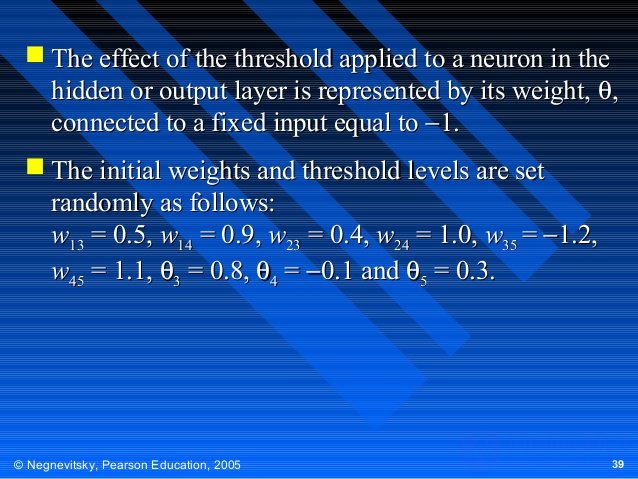

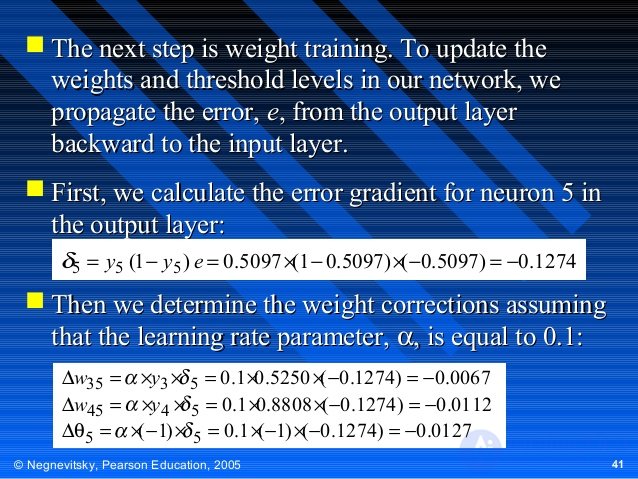

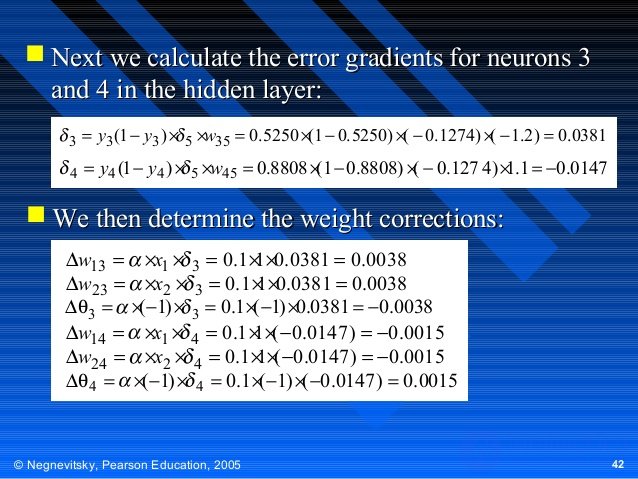

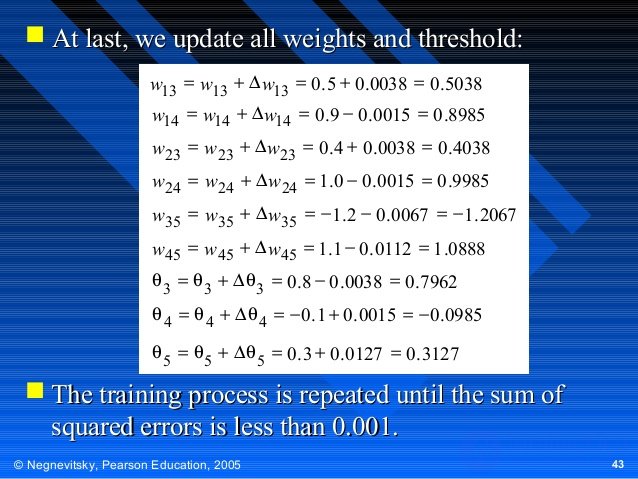

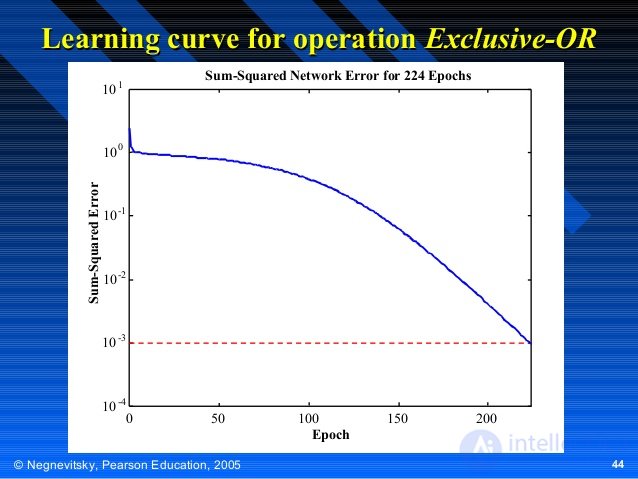

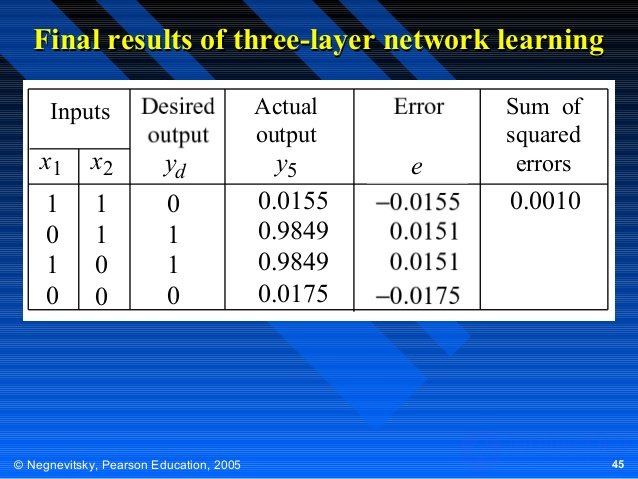

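Learning in a multilayer network proceeds in the same way as for a perceptron. A training set of input patterns is presented to the network. The network computes its output pattern, and if there is an error, that is, a difference between the actual and desired output patterns, the weights are adjusted to reduce this error.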
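The error that drives learning is simply the difference between the desired and actual output patterns, neuron by neuron. The sketch below also computes half the sum of squared errors, a common single number for training to reduce; that choice of error measure, and the function names, are assumptions for illustration.

```python
from typing import List, Sequence


def output_errors(desired: Sequence[float], actual: Sequence[float]) -> List[float]:
    """Per-neuron error e_k = desired_k - actual_k."""
    if len(desired) != len(actual):
        raise ValueError("desired and actual patterns must have the same length")
    return [d - a for d, a in zip(desired, actual)]


def sum_squared_error(desired: Sequence[float], actual: Sequence[float]) -> float:
    """E = 0.5 * sum_k e_k^2; zero only when the patterns match exactly."""
    return 0.5 * sum(e * e for e in output_errors(desired, actual))


print(output_errors([1.0, 0.0], [0.75, 0.25]))      # [0.25, -0.25]
print(sum_squared_error([1.0, 0.0], [0.75, 0.25]))  # 0.0625
```

The factor 0.5 is a convention that cancels when E is differentiated; it does not change which weights minimize the error.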

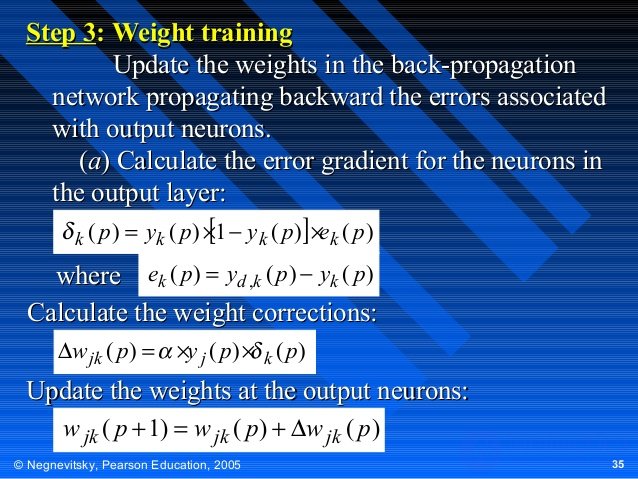

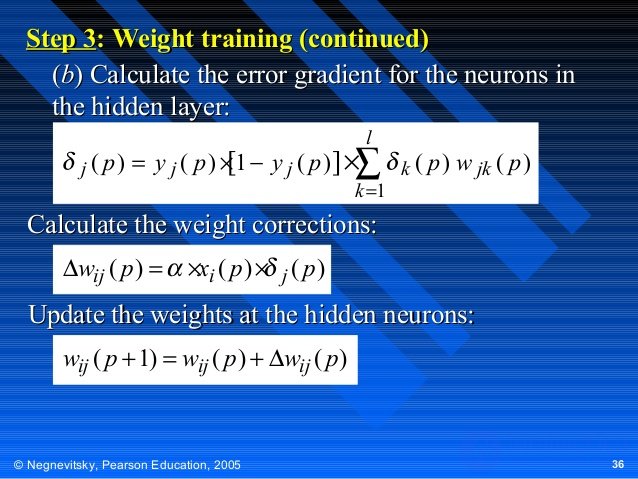

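In a back-propagation neural network, the learning algorithm has two phases.

First, a training input pattern is presented to the network's input layer. The network propagates the input pattern from layer to layer until the output layer generates the output pattern.

Second, if this pattern differs from the desired output, an error is calculated and propagated backwards through the network, from the output layer to the input layer. The weights are modified as the error is propagated.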
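The two phases can be sketched for a small network with one hidden layer. This is a minimal sketch under several assumptions that the text does not fix: sigmoid activations, a bias per neuron, the error measure E = 0.5 · Σ e_k², plain gradient descent with learning rate α = 0.5, and random initial weights in [-0.5, 0.5]. The class and method names are hypothetical; the exact update formulas used in the lecture's figures may differ.

```python
import math
import random


def sigmoid(s: float) -> float:
    return 0.5 * (1.0 + math.tanh(0.5 * s))  # equals 1 / (1 + e^-s), overflow-safe


class TwoLayerNet:
    """Inputs -> one sigmoid hidden layer -> sigmoid output layer."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int = 0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b)
             for row, b in zip(self.w2, self.b2)]
        return h, y

    def train_step(self, x, yd, alpha: float = 0.5) -> float:
        """One back-propagation step; returns the error before the update."""
        # Phase 1: the input pattern is propagated forward, layer by layer.
        h, y = self.forward(x)
        e = [d - a for d, a in zip(yd, y)]
        # Phase 2: the error is propagated backwards.
        # Output-layer error gradients: sigmoid derivative y(1 - y) times the error.
        d_out = [yk * (1.0 - yk) * ek for yk, ek in zip(y, e)]
        # Hidden-layer error gradients, using the output weights before they change.
        d_hid = [hj * (1.0 - hj) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
                 for j, hj in enumerate(h)]
        # Weight corrections, output layer first, then hidden layer.
        for k, dk in enumerate(d_out):
            for j, hj in enumerate(h):
                self.w2[k][j] += alpha * dk * hj
            self.b2[k] += alpha * dk
        for j, dj in enumerate(d_hid):
            for i, xi in enumerate(x):
                self.w1[j][i] += alpha * dj * xi
            self.b1[j] += alpha * dj
        return 0.5 * sum(ek * ek for ek in e)


net = TwoLayerNet(n_in=2, n_hidden=3, n_out=1)
for _ in range(5):
    print(net.train_step([1.0, 0.0], [1.0]))  # the error is expected to shrink step by step
```

The hidden-layer gradients are computed before any output weight is changed, so both layers are corrected with respect to the same forward pass.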

The practical application of artificial intelligence
